This is page 1 of 4. Use http://codebase.md/dbt-labs/dbt-mcp?page={x} to view the full context.

# Directory Structure

```

├── .changes

│ ├── header.tpl.md

│ ├── unreleased

│ │ ├── .gitkeep

│ │ ├── Bug Fix-20251028-143835.yaml

│ │ ├── Enhancement or New Feature-20251014-175047.yaml

│ │ └── Under the Hood-20251030-151902.yaml

│ ├── v0.1.3.md

│ ├── v0.10.0.md

│ ├── v0.10.1.md

│ ├── v0.10.2.md

│ ├── v0.10.3.md

│ ├── v0.2.0.md

│ ├── v0.2.1.md

│ ├── v0.2.10.md

│ ├── v0.2.11.md

│ ├── v0.2.12.md

│ ├── v0.2.13.md

│ ├── v0.2.14.md

│ ├── v0.2.15.md

│ ├── v0.2.16.md

│ ├── v0.2.17.md

│ ├── v0.2.18.md

│ ├── v0.2.19.md

│ ├── v0.2.2.md

│ ├── v0.2.20.md

│ ├── v0.2.3.md

│ ├── v0.2.4.md

│ ├── v0.2.5.md

│ ├── v0.2.6.md

│ ├── v0.2.7.md

│ ├── v0.2.8.md

│ ├── v0.2.9.md

│ ├── v0.3.0.md

│ ├── v0.4.0.md

│ ├── v0.4.1.md

│ ├── v0.4.2.md

│ ├── v0.5.0.md

│ ├── v0.6.0.md

│ ├── v0.6.1.md

│ ├── v0.6.2.md

│ ├── v0.7.0.md

│ ├── v0.8.0.md

│ ├── v0.8.1.md

│ ├── v0.8.2.md

│ ├── v0.8.3.md

│ ├── v0.8.4.md

│ ├── v0.9.0.md

│ ├── v0.9.1.md

│ └── v1.0.0.md

├── .changie.yaml

├── .env.example

├── .github

│ ├── actions

│ │ └── setup-python

│ │ └── action.yml

│ ├── CODEOWNERS

│ ├── ISSUE_TEMPLATE

│ │ ├── bug_report.yml

│ │ └── feature_request.yml

│ ├── pull_request_template.md

│ └── workflows

│ ├── changelog-check.yml

│ ├── codeowners-check.yml

│ ├── create-release-pr.yml

│ ├── release.yml

│ └── run-checks-pr.yaml

├── .gitignore

├── .pre-commit-config.yaml

├── .task

│ └── checksum

│ └── d2

├── .tool-versions

├── .vscode

│ ├── launch.json

│ └── settings.json

├── CHANGELOG.md

├── CONTRIBUTING.md

├── docs

│ ├── d2.png

│ └── diagram.d2

├── evals

│ └── semantic_layer

│ └── test_eval_semantic_layer.py

├── examples

│ ├── .DS_Store

│ ├── aws_strands_agent

│ │ ├── __init__.py

│ │ ├── .DS_Store

│ │ ├── dbt_data_scientist

│ │ │ ├── __init__.py

│ │ │ ├── .env.example

│ │ │ ├── agent.py

│ │ │ ├── prompts.py

│ │ │ ├── quick_mcp_test.py

│ │ │ ├── test_all_tools.py

│ │ │ └── tools

│ │ │ ├── __init__.py

│ │ │ ├── dbt_compile.py

│ │ │ ├── dbt_mcp.py

│ │ │ └── dbt_model_analyzer.py

│ │ ├── LICENSE

│ │ ├── README.md

│ │ └── requirements.txt

│ ├── google_adk_agent

│ │ ├── __init__.py

│ │ ├── main.py

│ │ ├── pyproject.toml

│ │ └── README.md

│ ├── langgraph_agent

│ │ ├── __init__.py

│ │ ├── .python-version

│ │ ├── main.py

│ │ ├── pyproject.toml

│ │ ├── README.md

│ │ └── uv.lock

│ ├── openai_agent

│ │ ├── __init__.py

│ │ ├── .gitignore

│ │ ├── .python-version

│ │ ├── main_streamable.py

│ │ ├── main.py

│ │ ├── pyproject.toml

│ │ ├── README.md

│ │ └── uv.lock

│ ├── openai_responses

│ │ ├── __init__.py

│ │ ├── .gitignore

│ │ ├── .python-version

│ │ ├── main.py

│ │ ├── pyproject.toml

│ │ ├── README.md

│ │ └── uv.lock

│ ├── pydantic_ai_agent

│ │ ├── __init__.py

│ │ ├── .gitignore

│ │ ├── .python-version

│ │ ├── main.py

│ │ ├── pyproject.toml

│ │ └── README.md

│ └── remote_mcp

│ ├── .python-version

│ ├── main.py

│ ├── pyproject.toml

│ ├── README.md

│ └── uv.lock

├── LICENSE

├── pyproject.toml

├── README.md

├── src

│ ├── client

│ │ ├── __init__.py

│ │ ├── main.py

│ │ └── tools.py

│ ├── dbt_mcp

│ │ ├── __init__.py

│ │ ├── .gitignore

│ │ ├── config

│ │ │ ├── config_providers.py

│ │ │ ├── config.py

│ │ │ ├── dbt_project.py

│ │ │ ├── dbt_yaml.py

│ │ │ ├── headers.py

│ │ │ ├── settings.py

│ │ │ └── transport.py

│ │ ├── dbt_admin

│ │ │ ├── __init__.py

│ │ │ ├── client.py

│ │ │ ├── constants.py

│ │ │ ├── run_results_errors

│ │ │ │ ├── __init__.py

│ │ │ │ ├── config.py

│ │ │ │ └── parser.py

│ │ │ └── tools.py

│ │ ├── dbt_cli

│ │ │ ├── binary_type.py

│ │ │ └── tools.py

│ │ ├── dbt_codegen

│ │ │ ├── __init__.py

│ │ │ └── tools.py

│ │ ├── discovery

│ │ │ ├── client.py

│ │ │ └── tools.py

│ │ ├── errors

│ │ │ ├── __init__.py

│ │ │ ├── admin_api.py

│ │ │ ├── base.py

│ │ │ ├── cli.py

│ │ │ ├── common.py

│ │ │ ├── discovery.py

│ │ │ ├── semantic_layer.py

│ │ │ └── sql.py

│ │ ├── gql

│ │ │ └── errors.py

│ │ ├── lsp

│ │ │ ├── __init__.py

│ │ │ ├── lsp_binary_manager.py

│ │ │ ├── lsp_client.py

│ │ │ ├── lsp_connection.py

│ │ │ └── tools.py

│ │ ├── main.py

│ │ ├── mcp

│ │ │ ├── create.py

│ │ │ └── server.py

│ │ ├── oauth

│ │ │ ├── client_id.py

│ │ │ ├── context_manager.py

│ │ │ ├── dbt_platform.py

│ │ │ ├── fastapi_app.py

│ │ │ ├── logging.py

│ │ │ ├── login.py

│ │ │ ├── refresh_strategy.py

│ │ │ ├── token_provider.py

│ │ │ └── token.py

│ │ ├── prompts

│ │ │ ├── __init__.py

│ │ │ ├── admin_api

│ │ │ │ ├── cancel_job_run.md

│ │ │ │ ├── get_job_details.md

│ │ │ │ ├── get_job_run_artifact.md

│ │ │ │ ├── get_job_run_details.md

│ │ │ │ ├── get_job_run_error.md

│ │ │ │ ├── list_job_run_artifacts.md

│ │ │ │ ├── list_jobs_runs.md

│ │ │ │ ├── list_jobs.md

│ │ │ │ ├── retry_job_run.md

│ │ │ │ └── trigger_job_run.md

│ │ │ ├── dbt_cli

│ │ │ │ ├── args

│ │ │ │ │ ├── full_refresh.md

│ │ │ │ │ ├── limit.md

│ │ │ │ │ ├── resource_type.md

│ │ │ │ │ ├── selectors.md

│ │ │ │ │ ├── sql_query.md

│ │ │ │ │ └── vars.md

│ │ │ │ ├── build.md

│ │ │ │ ├── compile.md

│ │ │ │ ├── docs.md

│ │ │ │ ├── list.md

│ │ │ │ ├── parse.md

│ │ │ │ ├── run.md

│ │ │ │ ├── show.md

│ │ │ │ └── test.md

│ │ │ ├── dbt_codegen

│ │ │ │ ├── args

│ │ │ │ │ ├── case_sensitive_cols.md

│ │ │ │ │ ├── database_name.md

│ │ │ │ │ ├── generate_columns.md

│ │ │ │ │ ├── include_data_types.md

│ │ │ │ │ ├── include_descriptions.md

│ │ │ │ │ ├── leading_commas.md

│ │ │ │ │ ├── materialized.md

│ │ │ │ │ ├── model_name.md

│ │ │ │ │ ├── model_names.md

│ │ │ │ │ ├── schema_name.md

│ │ │ │ │ ├── source_name.md

│ │ │ │ │ ├── table_name.md

│ │ │ │ │ ├── table_names.md

│ │ │ │ │ ├── tables.md

│ │ │ │ │ └── upstream_descriptions.md

│ │ │ │ ├── generate_model_yaml.md

│ │ │ │ ├── generate_source.md

│ │ │ │ └── generate_staging_model.md

│ │ │ ├── discovery

│ │ │ │ ├── get_all_models.md

│ │ │ │ ├── get_all_sources.md

│ │ │ │ ├── get_exposure_details.md

│ │ │ │ ├── get_exposures.md

│ │ │ │ ├── get_mart_models.md

│ │ │ │ ├── get_model_children.md

│ │ │ │ ├── get_model_details.md

│ │ │ │ ├── get_model_health.md

│ │ │ │ └── get_model_parents.md

│ │ │ ├── lsp

│ │ │ │ ├── args

│ │ │ │ │ ├── column_name.md

│ │ │ │ │ └── model_id.md

│ │ │ │ └── get_column_lineage.md

│ │ │ ├── prompts.py

│ │ │ └── semantic_layer

│ │ │ ├── get_dimensions.md

│ │ │ ├── get_entities.md

│ │ │ ├── get_metrics_compiled_sql.md

│ │ │ ├── list_metrics.md

│ │ │ └── query_metrics.md

│ │ ├── py.typed

│ │ ├── semantic_layer

│ │ │ ├── client.py

│ │ │ ├── gql

│ │ │ │ ├── gql_request.py

│ │ │ │ └── gql.py

│ │ │ ├── levenshtein.py

│ │ │ ├── tools.py

│ │ │ └── types.py

│ │ ├── sql

│ │ │ └── tools.py

│ │ ├── telemetry

│ │ │ └── logging.py

│ │ ├── tools

│ │ │ ├── annotations.py

│ │ │ ├── definitions.py

│ │ │ ├── policy.py

│ │ │ ├── register.py

│ │ │ ├── tool_names.py

│ │ │ └── toolsets.py

│ │ └── tracking

│ │ └── tracking.py

│ └── remote_mcp

│ ├── __init__.py

│ └── session.py

├── Taskfile.yml

├── tests

│ ├── __init__.py

│ ├── env_vars.py

│ ├── integration

│ │ ├── __init__.py

│ │ ├── dbt_codegen

│ │ │ ├── __init__.py

│ │ │ └── test_dbt_codegen.py

│ │ ├── discovery

│ │ │ └── test_discovery.py

│ │ ├── initialization

│ │ │ ├── __init__.py

│ │ │ └── test_initialization.py

│ │ ├── lsp

│ │ │ └── test_lsp_connection.py

│ │ ├── remote_mcp

│ │ │ └── test_remote_mcp.py

│ │ ├── remote_tools

│ │ │ └── test_remote_tools.py

│ │ ├── semantic_layer

│ │ │ └── test_semantic_layer.py

│ │ └── tracking

│ │ └── test_tracking.py

│ ├── mocks

│ │ └── config.py

│ └── unit

│ ├── __init__.py

│ ├── config

│ │ ├── __init__.py

│ │ ├── test_config.py

│ │ └── test_transport.py

│ ├── dbt_admin

│ │ ├── __init__.py

│ │ ├── test_client.py

│ │ ├── test_error_fetcher.py

│ │ └── test_tools.py

│ ├── dbt_cli

│ │ ├── __init__.py

│ │ ├── test_cli_integration.py

│ │ └── test_tools.py

│ ├── dbt_codegen

│ │ ├── __init__.py

│ │ └── test_tools.py

│ ├── discovery

│ │ ├── __init__.py

│ │ ├── conftest.py

│ │ ├── test_exposures_fetcher.py

│ │ └── test_sources_fetcher.py

│ ├── lsp

│ │ ├── __init__.py

│ │ ├── test_lsp_client.py

│ │ ├── test_lsp_connection.py

│ │ └── test_lsp_tools.py

│ ├── oauth

│ │ ├── test_credentials_provider.py

│ │ ├── test_fastapi_app_pagination.py

│ │ └── test_token.py

│ ├── tools

│ │ ├── test_disable_tools.py

│ │ ├── test_tool_names.py

│ │ ├── test_tool_policies.py

│ │ └── test_toolsets.py

│ └── tracking

│ └── test_tracking.py

├── ui

│ ├── .gitignore

│ ├── assets

│ │ ├── dbt_logo BLK.svg

│ │ └── dbt_logo WHT.svg

│ ├── eslint.config.js

│ ├── index.html

│ ├── package.json

│ ├── pnpm-lock.yaml

│ ├── pnpm-workspace.yaml

│ ├── README.md

│ ├── src

│ │ ├── App.css

│ │ ├── App.tsx

│ │ ├── global.d.ts

│ │ ├── index.css

│ │ ├── main.tsx

│ │ └── vite-env.d.ts

│ ├── tsconfig.app.json

│ ├── tsconfig.json

│ ├── tsconfig.node.json

│ └── vite.config.ts

└── uv.lock

```

# Files

--------------------------------------------------------------------------------

/.changes/unreleased/.gitkeep:

--------------------------------------------------------------------------------

```

```

--------------------------------------------------------------------------------

/src/dbt_mcp/.gitignore:

--------------------------------------------------------------------------------

```

ui

```

--------------------------------------------------------------------------------

/examples/langgraph_agent/.python-version:

--------------------------------------------------------------------------------

```

3.13

```

--------------------------------------------------------------------------------

/examples/openai_agent/.python-version:

--------------------------------------------------------------------------------

```

3.13

```

--------------------------------------------------------------------------------

/examples/openai_responses/.python-version:

--------------------------------------------------------------------------------

```

3.13

```

--------------------------------------------------------------------------------

/examples/pydantic_ai_agent/.python-version:

--------------------------------------------------------------------------------

```

3.13

```

--------------------------------------------------------------------------------

/examples/remote_mcp/.python-version:

--------------------------------------------------------------------------------

```

3.13

```

--------------------------------------------------------------------------------

/examples/openai_agent/.gitignore:

--------------------------------------------------------------------------------

```

.envrc

```

--------------------------------------------------------------------------------

/examples/openai_responses/.gitignore:

--------------------------------------------------------------------------------

```

.envrc

```

--------------------------------------------------------------------------------

/examples/pydantic_ai_agent/.gitignore:

--------------------------------------------------------------------------------

```

.envrc

```

--------------------------------------------------------------------------------

/ui/.gitignore:

--------------------------------------------------------------------------------

```

node_modules

```

--------------------------------------------------------------------------------

/.tool-versions:

--------------------------------------------------------------------------------

```

nodejs 20.17.0

uv 0.8.19

task 3.43.2

pnpm 10.15.1

```

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

```

__pycache__/

.venv/

.env

.mypy_cache/

.pytest_cache/

*.egg-info/

.idea/

vortex_dev_mode_output.jsonl

dbt-mcp.log

```

--------------------------------------------------------------------------------

/.env.example:

--------------------------------------------------------------------------------

```

DBT_HOST=cloud.getdbt.com

DBT_PROD_ENV_ID=your-production-environment-id

DBT_DEV_ENV_ID=your-development-environment-id

DBT_USER_ID=your-user-id

DBT_TOKEN=your-service-token

DBT_PROJECT_DIR=/path/to/your/dbt/project

DBT_PATH=/path/to/your/dbt/executable

MULTICELL_ACCOUNT_PREFIX=your-account-prefix

```

--------------------------------------------------------------------------------

/.changie.yaml:

--------------------------------------------------------------------------------

```yaml

changesDir: .changes

unreleasedDir: unreleased

headerPath: header.tpl.md

changelogPath: CHANGELOG.md

versionExt: md

versionFormat: '## {{.Version}} - {{.Time.Format "2006-01-02"}}'

kindFormat: '### {{.Kind}}'

changeFormat: '* {{.Body}}'

kinds:

- label: Breaking Change

auto: major

- label: Enhancement or New Feature

auto: minor

- label: Under the Hood

auto: patch

- label: Bug Fix

auto: patch

- label: Security

auto: patch

newlines:

afterChangelogHeader: 1

beforeChangelogVersion: 1

endOfVersion: 1

envPrefix: CHANGIE_

```

--------------------------------------------------------------------------------

/examples/aws_strands_agent/dbt_data_scientist/.env.example:

--------------------------------------------------------------------------------

```

# Local Project Development Setup

DBT_PROJECT_LOCATION= Path to your local dbt project directory

DBT_EXECUTABLE= Path to dbt executable (/Users/username/.local/bin/dbt)

# Not Required: Used for comparing fusion and core

DBT_CLASSIC= Path to dbt executable (/opt/homebrew/bin/dbt)

# LLM Keys

GOOGLE_API_KEY= Your Google API Key

ANTHROPIC_API_KEY=Your Anthropic/Claude API Key

OPENAI_API_KEY=Your OpenAI API Key

# ===== REQUIRED: dbt MCP Server Configuration =====

DBT_MCP_URL= The URL of your dbt MCP server

DBT_TOKEN= Your dbt Cloud authentication token

DBT_USER_ID= Your dbt Cloud user ID (numeric)

DBT_PROD_ENV_ID= Your dbt Cloud production environment ID (numeric)

# ===== OPTIONAL: dbt Environment IDs =====

DBT_DEV_ENV_ID= Your dbt Cloud development environment ID (numeric)

DBT_ACCOUNT_ID= Your dbt Cloud account ID (numeric)

```

--------------------------------------------------------------------------------

/.pre-commit-config.yaml:

--------------------------------------------------------------------------------

```yaml

exclude: |

(?x)^(

.mypy_cache/

| .pytest_cache/

| .venv/

)$

repos:

- repo: https://github.com/pre-commit/pre-commit-hooks

rev: v5.0.0

hooks:

- id: pretty-format-json

args: ["--autofix"]

- id: check-merge-conflict

- id: no-commit-to-branch

args: [--branch, main]

- repo: https://github.com/rhysd/actionlint

rev: v1.7.3

hooks:

- id: actionlint

- repo: https://github.com/charliermarsh/ruff-pre-commit

rev: v0.9.5

hooks:

- id: ruff

args: [--fix]

exclude: examples/

- id: ruff-format

exclude: examples/

- repo: https://github.com/astral-sh/uv-pre-commit

rev: 0.8.19

hooks:

- id: uv-lock

- repo: https://github.com/pre-commit/mirrors-mypy

rev: v1.13.0

hooks:

- id: mypy

language: system

pass_filenames: false

args: ["--show-error-codes", "--namespace-packages", "--exclude", "examples/", "."]

exclude: examples/

```

--------------------------------------------------------------------------------

/examples/remote_mcp/README.md:

--------------------------------------------------------------------------------

```markdown

```

--------------------------------------------------------------------------------

/examples/openai_responses/README.md:

--------------------------------------------------------------------------------

```markdown

# OpenAI Responses

An example of using remote dbt-mcp with OpenAI's Responses API

## Usage

`uv run main.py`

```

--------------------------------------------------------------------------------

/ui/README.md:

--------------------------------------------------------------------------------

```markdown

# dbt MCP

This UI enables easier configuration of dbt MCP. Check out `package.json` and `Taskfile.yml` for usage.

```

--------------------------------------------------------------------------------

/examples/langgraph_agent/README.md:

--------------------------------------------------------------------------------

```markdown

# LangGraph Agent Example

This is a simple example of how to create conversational agent with remote dbt MCP & LangGraph.

## Usage

1. Set an `ANTHROPIC_API_KEY` environment variable with your Anthropic API key.

2. Run `uv run main.py`.

```

--------------------------------------------------------------------------------

/examples/pydantic_ai_agent/README.md:

--------------------------------------------------------------------------------

```markdown

# Pydantic AI Agent

An example of using Pydantic AI with the remote dbt MCP server.

## Config

Set the following environment variables:

- `OPENAI_API_KEY` (or the API key for any other model supported by PydanticAI)

- `DBT_TOKEN`

- `DBT_PROD_ENV_ID`

- `DBT_HOST` (if not using the default `cloud.getdbt.com`)

## Usage

`uv run main.py`

```

--------------------------------------------------------------------------------

/examples/google_adk_agent/README.md:

--------------------------------------------------------------------------------

```markdown

# Google ADK Agent for dbt MCP

An example of using Google Agent Development Kit with the remote dbt MCP server.

## Config

Set the following environment variables:

- `GOOGLE_GENAI_API_KEY` (or the API key for any other model supported by google ADK)

- `ADK_MODEL` (Choose a different model (default: gemini-2.0-flash))

- `DBT_TOKEN`

- `DBT_PROD_ENV_ID`

- `DBT_HOST` (if not using the default `cloud.getdbt.com`)

- `DBT_PROJECT_DIR` (if using dbt core)

### Usage

`uv run main.py`

```

--------------------------------------------------------------------------------

/examples/openai_agent/README.md:

--------------------------------------------------------------------------------

```markdown

# OpenAI Agent

Examples of using dbt-mcp with OpenAI's agent framework

## Usage

### Local MCP Server

- set up the env var file like described in the README and make sure that the `MCPServerStdio` points to it

- set up the env var `OPENAI_API_KEY` with your OpenAI API key

- run `uv run main.py`

### MCP Streamable HTTP Server

- set up the env var `OPENAI_API_KEY` with your OpenAI API key

- set up the env var `DBT_TOKEN` with your dbt API token

- set up the env var `DBT_PROD_ENV_ID` with your dbt production environment ID

- set up the env var `DBT_HOST` with your dbt host (default is `cloud.getdbt.com`)

- run `uv run main_streamable.py`

```

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

```markdown

# dbt MCP Server

[](https://www.bestpractices.dev/projects/11137)

This MCP (Model Context Protocol) server provides various tools to interact with dbt. You can use this MCP server to provide AI agents with context of your project in dbt Core, dbt Fusion, and dbt Platform.

Read our documentation [here](https://docs.getdbt.com/docs/dbt-ai/about-mcp) to learn more. [This](https://docs.getdbt.com/blog/introducing-dbt-mcp-server) blog post provides more details for what is possible with the dbt MCP server.

## Feedback

If you have comments or questions, create a GitHub Issue or join us in [the community Slack](https://www.getdbt.com/community/join-the-community) in the `#tools-dbt-mcp` channel.

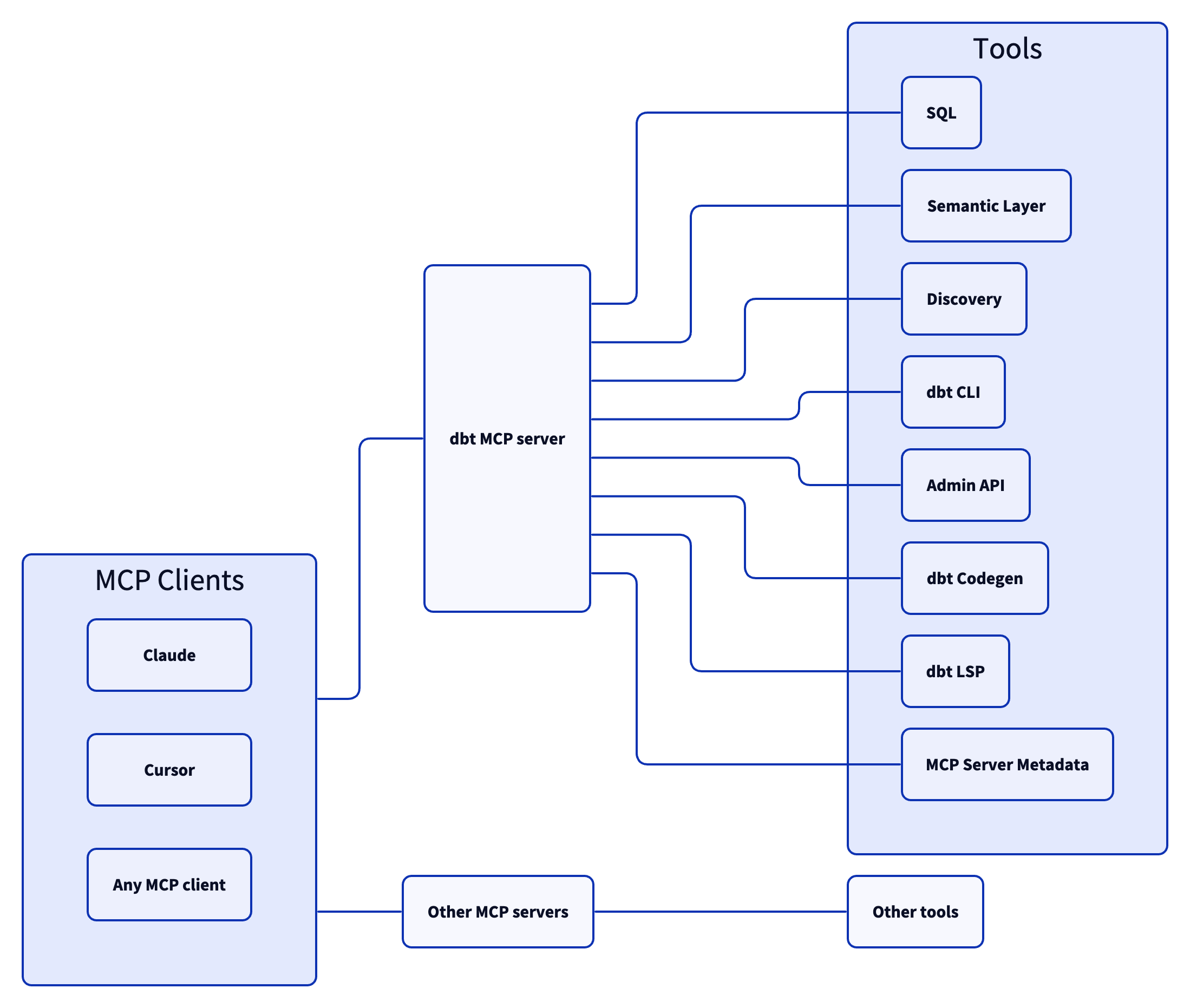

## Architecture

The dbt MCP server architecture allows for your agent to connect to a variety of tools.

## Examples

Commonly, you will connect the dbt MCP server to an agent product like Claude or Cursor. However, if you are interested in creating your own agent, check out [the examples directory](https://github.com/dbt-labs/dbt-mcp/tree/main/examples) for how to get started.

## Contributing

Read `CONTRIBUTING.md` for instructions on how to get involved!

```

--------------------------------------------------------------------------------

/examples/aws_strands_agent/README.md:

--------------------------------------------------------------------------------

```markdown

# dbt AWS Agentcore Multi-Agent

A multi-agent system built with AWS Bedrock Agent Core that provides intelligent dbt project management and analysis capabilities.

## Architecture

This project implements a multi-agent architecture with three specialized tools:

1. **dbt Compile Tool** - Local dbt compilation functionality

2. **dbt Model Analyzer** - Data model analysis and recommendations

3. **dbt MCP Server Tool** - Remote dbt MCP server connection

## 📋 Prerequisites

- Python 3.10+

- dbt CLI installed and configured

- dbt Fusion installed

- AWS Agentcore setup

## 🛠️ Installation

1. **Clone the repository**:

```bash

git clone <repository-url>

cd dbt-aws-agent

```

2. **Create a virtual environment**:

```bash

python -m venv .venv

source .venv/bin/activate # On Windows: .venv\Scripts\activate

```

3. **Install dependencies**:

```bash

pip install -r requirements.txt

```

4. **Set up environment variables**:

```bash

cp .env.example .env

# Edit .env with your configuration

```

5. **Run**:

```bash

cd dbt_data_scientist

python agent.py

```

## Project Structure

```

dbt-aws-agent/

├── dbt_data_scientist/ # Main application package

│ ├── __init__.py # Package initialization

│ ├── agent.py # Main agent with Bedrock Agent Core integration

│ ├── prompts.py # Agent prompts and instructions

│ ├── test_all_tools.py # Comprehensive test suite

│ ├── quick_mcp_test.py # Quick MCP connectivity test

│ └── tools/ # Tool implementations

│ ├── __init__.py

│ ├── dbt_compile.py # Local dbt compilation tool

│ ├── dbt_mcp.py # Remote dbt MCP server tool (translated from Google ADK)

│ └── dbt_model_analyzer.py # Data model analysis tool

├── requirements.txt # Python dependencies

├── env.example # Environment configuration template

└── README.md # This documentation

```

## Tools Overview

### 1. dbt Compile Tool (`dbt_compile.py`)

- **Purpose**: Local dbt project compilation and troubleshooting

- **Features**:

- Runs `dbt compile --log-format json` locally

- Parses JSON logs for structured analysis

- Provides compilation error analysis and recommendations

- Routes to specialized dbt compile agent for intelligent responses

### 2. dbt Model Analyzer Tool (`dbt_model_analyzer.py`)

- **Purpose**: Data model analysis and recommendations

- **Features**:

- Analyzes model structure and dependencies

- Assesses data quality patterns and test coverage

- Reviews adherence to dbt best practices

- Provides optimization recommendations

- Generates model documentation suggestions

### 3. dbt MCP Server Tool (`dbt_mcp.py`)

- **Purpose**: Remote dbt MCP server connection using AWS Bedrock Agent Core

- **Features**:

- Connects to remote dbt MCP server using streamable HTTP client

- Supports dbt Cloud authentication with headers

- Lists available MCP tools dynamically

- Executes dbt MCP tool functions

- Provides intelligent query routing to appropriate tools

- Built-in connection testing and error handling

```

### 3. Test the Setup

Before running the full application, test that everything is working:

```bash

# Quick MCP test

python dbt_data_scientist/quick_mcp_test.py

# Full test suite

python dbt_data_scientist/test_all_tools.py

```

### 4. Run the Application

#### For AWS Bedrock Agent Core:

```bash

python -m dbt_data_scientist.agent

```

#### For Local Testing:

```bash

python -m dbt_data_scientist.agent

```

## Usage Examples

### dbt Compile Tool

```

> "Compile my dbt project and find any issues"

> "What's wrong with my models in the staging folder?"

```

### dbt Model Analyzer Tool

```

> "Analyze my data modeling approach for best practices"

> "Review the dependencies in my dbt project"

> "Check the data quality patterns in my models"

```

### dbt MCP Server Tool

```

> "List all available dbt MCP tools"

> "Show me the catalog from dbt Cloud"

> "Run my models in dbt Cloud"

> "What tests are available in my dbt project?"

```

## Testing

The project includes comprehensive testing capabilities to verify all components are working correctly.

### Quick Tests

#### Test MCP Connection Only

```bash

python dbt_data_scientist/quick_mcp_test.py

```

- Fast, minimal test of MCP connectivity

- Verifies environment variables and connection

- Lists available MCP tools

#### Test MCP Tool Directly

```bash

python dbt_data_scientist/tools/dbt_mcp.py

```

- Tests the MCP module directly

- Built-in connection testing

- Shows detailed error messages

### Comprehensive Testing

#### Full Test Suite

```bash

python dbt_data_scientist/test_all_tools.py

```

- Tests all tools individually

- Verifies agent initialization

- Tests tool integration

- Comprehensive error reporting

### What Tests Verify

1. **Environment Variables** - All required variables are set

2. **Tool Imports** - All tools can be imported successfully

3. **Agent Initialization** - Agent loads with all tools

4. **Individual Tool Testing** - Each tool executes correctly

5. **Agent Integration** - Tools work together in the agent

6. **MCP Connectivity** - Remote MCP server connection works

### Test Output Example

```

🚀 Complete Tool and Agent Test Suite

==================================================

🔧 Testing Environment Setup

------------------------------

✅ DBT_MCP_URL: https://your-mcp-server.com

✅ DBT_TOKEN: ****************

✅ DBT_USER_ID: your_user_id

✅ DBT_PROD_ENV_ID: your_env_id

✅ Environment setup complete!

📦 Testing Tool Imports

------------------------------

✅ All tools imported successfully

✅ dbt_compile is callable

✅ dbt_mcp_tool is callable

✅ dbt_model_analyzer_agent is callable

... (more tests)

🎉 All tests passed! Your agent and tools are working correctly.

```

## Key Features

### 🔄 **Intelligent Routing**

The main agent automatically routes queries to the appropriate specialized tool based on keywords and context.

### 🌐 **MCP Server Integration**

Seamless connection to remote dbt MCP servers with proper authentication and error handling.

### 📊 **Comprehensive Analysis**

Multi-faceted analysis including compilation, modeling best practices, and data quality assessment.

### ⚡ **Async Support**

Full async/await support for MCP operations while maintaining compatibility with Bedrock Agent Core.

### 🛡️ **Error Handling**

Robust error handling and fallback mechanisms for all tool operations.

## Development

### Adding New Tools

1. Create a new tool file in `dbt_data_scientist/tools/`

2. Use the `@tool` decorator from strands

3. Add the tool to the main agent's tools list in `agent.py`

4. Update the routing logic in the main agent's system prompt

## Troubleshooting

### Testing First

Before troubleshooting, run the test suite to identify issues:

```bash

# Quick test for MCP issues

python dbt_data_scientist/quick_mcp_test.py

# Comprehensive test for all issues

python dbt_data_scientist/test_all_tools.py

```

### Common Issues

1. **MCP Connection Failed**

- Run `python dbt_data_scientist/quick_mcp_test.py` to diagnose

- Verify `DBT_MCP_URL` is correct

- Check authentication headers

- Ensure dbt MCP server is accessible

- Check network connectivity

2. **dbt Compile Errors**

- Verify `DBT_PROJECT_LOCATION` path exists

- Check `DBT_EXECUTABLE` is in PATH

- Ensure dbt project is valid

- Run `dbt compile` manually to test

3. **Environment Variable Issues**

- Copy `env.example` to `.env`

- Verify all required variables are set

- Check variable values are correct

- Use the test suite to validate configuration

4. **Agent Initialization Issues**

- Check that all tools can be imported

- Verify MCP server is accessible

- Ensure all dependencies are installed

- Run individual tool tests

### Debug Mode

For detailed debugging, you can run individual components:

```bash

# Test MCP tool directly

python dbt_data_scientist/tools/dbt_mcp.py

# Test individual tools

python -c "from dbt_data_scientist.tools import dbt_compile; print(dbt_compile('test'))"

```

## Contributing

1. Fork the repository

2. Create a feature branch

3. Make your changes

4. Add tests for new functionality

5. Submit a pull request

## License

This project is licensed under the MIT License - see the LICENSE file for details.

```

--------------------------------------------------------------------------------

/CONTRIBUTING.md:

--------------------------------------------------------------------------------

```markdown

# Contributing

With [task](https://taskfile.dev/) installed, simply run `task` to see the list of available commands. For comments, questions, or requests open a GitHub issue.

## Setup

1. Clone the repository:

```shell

git clone https://github.com/dbt-labs/dbt-mcp.git

cd dbt-mcp

```

2. [Install uv](https://docs.astral.sh/uv/getting-started/installation/)

3. [Install Task](https://taskfile.dev/installation/)

4. Run `task install`

5. Configure environment variables:

```shell

cp .env.example .env

```

Then edit `.env` with your specific environment variables (see the `Configuration` section of the `README.md`).

## Testing

This repo has automated tests which can be run with `task test:unit`. Additionally, there is a simple CLI tool which can be used to test by running `task client`. If you would like to test in a client like Cursor or Claude, use a configuration file like this:

```

{

"mcpServers": {

"dbt": {

"command": "<path-to-uv>",

"args": [

"--directory",

"<path-to-this-directory>/dbt-mcp",

"run",

"dbt-mcp",

"--env-file",

"<path-to-this-directory>/dbt-mcp/.env"

]

}

}

}

```

Or, if you would like to test with Oauth, use a configuration like this:

```

{

"mcpServers": {

"dbt": {

"command": "<path-to-uv>",

"args": [

"--directory",

"<path-to-this-directory>/dbt-mcp",

"run",

"dbt-mcp",

],

"env": {

"DBT_HOST": "<dbt-host-with-custom-subdomain>",

}

}

}

}

```

For improved debugging, you can set the `DBT_MCP_SERVER_FILE_LOGGING=true` environment variable to log to a `./dbt-mcp.log` file.

## Signed Commits

Before committing changes, ensure that you have set up [signed commits](https://docs.github.com/en/authentication/managing-commit-signature-verification/signing-commits).

This repo requires signing on all commits for PRs.

## Changelog

Every PR requires a changelog entry. [Install changie](https://changie.dev/) and run `changie new` to create a new changelog entry.

## Debugging

The dbt-mcp server runs with `stdio` transport by default which does not allow for Python debugger support. For debugging with breakpoints, use `streamable-http` transport.

### Option 1: MCP Inspector Only (No Breakpoints)

1. Run `task inspector` - this starts both the server and inspector automatically

2. Open MCP Inspector UI

3. Use "STDIO" Transport Type to connect

4. Test tools interactively in the inspector UI (uses `stdio` transport, no debugger support)

### Option 2: VS Code Debugger with Breakpoints (Recommended for Debugging)

1. Set breakpoints in your code

2. Press `F5` or select "debug dbt-mcp" from the Run menu

3. Open MCP Inspector UI via `npx @modelcontextprotocol/inspector`

4. Connect to `http://localhost:8000/mcp/v1` using "Streamable HTTP" transport and "Via Proxy" connection type

5. Call tools from Inspector - your breakpoints will trigger

### Option 3: Manual Debugging with `task dev`

1. Run `task dev` - this starts the server with `streamable-http` transport on `http://localhost:8000`

2. Set breakpoints in your code

3. Attach your debugger manually (see [debugpy documentation](https://github.com/microsoft/debugpy#debugpy) for examples)

4. Open MCP Inspector via `npx @modelcontextprotocol/inspector`

5. Connect to `http://localhost:8000/mcp/v1` using "Streamable HTTP" transport and "Via Proxy" connection type

6. Call tools from Inspector - your breakpoints will trigger

**Note:** `task dev` uses `streamable-http` by default. The `streamable-http` transport allows the debugger and MCP Inspector to work simultaneously without conflicts. To override, use `MCP_TRANSPORT=stdio task dev`.

If you encounter any problems, you can try running `task run` to see errors in your terminal.

## Release

Only people in the `CODEOWNERS` file should trigger a new release with these steps:

1. Consider these guidelines when choosing a version number:

- Major

- Removing a tool or toolset

- Changing the behavior of existing environment variables or configurations

- Minor

- Changes to config system related to the function signature of the register functions (e.g. `register_discovery_tools`)

- Adding optional parameters to a tool function signature

- Adding a new tool or toolset

- Removing or adding non-optional parameters from tool function signatures

- Patch

- Bug and security fixes - only major security and bug fixes will be back-ported to prior minor and major versions

- Dependency updates which don’t change behavior

- Minor enhancements

- Editing a tool or parameter description prompt

- Adding an allowed environment variable with the `DBT_MCP_` prefix

2. Trigger the [Create release PR Action](https://github.com/dbt-labs/dbt-mcp/actions/workflows/create-release-pr.yml).

- If the release is NOT a pre-release, just pick if the bump should be patch, minor or major

- If the release is a pre-release, set the bump and the pre-release suffix. We support alpha.N, beta.N and rc.N.

- use alpha for early releases of experimental features that specific people might want to test. Significant changes can be expected between alpha and the official release.

- use beta for releases that are mostly stable but still in development. It can be used to gather feedback from a group of peopleon how a specific feature should work.

- use rc for releases that are mostly stable and already feature complete. Only bugfixes and minor changes are expected between rc and the official release.

- Picking the prerelease suffix will depend on whether the last release was the stable release or a pre-release:

| Last Stable | Last Pre-release | Bump | Pre-release Suffix | Resulting Version |

| ----------- | ---------------- | ----- | ------------------ | ----------------- |

| 1.2.0 | - | minor | beta.1 | 1.3.0-beta.1 |

| 1.2.0 | 1.3.0-beta.1 | minor | beta.2 | 1.3.0-beta.2 |

| 1.2.0 | 1.3.0-beta.2 | minor | rc.1 | 1.3.0-rc.1 |

| 1.2.0 | 1.3.0-rc.1 | minor | | 1.3.0 |

| 1.2.0 | 1.3.0-beta.2 | minor | - | 1.3.0 |

| 1.2.0 | - | major | rc.1 | 2.0.0-rc.1 |

| 1.2.0 | 2.0.0-rc.1 | major | - | 2.0.0 |

3. Get this PR approved & merged in (if the resulting release name is not the one expected in the PR, just close the PR and try again step 1)

4. This will trigger the `Release dbt-mcp` Action. On the `Summary` page of this Action a member of the `CODEOWNERS` file will have to manually approve the release. The rest of the release process is automated.

```

--------------------------------------------------------------------------------

/examples/google_adk_agent/__init__.py:

--------------------------------------------------------------------------------

```python

```

--------------------------------------------------------------------------------

/examples/langgraph_agent/__init__.py:

--------------------------------------------------------------------------------

```python

```

--------------------------------------------------------------------------------

/examples/openai_agent/__init__.py:

--------------------------------------------------------------------------------

```python

```

--------------------------------------------------------------------------------

/examples/openai_responses/__init__.py:

--------------------------------------------------------------------------------

```python

```

--------------------------------------------------------------------------------

/examples/pydantic_ai_agent/__init__.py:

--------------------------------------------------------------------------------

```python

```

--------------------------------------------------------------------------------

/src/client/__init__.py:

--------------------------------------------------------------------------------

```python

```

--------------------------------------------------------------------------------

/src/dbt_mcp/__init__.py:

--------------------------------------------------------------------------------

```python

```

--------------------------------------------------------------------------------

/src/dbt_mcp/dbt_admin/__init__.py:

--------------------------------------------------------------------------------

```python

```

--------------------------------------------------------------------------------

/src/dbt_mcp/mcp/create.py:

--------------------------------------------------------------------------------

```python

```

--------------------------------------------------------------------------------

/src/remote_mcp/__init__.py:

--------------------------------------------------------------------------------

```python

```

--------------------------------------------------------------------------------

/tests/__init__.py:

--------------------------------------------------------------------------------

```python

```

--------------------------------------------------------------------------------

/tests/integration/__init__.py:

--------------------------------------------------------------------------------

```python

```

--------------------------------------------------------------------------------

/tests/integration/dbt_codegen/__init__.py:

--------------------------------------------------------------------------------

```python

```

--------------------------------------------------------------------------------

/tests/integration/initialization/__init__.py:

--------------------------------------------------------------------------------

```python

```

--------------------------------------------------------------------------------

/tests/unit/__init__.py:

--------------------------------------------------------------------------------

```python

```

--------------------------------------------------------------------------------

/tests/unit/config/__init__.py:

--------------------------------------------------------------------------------

```python

```

--------------------------------------------------------------------------------

/tests/unit/dbt_admin/__init__.py:

--------------------------------------------------------------------------------

```python

```

--------------------------------------------------------------------------------

/tests/unit/dbt_cli/__init__.py:

--------------------------------------------------------------------------------

```python

```

--------------------------------------------------------------------------------

/tests/unit/dbt_codegen/__init__.py:

--------------------------------------------------------------------------------

```python

```

--------------------------------------------------------------------------------

/tests/unit/discovery/__init__.py:

--------------------------------------------------------------------------------

```python

```

--------------------------------------------------------------------------------

/ui/src/index.css:

--------------------------------------------------------------------------------

```css

```

--------------------------------------------------------------------------------

/examples/aws_strands_agent/__init__.py:

--------------------------------------------------------------------------------

```python

```

--------------------------------------------------------------------------------

/.changes/v0.2.17.md:

--------------------------------------------------------------------------------

```markdown

## v0.2.17 - 2025-07-18

```

--------------------------------------------------------------------------------

/ui/src/global.d.ts:

--------------------------------------------------------------------------------

```typescript

declare module "*.css";

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/lsp/args/column_name.md:

--------------------------------------------------------------------------------

```markdown

The column name to trace lineage for.

```

--------------------------------------------------------------------------------

/ui/pnpm-workspace.yaml:

--------------------------------------------------------------------------------

```yaml

ignoredBuiltDependencies:

- esbuild

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_cli/list.md:

--------------------------------------------------------------------------------

```markdown

List the resources in the your dbt project.

```

--------------------------------------------------------------------------------

/src/dbt_mcp/oauth/client_id.py:

--------------------------------------------------------------------------------

```python

OAUTH_CLIENT_ID = "34ec61e834cdffd9dd90a32231937821"

```

--------------------------------------------------------------------------------

/tests/unit/lsp/__init__.py:

--------------------------------------------------------------------------------

```python

"""Unit tests for the dbt Fusion LSP integration."""

```

--------------------------------------------------------------------------------

/.changes/v0.2.11.md:

--------------------------------------------------------------------------------

```markdown

## v0.2.11 - 2025-07-03

### Bug Fix

* fix order_by input

```

--------------------------------------------------------------------------------

/.changes/v0.1.3.md:

--------------------------------------------------------------------------------

```markdown

## v0.1.3 and before

* Initial releases before using changie

```

--------------------------------------------------------------------------------

/examples/aws_strands_agent/dbt_data_scientist/__init__.py:

--------------------------------------------------------------------------------

```python

from . import agent

from . import prompts

from . import tools

```

--------------------------------------------------------------------------------

/src/dbt_mcp/dbt_admin/run_results_errors/__init__.py:

--------------------------------------------------------------------------------

```python

from .parser import ErrorFetcher

__all__ = ["ErrorFetcher"]

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/__init__.py:

--------------------------------------------------------------------------------

```python

from dbt_mcp.prompts.prompts import get_prompt # noqa: F401

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_codegen/args/table_name.md:

--------------------------------------------------------------------------------

```markdown

The source table name to generate a base model for (required)

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_codegen/args/source_name.md:

--------------------------------------------------------------------------------

```markdown

The source name as defined in your sources.yml file (required)

```

--------------------------------------------------------------------------------

/ui/src/vite-env.d.ts:

--------------------------------------------------------------------------------

```typescript

/// <reference types="vite/client" />

declare module "*.css";

```

--------------------------------------------------------------------------------

/.changes/v0.4.1.md:

--------------------------------------------------------------------------------

```markdown

## v0.4.1 - 2025-08-08

### Under the Hood

* Upgrade dbt-sl-sdk

```

--------------------------------------------------------------------------------

/src/dbt_mcp/dbt_codegen/__init__.py:

--------------------------------------------------------------------------------

```python

"""dbt-codegen MCP tools for automated dbt code generation."""

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/discovery/get_all_models.md:

--------------------------------------------------------------------------------

```markdown

Get the name and description of all dbt models in the environment.

```

--------------------------------------------------------------------------------

/.changes/v0.8.1.md:

--------------------------------------------------------------------------------

```markdown

## v0.8.1 - 2025-09-22

### Under the Hood

* Create ConfigProvider ABC

```

--------------------------------------------------------------------------------

/.changes/v0.2.19.md:

--------------------------------------------------------------------------------

```markdown

## v0.2.19 - 2025-07-22

### Under the Hood

* Create list of tool names

```

--------------------------------------------------------------------------------

/.changes/v0.2.10.md:

--------------------------------------------------------------------------------

```markdown

## v0.2.10 - 2025-07-03

### Enhancement or New Feature

* Upgrade MCP SDK

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_codegen/args/schema_name.md:

--------------------------------------------------------------------------------

```markdown

The schema name in your database that contains the source tables (required)

```

--------------------------------------------------------------------------------

/.changes/v0.2.3.md:

--------------------------------------------------------------------------------

```markdown

## v0.2.3 - 2025-06-02

### Under the Hood

* Fix release action to fetch tags

```

--------------------------------------------------------------------------------

/.changes/v0.10.1.md:

--------------------------------------------------------------------------------

```markdown

## v0.10.1 - 2025-10-02

### Bug Fix

* Fix get_job_run_error truncated log output

```

--------------------------------------------------------------------------------

/.changes/v0.2.12.md:

--------------------------------------------------------------------------------

```markdown

## v0.2.12 - 2025-07-09

### Bug Fix

* Catch every tool error and surface as string

```

--------------------------------------------------------------------------------

/.changes/v0.2.13.md:

--------------------------------------------------------------------------------

```markdown

## v0.2.13 - 2025-07-11

### Under the Hood

* Decouple discovery tools from FastMCP

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_codegen/args/database_name.md:

--------------------------------------------------------------------------------

```markdown

The database that contains your source data (optional, defaults to target database)

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_codegen/args/case_sensitive_cols.md:

--------------------------------------------------------------------------------

```markdown

Whether to quote column names to preserve case sensitivity (optional, default false)

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_codegen/args/tables.md:

--------------------------------------------------------------------------------

```markdown

List of table names to generate base models for from the specified source (required)

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_cli/docs.md:

--------------------------------------------------------------------------------

```markdown

The docs command is responsible for generating your project's documentation website.

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_codegen/args/materialized.md:

--------------------------------------------------------------------------------

```markdown

The materialization type for the model config block (optional, e.g., 'view', 'table')

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_codegen/args/include_data_types.md:

--------------------------------------------------------------------------------

```markdown

Whether to include data types in the model column definitions (optional, default true)

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_codegen/args/leading_commas.md:

--------------------------------------------------------------------------------

```markdown

Whether to use leading commas instead of trailing commas in SQL (optional, default false)

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_codegen/args/include_descriptions.md:

--------------------------------------------------------------------------------

```markdown

Whether to include placeholder descriptions in the generated YAML (optional, default false)

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_codegen/args/generate_columns.md:

--------------------------------------------------------------------------------

```markdown

Whether to include column definitions in the generated source YAML (optional, default false)

```

--------------------------------------------------------------------------------

/.changes/v0.2.4.md:

--------------------------------------------------------------------------------

```markdown

## v0.2.4 - 2025-06-03

### Bug Fix

* Add the missing selector argument when running commands

```

--------------------------------------------------------------------------------

/.changes/v0.10.0.md:

--------------------------------------------------------------------------------

```markdown

## v0.10.0 - 2025-10-01

### Enhancement or New Feature

* Add get_job_run_error to Admin API tools

```

--------------------------------------------------------------------------------

/.changes/v0.2.1.md:

--------------------------------------------------------------------------------

```markdown

## v0.2.1 - 2025-05-28

### Under the Hood

* Remove hatch from tag action

* Manually triggering release

```

--------------------------------------------------------------------------------

/.changes/unreleased/Bug Fix-20251028-143835.yaml:

--------------------------------------------------------------------------------

```yaml

kind: Bug Fix

body: Minor update to the instruction for LSP tool

time: 2025-10-28T14:38:35.729371+01:00

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_cli/build.md:

--------------------------------------------------------------------------------

```markdown

The dbt build command will:

- run models

- test tests

- snapshot snapshots

- seed seeds

In DAG order.

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_codegen/args/upstream_descriptions.md:

--------------------------------------------------------------------------------

```markdown

Whether to include descriptions from upstream models for matching column names (optional, default false)

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_cli/test.md:

--------------------------------------------------------------------------------

```markdown

dbt test runs data tests defined on models, sources, snapshots, and seeds and unit tests defined on SQL models.

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_codegen/args/table_names.md:

--------------------------------------------------------------------------------

```markdown

List of specific table names to generate source definitions for (optional, generates all tables if not specified)

```

--------------------------------------------------------------------------------

/.changes/unreleased/Enhancement or New Feature-20251014-175047.yaml:

--------------------------------------------------------------------------------

```yaml

kind: Enhancement or New Feature

body: This adds the get all sources tool.

time: 2025-10-14T17:50:47.539453+01:00

```

--------------------------------------------------------------------------------

/.changes/v0.9.1.md:

--------------------------------------------------------------------------------

```markdown

## v0.9.1 - 2025-09-30

### Under the Hood

* Reorganize code and add ability to format the arrow table differently

```

--------------------------------------------------------------------------------

/.changes/unreleased/Under the Hood-20251030-151902.yaml:

--------------------------------------------------------------------------------

```yaml

kind: Under the Hood

body: Add version number guidelines to contributing.md

time: 2025-10-30T15:19:02.963083-05:00

```

--------------------------------------------------------------------------------

/.changes/v0.2.15.md:

--------------------------------------------------------------------------------

```markdown

## v0.2.15 - 2025-07-16

### Under the Hood

* Refactor sl tools for reusability

* Update VSCode instructions in README

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_cli/args/sql_query.md:

--------------------------------------------------------------------------------

```markdown

This is the SQL query to run against the data platform. Do not add a limit to this query. Use the `limit` argument instead.

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/prompts.py:

--------------------------------------------------------------------------------

```python

from pathlib import Path

def get_prompt(name: str) -> str:

return (Path(__file__).parent / f"{name}.md").read_text()

```

--------------------------------------------------------------------------------

/examples/aws_strands_agent/dbt_data_scientist/tools/__init__.py:

--------------------------------------------------------------------------------

```python

from .dbt_compile import dbt_compile

from .dbt_mcp import dbt_mcp_tool

from .dbt_model_analyzer import dbt_model_analyzer_agent

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_cli/args/full_refresh.md:

--------------------------------------------------------------------------------

```markdown

If true, dbt will force a complete rebuild of incremental models (built from scratch) rather than processing new or modifed data.

```

--------------------------------------------------------------------------------

/.changes/v0.2.16.md:

--------------------------------------------------------------------------------

```markdown

## v0.2.16 - 2025-07-18

### Under the Hood

* Adding the ability to exclude certain tools when registering

* OpenAI responses example

```

--------------------------------------------------------------------------------

/ui/tsconfig.json:

--------------------------------------------------------------------------------

```json

{

"files": [],

"references": [

{

"path": "./tsconfig.app.json"

},

{

"path": "./tsconfig.node.json"

}

]

}

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_codegen/args/model_name.md:

--------------------------------------------------------------------------------

```markdown

The name of the model to generate import CTEs for, search for the model name provided with and without extension provided by users(required)

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_codegen/args/model_names.md:

--------------------------------------------------------------------------------

```markdown

List of model names to generate YAML documentation for, search for the model names provided with and without extension provided by users(required)

```

--------------------------------------------------------------------------------

/.vscode/settings.json:

--------------------------------------------------------------------------------

```json

{

"python.testing.pytestArgs": [

"tests",

"evals"

],

"python.testing.pytestEnabled": true,

"python.testing.unittestEnabled": false

}

```

--------------------------------------------------------------------------------

/.changes/v0.2.14.md:

--------------------------------------------------------------------------------

```markdown

## v0.2.14 - 2025-07-14

### Enhancement or New Feature

* Make dbt CLI command timeout configurable

### Bug Fix

* Allow passing entities in the group by

```

--------------------------------------------------------------------------------

/src/dbt_mcp/errors/base.py:

--------------------------------------------------------------------------------

```python

"""Base error class for all dbt-mcp tool calls."""

class ToolCallError(Exception):

"""Base exception for all tool call errors in dbt-mcp."""

pass

```

--------------------------------------------------------------------------------

/.changes/v0.6.0.md:

--------------------------------------------------------------------------------

```markdown

## v0.6.0 - 2025-08-22

### Under the Hood

* Update docs with new tools

* Using streamable http for SQL tools

* Correctly handle admin API host containing protocol prefix

```

--------------------------------------------------------------------------------

/.changes/v0.9.0.md:

--------------------------------------------------------------------------------

```markdown

## v0.9.0 - 2025-09-30

### Enhancement or New Feature

* Adding the dbt codegen toolset.

### Under the Hood

* Updates README with new tools

* Fix .user.yml error with Fusion

```

--------------------------------------------------------------------------------

/.changes/v0.8.3.md:

--------------------------------------------------------------------------------

```markdown

## v0.8.3 - 2025-09-24

### Under the Hood

* Rename SemanticLayerConfig.service_token to SemanticLayerConfig.token

### Bug Fix

* Fix Error handling as per native MCP error spec

```

--------------------------------------------------------------------------------

/.changes/v0.6.1.md:

--------------------------------------------------------------------------------

```markdown

## v0.6.1 - 2025-08-28

### Enhancement or New Feature

* Add support for --vars flag

* Allow headers in AdminApiConfig

### Under the Hood

* Remove redundant and outdated documentation

```

--------------------------------------------------------------------------------

/examples/openai_responses/pyproject.toml:

--------------------------------------------------------------------------------

```toml

[project]

name = "openai-responses"

version = "0.1.0"

description = "Add your description here"

readme = "README.md"

requires-python = ">=3.12"

dependencies = [

"openai>=1.95.1",

]

```

--------------------------------------------------------------------------------

/.changes/v0.10.3.md:

--------------------------------------------------------------------------------

```markdown

## v0.10.3 - 2025-10-08

### Under the Hood

* Improved retry logic and post project selection screen

* Avoid double counting in usage tracking proxied tools

* Categorizing ToolCallErrors

```

--------------------------------------------------------------------------------

/examples/pydantic_ai_agent/pyproject.toml:

--------------------------------------------------------------------------------

```toml

[project]

name = "pydantic-ai-agent"

version = "0.1.0"

description = "Add your description here"

readme = "README.md"

requires-python = ">=3.10"

dependencies = [

"pydantic-ai>=0.8.1",

]

```

--------------------------------------------------------------------------------

/src/dbt_mcp/errors/semantic_layer.py:

--------------------------------------------------------------------------------

```python

"""Semantic Layer tool errors."""

from dbt_mcp.errors.base import ToolCallError

class SemanticLayerToolCallError(ToolCallError):

"""Base exception for Semantic Layer tool errors."""

pass

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_cli/args/limit.md:

--------------------------------------------------------------------------------

```markdown

Limit the number of rows that the data platform will return. Use this in place of a `LIMIT` clause in the SQL query. If no limit is passed, use the default of 5 to prevent returning a very large result set.

```

--------------------------------------------------------------------------------

/.changes/v0.2.6.md:

--------------------------------------------------------------------------------

```markdown

## v0.2.6 - 2025-06-16

### Under the Hood

* Instructing the LLM to more likely use a selector

* Instruct LLM to add limit as an argument instead of SQL

* Fix use of limit in dbt show

* Indicate type checking

```

--------------------------------------------------------------------------------

/.changes/v0.4.2.md:

--------------------------------------------------------------------------------

```markdown

## v0.4.2 - 2025-08-13

### Enhancement or New Feature

* Add default --limit to show tool

### Under the Hood

* Define toolsets

### Bug Fix

* Fix the prompt to ensure grain is passed even for non-time group by"

```

--------------------------------------------------------------------------------

/.changes/v0.2.8.md:

--------------------------------------------------------------------------------

```markdown

## v0.2.8 - 2025-07-02

### Enhancement or New Feature

* Raise errors if no node is selected (can also be configured)

### Bug Fix

* Fix when people provide `DBT_PROJECT_DIR` as a relative path

* Fix link in README

```

--------------------------------------------------------------------------------

/examples/openai_agent/pyproject.toml:

--------------------------------------------------------------------------------

```toml

[project]

name = "openai-agent"

version = "0.1.0"

description = "A simple example of using this MCP server with OpenAI Agents"

readme = "README.md"

requires-python = ">=3.12"

dependencies = ["openai-agents>=0.1.0"]

```

--------------------------------------------------------------------------------

/.changes/v0.2.9.md:

--------------------------------------------------------------------------------

```markdown

## v0.2.9 - 2025-07-02

### Enhancement or New Feature

* Decrease amount of data retrieved when listing models

### Under the Hood

* OpenAI conversational analytics example

* README updates

* Move Discover headers to config

```

--------------------------------------------------------------------------------

/examples/google_adk_agent/pyproject.toml:

--------------------------------------------------------------------------------

```toml

[project]

name = "google-adk-dbt-agent"

version = "0.1.0"

description = "Google ADK agent for dbt MCP"

readme = "README.md"

requires-python = ">=3.10"

dependencies = [

"google-adk>=0.4.0",

"google-genai<=1.34.0",

]

```

--------------------------------------------------------------------------------

/.changes/v0.8.2.md:

--------------------------------------------------------------------------------

```markdown

## v0.8.2 - 2025-09-23

### Enhancement or New Feature

* Use `dbt --help` to identify binary type

* Increase dbt CLI timeout default

### Under the Hood

* Implement SemanticLayerClientProvider

### Bug Fix

* Update how we identify CLIs

```

--------------------------------------------------------------------------------

/.changes/v0.2.7.md:

--------------------------------------------------------------------------------

```markdown

## v0.2.7 - 2025-06-30

### Under the Hood

* Timeout dbt list command

* Troubleshooting section in README on clients not finding uvx

* Update Discovery config for simpler usage

### Bug Fix

* Fixing bug when ordering SL query by a metric

```

--------------------------------------------------------------------------------

/.changes/v0.7.0.md:

--------------------------------------------------------------------------------

```markdown

## v0.7.0 - 2025-09-09

### Enhancement or New Feature

* Add tools to retrieve exposure information from Disco API

### Under the Hood

* Expect string sub in oauth JWT

* Using sync endpoints for oauth FastAPI server

* Fix release pipeline

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_cli/compile.md:

--------------------------------------------------------------------------------

```markdown

dbt compile generates executable SQL from source model, test, and analysis files.

The compile command is useful for visually inspecting the compiled output of model files. This is useful for validating complex jinja logic or macro usage.

```

--------------------------------------------------------------------------------

/examples/langgraph_agent/pyproject.toml:

--------------------------------------------------------------------------------

```toml

[project]

name = "langgraph-agent"

version = "0.1.0"

description = "Add your description here"

readme = "README.md"

requires-python = ">=3.12"

dependencies = [

"langchain-mcp-adapters>=0.1.9",

"langchain[anthropic]>=0.3.27",

"langgraph>=0.6.4",

]

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/discovery/get_mart_models.md:

--------------------------------------------------------------------------------

```markdown

Get the name and description of all mart models in the environment. A mart model is part of the presentation layer of the dbt project. It's where cleaned, transformed data is organized for consumption by end-users, like analysts, dashboards, or business tools.

```

--------------------------------------------------------------------------------

/src/dbt_mcp/prompts/dbt_cli/show.md:

--------------------------------------------------------------------------------

```markdown

dbt show executes an arbitrary SQL statement against the database and returns the results. It is useful for debugging and inspecting data in your dbt project. If you are adding a limit be sure to use the `limit` argument and do not add a limit to the SQL query.

```

--------------------------------------------------------------------------------

/.changes/v0.8.4.md:

--------------------------------------------------------------------------------

```markdown

## v0.8.4 - 2025-09-29

### Enhancement or New Feature

* Allow doc files to skip changie requirements

### Under the Hood

* Upgrade @vitejs/plugin-react

* Add ruff lint config to enforce Python 3.9+ coding style

* Opt-out of usage tracking with standard dbt methods

```

--------------------------------------------------------------------------------

/.changes/v0.2.18.md:

--------------------------------------------------------------------------------

```markdown

## v0.2.18 - 2025-07-22

### Enhancement or New Feature

* Move env var parsing to pydantic_settings for better validation

### Under the Hood

* Add integration test for server initialization

### Bug Fix

* Fix SL validation error message when no misspellings are found

```

--------------------------------------------------------------------------------

/.changes/v0.4.0.md:

--------------------------------------------------------------------------------

```markdown

## v0.4.0 - 2025-08-08

### Enhancement or New Feature

* Tool policies

* Added Semantic Layer tool to get compiled sql

### Under the Hood

* Fix JSON formatting in README

* Document dbt Copilot credits relationship

### Bug Fix

* Make model_name of get_model_details optional

```

--------------------------------------------------------------------------------

/src/dbt_mcp/errors/sql.py:

--------------------------------------------------------------------------------

```python

"""SQL tool errors."""

from dbt_mcp.errors.base import ToolCallError

class SQLToolCallError(ToolCallError):

"""Base exception for SQL tool errors."""

pass

class RemoteToolError(SQLToolCallError):

"""Exception raised when a remote SQL tool call fails."""

pass

```

--------------------------------------------------------------------------------

/.changes/v0.2.5.md:

--------------------------------------------------------------------------------

```markdown

## v0.2.5 - 2025-06-06

### Under the Hood