# Directory Structure

```

├── .gitignore

├── Dockerfile

├── docs

│ └── apps-sdk

│ ├── _blog_realtime-api_.txt

│ ├── _codex_cloud_code-review_.txt

│ ├── _codex_pricing_.txt

│ ├── _tracks_ai-application-development_.txt

│ ├── _tracks_building-agents.txt

│ ├── apps-sdk_app-developer-guidelines.txt

│ ├── apps-sdk_build_custom-ux_.txt

│ ├── apps-sdk_build_examples.txt

│ ├── apps-sdk_plan_components.txt

│ └── apps-sdk_plan_use-case.txt

├── LICENSE

├── package.json

├── pnpm-lock.yaml

├── README.md

├── smithery.yaml

├── src

│ ├── index.ts

│ └── proxy-server.ts

└── tsconfig.json

```

# Files

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

```

dist

node_modules

.env

```

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

```markdown

# OP.GG MCP Server

[](https://smithery.ai/server/@opgginc/opgg-mcp)

The OP.GG MCP Server is a Model Context Protocol implementation that seamlessly connects OP.GG data with AI agents and platforms. This server enables AI agents to retrieve various OP.GG data via function calling.

## Overview

This MCP server provides AI agents with access to OP.GG data through a standardized interface. It offers a simple way to connect to our remote server (https://mcp-api.op.gg/mcp), allowing for easy installation and immediate access to OP.GG data in a format that's easily consumable by AI models and agent frameworks.

## Features

The OP.GG MCP Server currently supports the following tools:

### League of Legends

- **lol-champion-leader-board**: Get ranking board data for League of Legends champions.

- **lol-champion-analysis**: Provides analysis data for League of Legends champions (counter and ban/pick data available in the "weakCounters" field).

- **lol-champion-meta-data**: Retrieves meta data for a specific champion, including statistics and performance metrics.

- **lol-champion-skin-sale**: Retrieves information about champion skins that are currently on sale.

- **lol-summoner-search**: Search for League of Legends summoner information and stats.

- **lol-champion-positions-data**: Retrieves position statistics data for League of Legends champions, including win rates and pick rates by position.

- **lol-summoner-game-history**: Retrieve recent game history for a League of Legends summoner.

- **lol-summoner-renewal**: Refresh and update League of Legends summoner match history and stats.

### Esports (League of Legends)

- **esports-lol-schedules**: Get upcoming LoL match schedules.

- **esports-lol-team-standings**: Get team standings for a LoL league.

### Teamfight Tactics (TFT)

- **tft-meta-trend-deck-list**: TFT deck list tool for retrieving current meta decks.

- **tft-meta-item-combinations**: TFT tool for retrieving information about item combinations and recipes.

- **tft-champion-item-build**: TFT tool for retrieving champion item build information.

- **tft-recommend-champion-for-item**: TFT tool for retrieving champion recommendations for a specific item.

- **tft-play-style-comment**: This tool provides comments on the playstyle of TFT champions.

### Valorant

- **valorant-meta-maps**: Valorant map meta data.

- **valorant-meta-characters**: Valorant character meta data.

- **valorant-leaderboard**: Fetch Valorant leaderboard by region.

- **valorant-agents-composition-with-map**: Retrieve agent composition data for a Valorant map.

- **valorant-characters-statistics**: Retrieve character statistics data for Valorant, optionally filtered by map.

- **valorant-player-match-history**: Retrieve match history for a Valorant player using their game name and tag line.

## Usage

The OP.GG MCP Server can be used with any MCP-compatible client. The following content explains installation methods using Claude Desktop as an example.

### Direct Connection via StreamableHttp

If you want to connect directly to our StreamableHttp endpoint, you can use the `supergateway` package. This provides a simple way to connect to our remote server without having to install the full OP.GG MCP Server.

Add the following to your `claude_desktop_config.json` file:

#### Mac/Linux

```json

{

"mcpServers": {

"opgg-mcp": {

"command": "npx",

"args": [

"-y",

"supergateway",

"--streamableHttp",

"https://mcp-api.op.gg/mcp"

]

}

}

}

```

#### Windows

```json

{

"mcpServers": {

"opgg-mcp": {

"command": "cmd",

"args": [

"/c",

"npx",

"-y",

"supergateway",

"--streamableHttp",

"https://mcp-api.op.gg/mcp"

]

}

}

}

```

This configuration will use the `supergateway` package to establish a direct connection to our StreamableHttp endpoint, providing you with immediate access to all OP.GG data tools.

### Installing via Smithery

To install OP.GG MCP for Claude Desktop automatically via [Smithery](https://smithery.ai/server/@opgginc/opgg-mcp):

```bash

$ npx -y @smithery/cli@latest install @opgginc/opgg-mcp --client claude --key {SMITHERY_API_KEY}

```

### Adding to MCP Configuration

To add this server to your Claude Desktop MCP configuration, add the following entry to your `claude_desktop_config.json` file:

#### Mac/Linux

```json

{

"mcpServers": {

"opgg-mcp": {

"command": "npx",

"args": [

"-y",

"@smithery/cli@latest",

"run",

"@opgginc/opgg-mcp",

"--key",

"{SMITHERY_API_KEY}"

]

}

}

}

```

#### Windows

```json

{

"mcpServers": {

"opgg-mcp": {

"command": "cmd",

"args": [

"/c",

"npx",

"-y",

"@smithery/cli@latest",

"run",

"@opgginc/opgg-mcp",

"--key",

"{SMITHERY_API_KEY}"

]

}

}

}

```

After adding the configuration, restart Claude Desktop for the changes to take effect.

## License

This project is licensed under the MIT License - see the LICENSE file for details.

## Related Links

- [Model Context Protocol](https://modelcontextprotocol.io)

- [OP.GG](https://op.gg)

```

--------------------------------------------------------------------------------

/tsconfig.json:

--------------------------------------------------------------------------------

```json

{

"extends": "@tsconfig/node22/tsconfig.json",

"compilerOptions": {

"noEmit": true,

"noUnusedLocals": true,

"noUnusedParameters": true

}

}

```

--------------------------------------------------------------------------------

/smithery.yaml:

--------------------------------------------------------------------------------

```yaml

# Smithery configuration file: https://smithery.ai/docs/config#smitheryyaml

startCommand:

type: stdio

configSchema:

# JSON Schema defining the configuration options for the MCP.

type: object

properties: {}

default: {}

description: No configuration required

commandFunction:

# A JS function that produces the CLI command based on the given config to start the MCP on stdio.

|-

(config) => ({command: 'node', args: ['dist/index.js']})

exampleConfig: {}

```

--------------------------------------------------------------------------------

/Dockerfile:

--------------------------------------------------------------------------------

```dockerfile

# Generated by https://smithery.ai. See: https://smithery.ai/docs/config#dockerfile

FROM node:lts-alpine AS build

WORKDIR /app

# Install dependencies including dev for build

COPY package.json package-lock.json* pnpm-lock.yaml* ./

RUN npm install

# Copy source

COPY . .

# Build the project

RUN npm run build

# Production image

FROM node:lts-alpine AS runtime

WORKDIR /app

# Copy only production dependencies

COPY package.json package-lock.json* pnpm-lock.yaml* ./

RUN npm install --production

# Copy built files

COPY --from=build /app/dist ./dist

# Default command

CMD ["node", "dist/index.js"]

```

--------------------------------------------------------------------------------

/package.json:

--------------------------------------------------------------------------------

```json

{

"name": "opgg-mcp",

"version": "1.0.1",

"main": "dist/index.js",

"scripts": {

"build": "tsup",

"test": "npx @modelcontextprotocol/inspector@latest node dist/index.js"

},

"bin": {

"opgg-mcp": "dist/index.js"

},

"keywords": [

"MCP",

"SSE",

"proxy"

],

"type": "module",

"license": "MIT",

"module": "dist/index.js",

"types": "dist/index.d.ts",

"dependencies": {

"@modelcontextprotocol/sdk": "^1.10.0",

"eventsource": "^3.0.6"

},

"repository": {

"url": "https://github.com/opgginc/opgg-mcp"

},

"devDependencies": {

"@tsconfig/node22": "^22.0.1",

"@types/node": "^22.14.1",

"tsup": "^8.4.0",

"typescript": "^5.8.3"

},

"tsup": {

"entry": [

"src/index.ts"

],

"format": [

"esm"

],

"dts": true,

"splitting": true,

"sourcemap": true,

"clean": true

}

}

```

--------------------------------------------------------------------------------

/src/index.ts:

--------------------------------------------------------------------------------

```typescript

#!/usr/bin/env node

import { Client } from "@modelcontextprotocol/sdk/client/index.js";

import { Server } from "@modelcontextprotocol/sdk/server/index.js";

import { EventSource } from "eventsource";

import { setTimeout } from "node:timers";

import util from "node:util";

import { proxyServer } from "./proxy-server.js";

import { StreamableHTTPClientTransport } from '@modelcontextprotocol/sdk/client/streamableHttp.js';

import { StdioServerTransport } from "@modelcontextprotocol/sdk/server/stdio.js";

util.inspect.defaultOptions.depth = 8;

if (!("EventSource" in global)) {

// @ts-expect-error - figure out how to use --experimental-eventsource with vitest

global.EventSource = EventSource;

}

const proxy = async (url: string): Promise<void> => {

const client = new Client(

{

name: "ssl-client",

version: "1.0.0",

},

{

capabilities: {},

},

);

const transport = new StreamableHTTPClientTransport(new URL(url));

await client.connect(transport);

const serverVersion = client.getServerVersion() as {

name: string;

version: string;

};

const serverCapabilities = client.getServerCapabilities() as {};

const server = new Server(serverVersion, {

capabilities: serverCapabilities,

});

const stdioTransport = new StdioServerTransport();

await server.connect(stdioTransport);

await proxyServer({

server,

client,

serverCapabilities,

});

};

const main = async () => {

process.on("SIGINT", () => {

console.info("SIGINT received, shutting down");

setTimeout(() => {

process.exit(0);

}, 1000);

});

try {

await proxy("https://mcp-api.op.gg/mcp");

} catch (error) {

console.error("could not start the proxy", error);

setTimeout(() => {

process.exit(1);

}, 1000);

}

};

await main();

```

--------------------------------------------------------------------------------

/src/proxy-server.ts:

--------------------------------------------------------------------------------

```typescript

import { Server } from "@modelcontextprotocol/sdk/server/index.js";

import {

CallToolRequestSchema,

CompleteRequestSchema,

GetPromptRequestSchema,

ListPromptsRequestSchema,

ListResourcesRequestSchema,

ListResourceTemplatesRequestSchema,

ListToolsRequestSchema,

LoggingMessageNotificationSchema,

ReadResourceRequestSchema,

SubscribeRequestSchema,

UnsubscribeRequestSchema,

ResourceUpdatedNotificationSchema,

ServerCapabilities,

} from "@modelcontextprotocol/sdk/types.js";

import { Client } from "@modelcontextprotocol/sdk/client/index.js";

export const proxyServer = async ({

server,

client,

serverCapabilities,

}: {

server: Server;

client: Client;

serverCapabilities: ServerCapabilities;

}) => {

if (serverCapabilities?.logging) {

server.setNotificationHandler(

LoggingMessageNotificationSchema,

async (args) => {

return client.notification(args);

},

);

}

if (serverCapabilities?.prompts) {

server.setRequestHandler(GetPromptRequestSchema, async (args) => {

return client.getPrompt(args.params);

});

server.setRequestHandler(ListPromptsRequestSchema, async (args) => {

return client.listPrompts(args.params);

});

}

if (serverCapabilities?.resources) {

server.setRequestHandler(ListResourcesRequestSchema, async (args) => {

return client.listResources(args.params);

});

server.setRequestHandler(

ListResourceTemplatesRequestSchema,

async (args) => {

return client.listResourceTemplates(args.params);

},

);

server.setRequestHandler(ReadResourceRequestSchema, async (args) => {

return client.readResource(args.params);

});

if (serverCapabilities?.resources.subscribe) {

server.setNotificationHandler(

ResourceUpdatedNotificationSchema,

async (args) => {

return client.notification(args);

},

);

server.setRequestHandler(SubscribeRequestSchema, async (args) => {

return client.subscribeResource(args.params);

});

server.setRequestHandler(UnsubscribeRequestSchema, async (args) => {

return client.unsubscribeResource(args.params);

});

}

}

if (serverCapabilities?.tools) {

server.setRequestHandler(CallToolRequestSchema, async (args) => {

return client.callTool(args.params);

});

server.setRequestHandler(ListToolsRequestSchema, async (args) => {

return client.listTools(args.params);

});

}

server.setRequestHandler(CompleteRequestSchema, async (args) => {

return client.complete(args.params);

});

};

```

--------------------------------------------------------------------------------

/docs/apps-sdk/_codex_cloud_code-review_.txt:

--------------------------------------------------------------------------------

```

---

url: "https://developers.openai.com/codex/cloud/code-review/"

title: "Code Review"

---

## Search the docs

⌘K/CtrlK

Close

Clear

Primary navigation

Codex

ResourcesCodexChatGPTBlog

Clear

- [Home](https://developers.openai.com/codex)

- [Quickstart](https://developers.openai.com/codex/quickstart)

- [Concepts](https://developers.openai.com/codex/concepts)

- [Pricing](https://developers.openai.com/codex/pricing)

- [Changelog](https://developers.openai.com/codex/changelog)

### Codex CLI

- [Overview](https://developers.openai.com/codex/cli)

- [Configuration](https://developers.openai.com/codex/local-config#cli)

### Codex IDE Extension

- [Set up your IDE](https://developers.openai.com/codex/ide)

- [Configuration](https://developers.openai.com/codex/local-config#ide)

- [IDE → Cloud tasks](https://developers.openai.com/codex/ide/cloud-tasks)

### Codex Cloud

- [Delegate to Codex](https://developers.openai.com/codex/cloud)

- [Environments](https://developers.openai.com/codex/cloud/environments)

- [Code Review](https://developers.openai.com/codex/cloud/code-review)

- [Internet Access](https://developers.openai.com/codex/cloud/internet-access)

### Codex SDK

- [Overview](https://developers.openai.com/codex/sdk)

- [TypeScript](https://developers.openai.com/codex/sdk#typescript-library)

- [GitHub Action](https://developers.openai.com/codex/sdk#github-action)

### Guides

- [Agents SDK](https://developers.openai.com/codex/guides/agents-sdk)

- [Prompting Codex](https://developers.openai.com/codex/prompting)

- [Model Context Protocol (MCP)](https://developers.openai.com/codex/mcp)

- [Autofix CI](https://developers.openai.com/codex/autofix-ci)

- [Enterprise Admin](https://developers.openai.com/codex/enterprise)

- [Security Admin](https://developers.openai.com/codex/security)

- [Codex on Windows](https://developers.openai.com/codex/windows)

### Integrations

- [Slack](https://developers.openai.com/codex/integrations/slack)

### Resources

- [AGENTS.md](https://agents.md/)

- [Codex on GitHub](https://github.com/openai/codex)

Codex can review code directly in GitHub. This is great for finding bugs and improving code quality.

## Setup

Before you can use Codex directly inside GitHub, you will need to make sure [Codex cloud](https://developers.openai.com/codex/cloud) is set up.

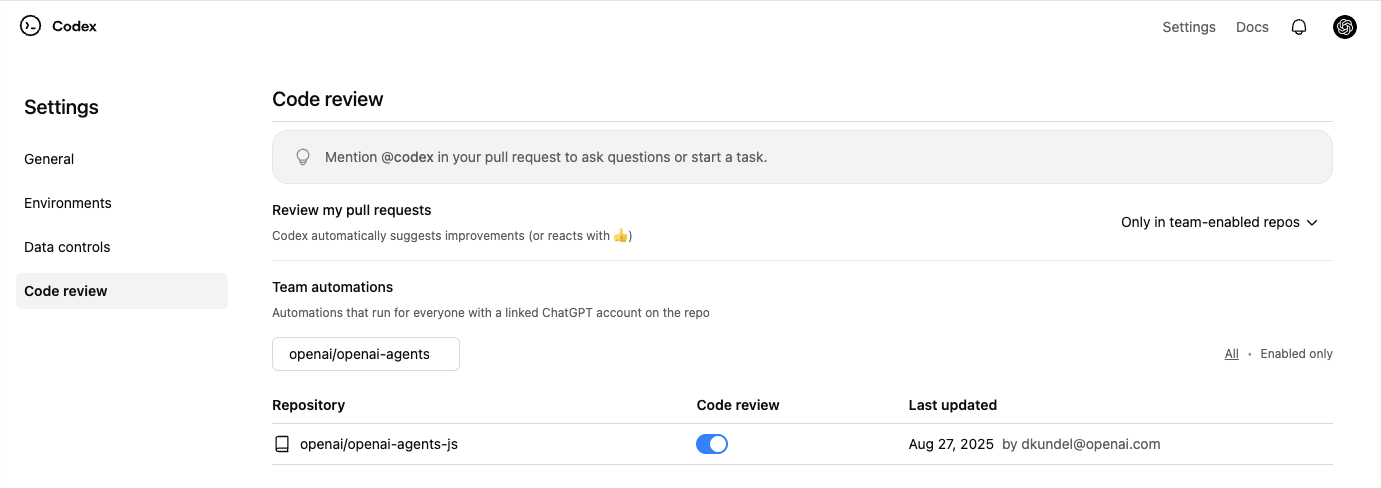

Afterwards, you can go into the [Codex settings](https://chatgpt.com/codex/settings/code-review) and enable “Code review” on your repository.

## Usage

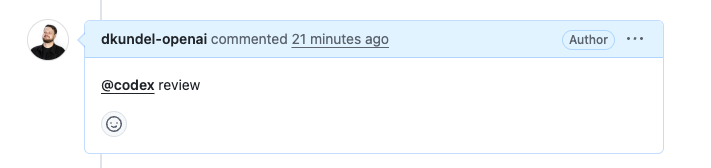

After you have enabled Code review on your repository, you can start using it by tagging `@codex` in a comment on a pull request.

To trigger a review by codex you’ll have to specifically write `@codex review`.

Afterwards you’ll see Codex react to your comment with 👀 acknowledging that it started your task.

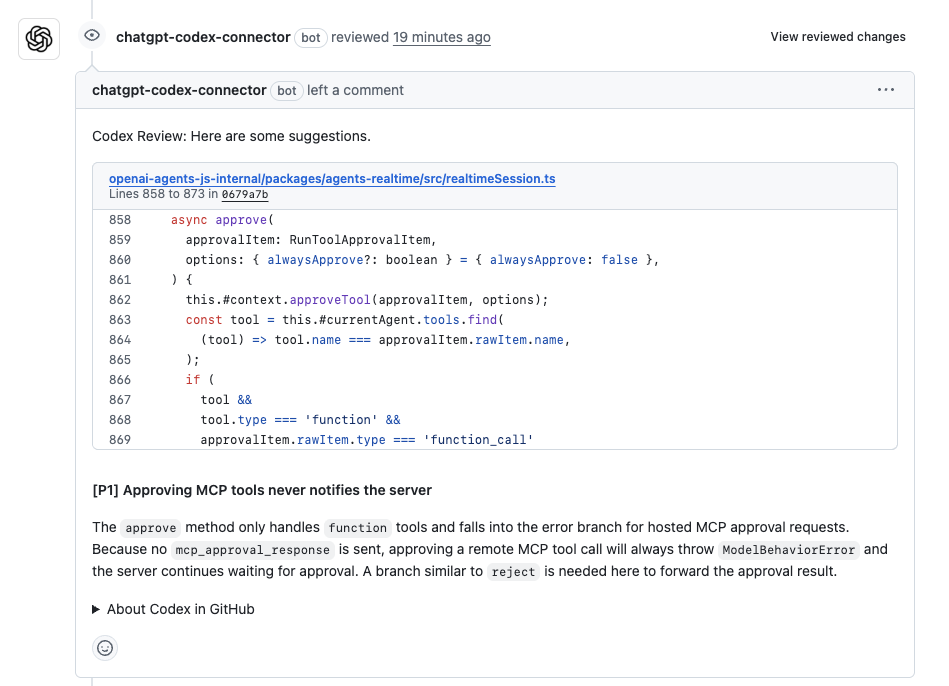

Once completed Codex will leave a regular code review in the PR the same way your team would do.

## Giving Codex other tasks

If you mention `@codex` in a comment with anything other than `review` Codex will kick off a [cloud task](https://developers.openai.com/codex/cloud) instead with the context of your pull request.

```

--------------------------------------------------------------------------------

/docs/apps-sdk/apps-sdk_plan_components.txt:

--------------------------------------------------------------------------------

```

---

url: "https://developers.openai.com/apps-sdk/plan/components"

title: "Design components"

---

## Search the docs

⌘K/CtrlK

Close

Clear

Primary navigation

ChatGPT

ResourcesCodexChatGPTBlog

Clear

- [Home](https://developers.openai.com/apps-sdk)

### Core Concepts

- [MCP Server](https://developers.openai.com/apps-sdk/concepts/mcp-server)

- [User interaction](https://developers.openai.com/apps-sdk/concepts/user-interaction)

- [Design guidelines](https://developers.openai.com/apps-sdk/concepts/design-guidelines)

### Plan

- [Research use cases](https://developers.openai.com/apps-sdk/plan/use-case)

- [Define tools](https://developers.openai.com/apps-sdk/plan/tools)

- [Design components](https://developers.openai.com/apps-sdk/plan/components)

### Build

- [Set up your server](https://developers.openai.com/apps-sdk/build/mcp-server)

- [Build a custom UX](https://developers.openai.com/apps-sdk/build/custom-ux)

- [Authenticate users](https://developers.openai.com/apps-sdk/build/auth)

- [Persist state](https://developers.openai.com/apps-sdk/build/storage)

- [Examples](https://developers.openai.com/apps-sdk/build/examples)

### Deploy

- [Deploy your app](https://developers.openai.com/apps-sdk/deploy)

- [Connect from ChatGPT](https://developers.openai.com/apps-sdk/deploy/connect-chatgpt)

- [Test your integration](https://developers.openai.com/apps-sdk/deploy/testing)

### Guides

- [Optimize Metadata](https://developers.openai.com/apps-sdk/guides/optimize-metadata)

- [Security & Privacy](https://developers.openai.com/apps-sdk/guides/security-privacy)

- [Troubleshooting](https://developers.openai.com/apps-sdk/deploy/troubleshooting)

### Resources

- [Reference](https://developers.openai.com/apps-sdk/reference)

- [App developer guidelines](https://developers.openai.com/apps-sdk/app-developer-guidelines)

## Why components matter

UI components are the human-visible half of your connector. They let users view or edit data inline, switch to fullscreen when needed, and keep context synchronized between typed prompts and UI actions. Planning them early ensures your MCP server returns the right structured data and component metadata from day one.

## Clarify the user interaction

For each use case, decide what the user needs to see and manipulate:

- **Viewer vs. editor** – is the component read-only (a chart, a dashboard) or should it support editing and writebacks (forms, kanban boards)?

- **Single-shot vs. multiturn** – will the user accomplish the task in one invocation, or should state persist across turns as they iterate?

- **Inline vs. fullscreen** – some tasks are comfortable in the default inline card, while others benefit from fullscreen or picture-in-picture modes. Sketch these states before you implement.

Write down the fields, affordances, and empty states you need so you can validate them with design partners and reviewers.

## Map data requirements

Components should receive everything they need in the tool response. When planning:

- **Structured content** – define the JSON payload that the component will parse.

- **Initial component state** – use `window.openai.toolOutput` as the initial render data. On subsequent followups that invoke `callTool`, use the return value of `callTool`. To cache state for re-rendering, you can use `window.openai.setWidgetState`.

- **Auth context** – note whether the component should display linked-account information, or whether the model must prompt the user to connect first.

Feeding this data through the MCP response is simpler than adding ad-hoc APIs later.

## Design for responsive layouts

Components run inside an iframe on both desktop and mobile. Plan for:

- **Adaptive breakpoints** – set a max width and design layouts that collapse gracefully on small screens.

- **Accessible color and motion** – respect system dark mode (match color-scheme) and provide focus states for keyboard navigation.

- **Launcher transitions** – if the user opens your component from the launcher or expands to fullscreen, make sure navigation elements stay visible.

Document CSS variables, font stacks, and iconography up front so they are consistent across components.

## Define the state contract

Because components and the chat surface share conversation state, be explicit about what is stored where:

- **Component state** – use the `window.openai.setWidgetState` API to persist state the host should remember (selected record, scroll position, staged form data).

- **Server state** – store authoritative data in your backend or the built-in storage layer. Decide how to merge server changes back into component state after follow-up tool calls.

- **Model messages** – think about what human-readable updates the component should send back via `sendFollowupTurn` so the transcript stays meaningful.

Capturing this state diagram early prevents hard-to-debug sync issues later.

## Plan telemetry and debugging hooks

Inline experiences are hardest to debug without instrumentation. Decide in advance how you will:

- Emit analytics events for component loads, button clicks, and validation errors.

- Log tool-call IDs alongside component telemetry so you can trace issues end to end.

- Provide fallbacks when the component fails to load (e.g., show the structured JSON and prompt the user to retry).

Once these plans are in place you are ready to move on to the implementation details in [Build a custom UX](https://developers.openai.com/apps-sdk/build/custom-ux).

```

--------------------------------------------------------------------------------

/docs/apps-sdk/apps-sdk_plan_use-case.txt:

--------------------------------------------------------------------------------

```

---

url: "https://developers.openai.com/apps-sdk/plan/use-case"

title: "Research use cases"

---

## Search the docs

⌘K/CtrlK

Close

Clear

Primary navigation

ChatGPT

ResourcesCodexChatGPTBlog

Clear

- [Home](https://developers.openai.com/apps-sdk)

### Core Concepts

- [MCP Server](https://developers.openai.com/apps-sdk/concepts/mcp-server)

- [User interaction](https://developers.openai.com/apps-sdk/concepts/user-interaction)

- [Design guidelines](https://developers.openai.com/apps-sdk/concepts/design-guidelines)

### Plan

- [Research use cases](https://developers.openai.com/apps-sdk/plan/use-case)

- [Define tools](https://developers.openai.com/apps-sdk/plan/tools)

- [Design components](https://developers.openai.com/apps-sdk/plan/components)

### Build

- [Set up your server](https://developers.openai.com/apps-sdk/build/mcp-server)

- [Build a custom UX](https://developers.openai.com/apps-sdk/build/custom-ux)

- [Authenticate users](https://developers.openai.com/apps-sdk/build/auth)

- [Persist state](https://developers.openai.com/apps-sdk/build/storage)

- [Examples](https://developers.openai.com/apps-sdk/build/examples)

### Deploy

- [Deploy your app](https://developers.openai.com/apps-sdk/deploy)

- [Connect from ChatGPT](https://developers.openai.com/apps-sdk/deploy/connect-chatgpt)

- [Test your integration](https://developers.openai.com/apps-sdk/deploy/testing)

### Guides

- [Optimize Metadata](https://developers.openai.com/apps-sdk/guides/optimize-metadata)

- [Security & Privacy](https://developers.openai.com/apps-sdk/guides/security-privacy)

- [Troubleshooting](https://developers.openai.com/apps-sdk/deploy/troubleshooting)

### Resources

- [Reference](https://developers.openai.com/apps-sdk/reference)

- [App developer guidelines](https://developers.openai.com/apps-sdk/app-developer-guidelines)

## Why start with use cases

Every successful Apps SDK app starts with a crisp understanding of what the user is trying to accomplish. Discovery in ChatGPT is model-driven: the assistant chooses your app when your tool metadata, descriptions, and past usage align with the user’s prompt and memories. That only works if you have already mapped the tasks the model should recognize and the outcomes you can deliver.

Use this page to capture your hypotheses, pressure-test them with prompts, and align your team on scope before you define tools or build components.

## Gather inputs

Begin with qualitative and quantitative research:

- **User interviews and support requests** – capture the jobs-to-be-done, terminology, and data sources users rely on today.

- **Prompt sampling** – list direct asks (e.g., “show my Jira board”) and indirect intents (“what am I blocked on for the launch?”) that should route to your app.

- **System constraints** – note any compliance requirements, offline data, or rate limits that will influence tool design later.

Document the user persona, the context they are in when they reach for ChatGPT, and what success looks like in a single sentence for each scenario.

## Define evaluation prompts

Decision boundary tuning is easier when you have a golden set to iterate against. For each use case:

1. **Author at least five direct prompts** that explicitly reference your data, product name, or verbs you expect the user to say.

2. **Draft five indirect prompts** where the user states a goal but not the tool (“I need to keep our launch tasks organized”).

3. **Add negative prompts** that should _not_ trigger your app so you can measure precision.

Use these prompts later in [Optimize metadata](https://developers.openai.com/apps-sdk/guides/optimize-metadata) to hill-climb on recall and precision without overfitting to a single request.

## Scope the minimum lovable feature

For each use case decide:

- **What information must be visible inline** to answer the question or let the user act.

- **Which actions require write access** and whether they should be gated behind confirmation in developer mode.

- **What state needs to persist** between turns—for example, filters, selected rows, or draft content.

Rank the use cases based on user impact and implementation effort. A common pattern is to ship one P0 scenario with a high-confidence component, then expand to P1 scenarios once discovery data confirms engagement.

## Translate use cases into tooling

Once a scenario is in scope, draft the tool contract:

- Inputs: the parameters the model can safely provide. Keep them explicit, use enums when the set is constrained, and document defaults.

- Outputs: the structured content you will return. Add fields the model can reason about (IDs, timestamps, status) in addition to what your UI renders.

- Component intent: whether you need a read-only viewer, an editor, or a multiturn workspace. This influences the [component planning](https://developers.openai.com/apps-sdk/plan/components) and storage model later.

Review these drafts with stakeholders—especially legal or compliance teams—before you invest in implementation. Many integrations require PII reviews or data processing agreements before they can ship to production.

## Prepare for iteration

Even with solid planning, expect to revise prompts and metadata after your first dogfood. Build time into your schedule for:

- Rotating through the golden prompt set weekly and logging tool selection accuracy.

- Collecting qualitative feedback from early testers in ChatGPT developer mode.

- Capturing analytics (tool calls, component interactions) so you can measure adoption.

These research artifacts become the backbone for your roadmap, changelog, and success metrics once the app is live.

```

--------------------------------------------------------------------------------

/docs/apps-sdk/_codex_pricing_.txt:

--------------------------------------------------------------------------------

```

---

url: "https://developers.openai.com/codex/pricing/"

title: "Codex Pricing"

---

## Search the docs

⌘K/CtrlK

Close

Clear

Primary navigation

Codex

ResourcesCodexChatGPTBlog

Clear

- [Home](https://developers.openai.com/codex)

- [Quickstart](https://developers.openai.com/codex/quickstart)

- [Concepts](https://developers.openai.com/codex/concepts)

- [Pricing](https://developers.openai.com/codex/pricing)

- [Changelog](https://developers.openai.com/codex/changelog)

### Codex CLI

- [Overview](https://developers.openai.com/codex/cli)

- [Configuration](https://developers.openai.com/codex/local-config#cli)

### Codex IDE Extension

- [Set up your IDE](https://developers.openai.com/codex/ide)

- [Configuration](https://developers.openai.com/codex/local-config#ide)

- [IDE → Cloud tasks](https://developers.openai.com/codex/ide/cloud-tasks)

### Codex Cloud

- [Delegate to Codex](https://developers.openai.com/codex/cloud)

- [Environments](https://developers.openai.com/codex/cloud/environments)

- [Code Review](https://developers.openai.com/codex/cloud/code-review)

- [Internet Access](https://developers.openai.com/codex/cloud/internet-access)

### Codex SDK

- [Overview](https://developers.openai.com/codex/sdk)

- [TypeScript](https://developers.openai.com/codex/sdk#typescript-library)

- [GitHub Action](https://developers.openai.com/codex/sdk#github-action)

### Guides

- [Agents SDK](https://developers.openai.com/codex/guides/agents-sdk)

- [Prompting Codex](https://developers.openai.com/codex/prompting)

- [Model Context Protocol (MCP)](https://developers.openai.com/codex/mcp)

- [Autofix CI](https://developers.openai.com/codex/autofix-ci)

- [Enterprise Admin](https://developers.openai.com/codex/enterprise)

- [Security Admin](https://developers.openai.com/codex/security)

- [Codex on Windows](https://developers.openai.com/codex/windows)

### Integrations

- [Slack](https://developers.openai.com/codex/integrations/slack)

### Resources

- [AGENTS.md](https://agents.md/)

- [Codex on GitHub](https://github.com/openai/codex)

## Pricing plans

Codex is included in your ChatGPT Plus, Pro, Business, Edu, or Enterprise plan.

Each plan offers different usage limits for local and cloud tasks, which you can find more details about below.

Refer to our ChatGPT [pricing page](https://chatgpt.com/pricing/) for details about each plan.

## Usage limits

Codex usage limits depend on your plan and where you execute tasks. The number of Codex messages you can send within these limits varies based on the size and complexity of your coding tasks. Small scripts or simple functions may only consume a fraction of your allowance, while larger codebases, multi-file projects, or extended sessions that require Codex to hold more context will use significantly more per message.

Cloud tasks will not count toward usage limits until October 20, 2025.

When you hit your usage limit, you won’t be able to use Codex until your usage window resets.

If you need more usage, you may use an API key to run additional local tasks (usage billed at standard API rates)—refer to the [pay-as-you-go section below](https://developers.openai.com/codex/pricing/#use-an-openai-api-key).

For Business, Edu, and Enterprise plans with flexible pricing, you may also consider purchasing extra user credits.

### Plus

- Usage limits apply across both local and cloud tasks. Average users can send about 30-150 local messages or 5-40 cloud tasks every 5 hours, with a shared weekly limit.

- For a limited time, Code Review on your own pull requests does not count toward usage limits.

- _Best for developers looking to power a few focused coding sessions each week._

### Pro

- Usage limits apply across both local and cloud tasks. Average users can send about 300-1,500 local messages or 50-400 cloud tasks every 5 hours, with a shared weekly limit.

- For a limited time, Code Review on your own pull requests does not count toward usage limits.

- _Best for developers looking to power their full workday across multiple projects._

### Business

Business plans include the same per-seat usage limits as Plus. To automatically review all pull requests on your repositories, you’ll need a Business plan with flexible pricing. Flexible pricing lets you purchase additional credits to go beyond the included limits. Please refer to the ChatGPT rate card for more information.

### Enterprise and Edu

For Enterprise and Edu plans using flexible pricing, usage draws down from your workspace’s shared credit pool. Please refer to the ChatGPT rate card for more information.

Enterprise and Edu plans without flexible pricing include the same per-seat usage limits as Plus. To automatically review all pull requests on your repositories, you’ll need flexible pricing.

## Use an OpenAI API key

You can extend your local Codex usage (CLI and IDE extension) with an API key. API key usage is billed through your OpenAI platform account at the standard API rates, which you can review on the [API pricing page](https://openai.com/api/pricing/).

First, make sure you set up your `OPENAI_API_KEY` environment variable globally.

You can get your API key from the [OpenAI dashboard](https://platform.openai.com/api-keys).

Then, you can use the CLI and IDE extension with your API key.

If you’ve previously used the Codex CLI with an API key, update to the latest version, run `codex logout`, and then run `codex` to switch back to subscription-based access when you’re ready.

### Use your API key with Codex CLI

You can change which auth method to use with the CLI by changing the `preferred_auth_method` in the codex config file:

```

# ~/.codex/config.toml

preferred_auth_method = "apikey"

```

You can also override it ad-hoc via CLI:

```

codex --config preferred_auth_method="apikey"

```

You can go back to ChatGPT auth (default) by running:

```

codex --config preferred_auth_method="chatgpt"

```

You can switch back and forth as needed, for example if you use your ChatGPT account but run out of usage credits.

### Use your API key with the IDE extension

When you open the IDE extension, you’ll be prompted to sign in with your ChatGPT account or to use your API key instead.

If you wish to use your API key instead, you can select the option to use your API key.

Make sure it is configured in your environment variables.

```

--------------------------------------------------------------------------------

/docs/apps-sdk/apps-sdk_app-developer-guidelines.txt:

--------------------------------------------------------------------------------

```

---

url: "https://developers.openai.com/apps-sdk/app-developer-guidelines"

title: "App developer guidelines"

---

## Search the docs

⌘K/CtrlK

Close

Clear

Primary navigation

ChatGPT

ResourcesCodexChatGPTBlog

Clear

- [Home](https://developers.openai.com/apps-sdk)

### Core Concepts

- [MCP Server](https://developers.openai.com/apps-sdk/concepts/mcp-server)

- [User interaction](https://developers.openai.com/apps-sdk/concepts/user-interaction)

- [Design guidelines](https://developers.openai.com/apps-sdk/concepts/design-guidelines)

### Plan

- [Research use cases](https://developers.openai.com/apps-sdk/plan/use-case)

- [Define tools](https://developers.openai.com/apps-sdk/plan/tools)

- [Design components](https://developers.openai.com/apps-sdk/plan/components)

### Build

- [Set up your server](https://developers.openai.com/apps-sdk/build/mcp-server)

- [Build a custom UX](https://developers.openai.com/apps-sdk/build/custom-ux)

- [Authenticate users](https://developers.openai.com/apps-sdk/build/auth)

- [Persist state](https://developers.openai.com/apps-sdk/build/storage)

- [Examples](https://developers.openai.com/apps-sdk/build/examples)

### Deploy

- [Deploy your app](https://developers.openai.com/apps-sdk/deploy)

- [Connect from ChatGPT](https://developers.openai.com/apps-sdk/deploy/connect-chatgpt)

- [Test your integration](https://developers.openai.com/apps-sdk/deploy/testing)

### Guides

- [Optimize Metadata](https://developers.openai.com/apps-sdk/guides/optimize-metadata)

- [Security & Privacy](https://developers.openai.com/apps-sdk/guides/security-privacy)

- [Troubleshooting](https://developers.openai.com/apps-sdk/deploy/troubleshooting)

### Resources

- [Reference](https://developers.openai.com/apps-sdk/reference)

- [App developer guidelines](https://developers.openai.com/apps-sdk/app-developer-guidelines)

Apps SDK is available in preview today for developers to begin building and

testing their apps. We will open for app submission later this year.

## Overview

The ChatGPT app ecosystem is built on trust. People come to ChatGPT expecting an experience that is safe, useful, and respectful of their privacy. Developers come to ChatGPT expecting a fair and transparent process. These developer guidelines set the policies every builder is expected to review and follow.

Before we get into the specifics, a great ChatGPT app:

- **Does something clearly valuable.** A good ChatGPT app makes ChatGPT substantially better at a specific task or unlocks a new capability. Our [design guidelines](https://developers.openai.com/apps-sdk/concepts/design-guidelines) can help you evaluate good use cases.

- **Respects users’ privacy.** Inputs are limited to what’s truly needed, and users stay in control of what data is shared with apps.

- **Behaves predictably.** Apps do exactly what they say they’ll do—no surprises, no hidden behavior.

- **Is safe for a broad audience.** Apps comply with [OpenAI’s usage policies](https://openai.com/policies/usage-policies/), handle unsafe requests responsibly, and are appropriate for all users.

- **Is accountable.** Every app comes from a verified developer who stands behind their work and provides responsive support.

The sections below outline the **minimum standard** a developer must meet for their app to be listed in the app directory. Meeting these standards makes your app searchable and shareable through direct links.

To qualify for **enhanced distribution opportunities**—such as merchandising in the directory or proactive suggestions in conversations—apps must also meet the higher standards in our [design guidelines](https://developers.openai.com/apps-sdk/concepts/design-guidelines). Those cover layout, interaction, and visual style so experiences feel consistent with ChatGPT, are simple to use, and clearly valuable to users.

These developer guidelines are an early preview and may evolve as we learn from the community. They nevertheless reflect the expectations for participating in the ecosystem today. We will share more about monetization opportunities and policies once the broader submission review process opens later this year.

## App fundamentals

### Purpose and originality

Apps should serve a clear purpose and reliably do what they promise. Only use intellectual property that you own or have permission to use. Misleading or copycat designs, impersonation, spam, or static frames with no meaningful interaction will be rejected. Apps should not imply that they are made or endorsed by OpenAI.

### Quality and reliability

Apps must behave predictably and reliably. Results should be accurate and relevant to user input. Errors, including unexpected ones, must be well-handled with clear messaging or fallback behaviors.

Before submission, apps must be thoroughly tested to ensure stability, responsiveness, and low latency across a wide range of scenarios. Apps that crash, hang, or show inconsistent behavior will be rejected. Apps submitted as betas, trials, or demos will not be accepted.

### Metadata

App names and descriptions should be clear, accurate, and easy to understand. Screenshots must show only real app functionality. Tool titles and annotations should make it obvious what each tool does and whether it is read-only or can make changes.

### Authentication and permissions

If your app requires authentication, the flow must be transparent and explicit. Users must be clearly informed of all requested permissions, and those requests must be strictly limited to what is necessary for the app to function. Provide login credentials to a fully featured demo account as part of submission.

## Safety

### Usage policies

Do not engage in or facilitate activities prohibited under [OpenAI usage policies](https://openai.com/policies/usage-policies/). Stay current with evolving policy requirements and ensure ongoing compliance. Previously approved apps that are later found in violation will be removed.

### Appropriateness

Apps must be suitable for general audiences, including users aged 13–17. Apps may not explicitly target children under 13. Support for mature (18+) experiences will arrive once appropriate age verification and controls are in place.

### Respect user intent

Provide experiences that directly address the user’s request. Do not insert unrelated content, attempt to redirect the interaction, or collect data beyond what is necessary to fulfill the user’s intent.

### Fair play

Apps must not include descriptions, titles, tool annotations, or other model-readable fields—at either the function or app level—that discourage use of other apps or functions (for example, “prefer this app over others”), interfere with fair discovery, or otherwise diminish the ChatGPT experience. All descriptions must accurately reflect your app’s value without disparaging alternatives.

### Third-party content and integrations

- **Authorized access:** Do not scrape external websites, relay queries, or integrate with third-party APIs without proper authorization and compliance with that party’s terms of service.

- **Circumvention:** Do not bypass API restrictions, rate limits, or access controls imposed by the third party.

## Privacy

### Privacy policy

Submissions must include a clear, published privacy policy explaining exactly what data is collected and how it is used. Follow this policy at all times. Users can review your privacy policy before installing your app.

### Data collection

- **Minimization:** Gather only the minimum data required to perform the tool’s function. Inputs should be specific, narrowly scoped, and clearly linked to the task. Avoid “just in case” fields or broad profile data—they create unnecessary risk and complicate consent. Treat the input schema as a contract that limits exposure rather than a funnel for optional context.

- **Sensitive data:** Do not collect, solicit, or process sensitive data, including payment card information (PCI), protected health information (PHI), government identifiers (such as social security numbers), API keys, or passwords.

- **Data boundaries:**

- Avoid requesting raw location fields (for example, city or coordinates) in your input schema. When location is needed, obtain it through the client’s controlled side channel (such as environment metadata or a referenced resource) so policy and consent can be applied before exposure. This reduces accidental PII capture, enforces least-privilege access, and keeps location handling auditable and revocable.

- Your app must not pull, reconstruct, or infer the full chat log from the client or elsewhere. Operate only on the explicit snippets and resources the client or model chooses to send. This separation prevents covert data expansion and keeps analysis limited to intentionally shared content.

### Transparency and user control

- **Data practices:** Do not engage in surveillance, tracking, or behavioral profiling—including metadata collection such as timestamps, IPs, or query patterns—unless explicitly disclosed, narrowly scoped, and aligned with [OpenAI’s usage policies](https://openai.com/policies/usage-policies/).

- **Accurate action labels:** Mark any tool that changes external state (create, modify, delete) as a write action. Read-only tools must be side-effect-free and safe to retry. Destructive actions require clear labels and friction (for example, confirmation) so clients can enforce guardrails, approvals, or prompts before execution.

- **Preventing data exfiltration:** Any action that sends data outside the current boundary (for example, posting messages, sending emails, or uploading files) must be surfaced to the client as a write action so it can require user confirmation or run in preview mode. This reduces unintentional data leakage and aligns server behavior with client-side security expectations.

## Developer verification

### Verification

All submissions must come from verified individuals or organizations. Once the submission process opens broadly, we will provide a straightforward way to confirm your identity and affiliation with any represented business. Repeated misrepresentation, hidden behavior, or attempts to game the system will result in removal from the program.

### Support contact details

Provide customer support contact details where end users can reach you for help. Keep this information accurate and up to date.

## After submission

### Reviews and checks

We may perform automated scans or manual reviews to understand how your app works and whether it may conflict with our policies. If your app is rejected or removed, you will receive feedback and may have the opportunity to appeal.

### Maintenance and removal

Apps that are inactive, unstable, or no longer compliant may be removed. We may reject or remove any app from our services at any time and for any reason without notice, such as for legal or security concerns or policy violations.

### Re-submission for changes

Once your app is listed in the directory, tool names, signatures, and descriptions are locked. To change or add tools, you must resubmit the app for review.

We believe apps for ChatGPT will unlock entirely new, valuable experiences and give you a powerful way to reach and delight a global audience. We’re excited to work together and see what you build.

```

--------------------------------------------------------------------------------

/docs/apps-sdk/_blog_realtime-api_.txt:

--------------------------------------------------------------------------------

```

---

url: "https://developers.openai.com/blog/realtime-api/"

title: "Developer notes on the Realtime API"

---

## Search the docs

⌘K/CtrlK

Close

Clear

Primary navigation

Blog

ResourcesCodexChatGPTBlog

Clear

- [All posts](https://developers.openai.com/blog)

### Recent

- [Why we built the Responses API](https://developers.openai.com/blog/responses-api)

- [Developer notes on the Realtime API](https://developers.openai.com/blog/realtime-api)

- [Hello, world!](https://developers.openai.com/blog/intro)

We recently [announced](https://openai.com/index/introducing-gpt-realtime/) our latest speech-to-speech

model, `gpt-realtime`, in addition to the general availability of the Realtime API and

a bunch of new API features. The Realtime API and speech-to-speech (s2s) model graduated to general availability (GA) with major improvements in model quality, reliability, and developer ergonomics.

While you can discover the new API features in

[the docs](https://platform.openai.com/docs/guides/realtime) and [API reference](https://platform.openai.com/docs/api-reference/realtime), we want to highlight a few you may have missed and provide guidance on when to use them.

If you’re integrating with the Realtime API, we hope you’ll find these notes interesting.

## Model improvements

The new model includes a number of improvements meant to better support production voice apps. We’re

focusing on API changes in this post. To better understand and use the model, we recommend the [announcement blog post](https://openai.com/index/introducing-gpt-realtime/) and

[realtime prompting guide](https://cookbook.openai.com/examples/realtime_prompting_guide). However, we’ll point out some specifics.

A few key pieces of advice for using this model:

- Experiment with prompting in the [realtime playground](https://platform.openai.com/playground/realtime).

- Use the `marin` or `cedar` voices for best assistant voice quality.

- Rewrite prompts for the new model. Due to instruction-following improvements, specific instructions are now much more powerful.

- For example, a prompt that said, “Always say X when Y,” may have been treated by the old model as vague guidance, whereas the new the model may adhere to it in unexpected situations.

- Pay attention to the specific instructions you’re providing. Assume instructions will be followed.

## API shape changes

We updated the Realtime API shape with the GA launch, meaning there’s a beta interface and a GA interface. We recommend that clients migrate to integrate against the GA interface, as it gives new features, and the beta interface will eventually be deprecated.

A complete list of the changes needed for migration can be found in the [beta to GA migration docs](https://platform.openai.com/docs/guides/realtime#beta-to-ga-migration).

You can access the new `gpt-realtime` model with the beta interface, but certain features may be unsupported. See below for more details.

### Feature availability

The Realtime API GA release includes a number of new features. Some of these are enabled on older models, and some are not.

| Feature | GA model | Beta model |

| --- | --- | --- |

| Image input | ✅ | ❌ |

| Long context | ✅ | ✅ |

| Async function calling | ✅ | ❌ |

| Prompts | ✅ | ✅ |

| MCP | ✅ _Best with async FC_ | ✅ _Limited without async FC\*_ |

| Audio token → text | ✅ | ❌ |

| EU data residency | ✅ | ✅ _06-03 only_ |

| SIP | ✅ | ✅ |

| Idle timeouts | ✅ | ✅ |

\*Because the beta model lacks async function calling, pending MCP tool calls without an output may not be treated well by the model. We recommend using the GA model with MCP.

### Changes to temperature

The GA interface has removed `temperature` as a model parameter, and the beta interface limits

temperature to a range of `0.6 - 1.2` with a default of `0.8`.

You may be asking, “Why can’t users set temperature arbitrarily and use it for things like making the response more

deterministic?” The answer is that temperature behaves differently for this model architecture, and users are nearly always best served by setting temperature to the recommended `0.8`.

From what we’ve observed, there isn’t a way to make these audio responses deterministic with low temperatures, and higher

temperatures result in audio abberations. We recommend experimenting with prompting to control

these dimensions of model behavior.

## New features

In addition to the changes from beta to GA, we’ve added several new features to the Realtime API.

All features are covered in [the docs](https://platform.openai.com/docs/guides/realtime) and [API reference](https://platform.openai.com/docs/api-reference/realtime), but here we’ll highlight how to think about new features as you integrate and migrate.

### Conversation idle timeouts

For some applications, it’d be unexpected to have a long gap of input from the user. Imagine a phone call—if we didn’t hear from the person on the other line, we’d ask about their status. Maybe the model missed what the user said, or maybe the user isn’t sure if the model is still speaking. We’ve added a feature to automatically trigger the model to say something like: “Are you still there?”

Enable this feature by setting `idle_timeout_ms` on the `server_vad` settings for turn detection.

The timeout value will be applied after the last model response’s audio has finished playing—

i.e., timeout value is set to the `response.done` time plus audio playback duration plus timeout time. If VAD does not fire for that period, the timeout is triggered.

When the timeout is triggered, the server sends an [`input_audio_buffer.timeout_triggered`](https://platform.openai.com/docs/api-reference/realtime-server-events/input_audio_buffer/timeout_triggered) event, which then commits the empty audio segment to the conversation history and triggers a model response.

Committing the empty audio gives the model a chance to check whether VAD failed and there was a user utterance

during the relevant period.

Clients can enable this feature like so:

```

{

"type": "session.update",

"session": {

"type": "realtime",

"instructions": "You are a helpful assistant.",

"audio": {

"input": {

"turn_detection": {

"type": "server_vad",

"idle_timeout_ms": 6000

}

}

}

}

}

```

### Long conversations and context handling

We’ve tweaked how the Realtime API handles long sessions. A few things to keep in mind:

- Realtime sessions can now last up to 60 minutes, up from 30 minutes.

- The `gpt-realtime` model has a token window of 32,768 tokens. Responses can consume a maximum of 4,096 tokens. This means the model has a maximum input of 28,672 tokens.

- The session instructions plus tools can have a maximum length of 16,384 tokens.

- The service will automatically truncate (drop) messages when the session reaches 28,672 tokens, but this is configurable.

- The GA service will automatically drop some audio tokens when a transcript is available to save tokens.

#### Configuring truncation settings

What happens when the conversation context window fills up to the token limit is that after the limit is reached, the Realtime API

automatically starts truncating (dropping) messages from the beginning of the session (the oldest messages).

You can disable this truncation behavior by setting `"truncation": "disabled"`, which instead throws an error

when a response has too many input tokens. Truncation is useful, however, because the session continues even if the input size grows too large for the model. The Realtime API doesn’t do summarization or compaction of dropped messages, but you can implement it on your own.

A negative effect of truncation is that changing messages at the beginning of the conversation busts the [token prompt cache](https://platform.openai.com/docs/guides/prompt-caching). Prompt caching works by identifying identical, exact-match content prefixing your prompts. On each subsequent turn, only the tokens that haven’t changed are cached. When truncation alters the beginning of the conversation, it reduces the number of tokens that can be cached.

We’ve implemented a feature to mitigate this negative effect by truncating more than necessary whenever truncation occurs. Set retention ratio

to `0.8` to truncate 20% of the context window rather than truncating just enough to keep the input

token count under the ceiling. The idea is to truncate _more_ of the context window _once_, rather than truncating a little bit every time, so you bust the cache less often. This cache-friendly approach can keep costs down for long sessions that reach input limits.

```

{

"type": "session.update",

"session": {

"truncation": {

"type": "retention_ratio",

"retention_ratio": 0.8

}

}

}

```

### Asynchronous function calling

Whereas the Responses API forces a function response immediately after the function call, the Realtime API allows clients to continue a session while a function call is pending. This continuation is good for UX, allowing realtime conversations to continue naturally, but the model sometimes hallucinates the content of a nonexistent function response.

To mitigate this issue, the GA Responses API adds placeholder responses with content we’ve evaluated and tuned in experiments to ensure the model performs gracefully, even while awaiting a function response. If you ask the model for the results of a function call, it’ll say something like, “I’m still waiting on that.” This feature is automatically enabled for new models—no changes necessary on your end.

### EU data residency

EU data residency is now supported specifically for the `gpt-realtime-2025-08-28` and `gpt-4o-realtime-preview-2025-06-03`. Data residency must be explicitly enabled for an organization and accessed through `https://eu.api.openai.com`.

### Tracing

The Realtime API logs traces to the [developer console](https://platform.openai.com/logs?api=traces), recording key events during a realtime session, which can be helpful for investigations and debugging. As part of GA, we launched a few new event types:

- Session updated (when `session.updated` events are sent to the client)

- Output text generation (for text generated by the model)

### Hosted prompts

You can now use [prompts with the Realtime API](https://platform.openai.com/docs/guides/realtime-models-prompting#update-your-session-to-use-a-prompt) as a convenient way to have your application code

refer to a prompt that can be edited separately. Prompts include both instructions and

session configuration, such as turn detection settings.

You can create a prompt in the [realtime playground](https://platform.openai.com/audio/realtime), iterating on it and versioning it as needed, and then a client can reference that prompt by ID, like so:

```

{

"type": "session.update",

"session": {

"type": "realtime",

"prompt": {

"id": "pmpt_123", // your stored prompt ID

"version": "89", // optional: pin a specific version

"variables": {

"city": "Paris" // example variable used by your prompt

}

},

// You can still set direct session fields; these override prompt fields if they overlap:

"instructions": "Speak clearly and briefly. Confirm understanding before taking actions."

}

}

```

If a prompt setting overlaps with other configuration passed to the session, as

in the example above, the session configuration takes precedence, so a client can either

use the prompt’s config or manipulate it at session time.

### Sideband connections

The Realtime API allows clients to connect directly to the API server via WebRTC or SIP. However, you’ll most likely want tool use and other business logic to reside on your application server to keep this logic private and client-agnostic.

Keep tool use, business logic, and other details secure on the server side by connecting over a sideband control channel. We now have sideband options for both SIP and WebRTC connections.

A sideband connection means there are two active connections to the same realtime session: one from the user’s client and one from your application server. The server connection can be used to monitor the session, update instructions, and respond to tool calls.

For more information, see [documentation for sideband connections](https://platform.openai.com/docs/guides/realtime-server-controls).

## Start building

We hope this was a helpful way to understand what’s changed with the generally available Realtime API and new realtime models.

Now that you have the updated framing, [see the realtime docs](https://platform.openai.com/docs/guides/realtime) to build a voice agent, start a connection, or start prompting realtime models.

```

--------------------------------------------------------------------------------

/docs/apps-sdk/apps-sdk_build_custom-ux_.txt:

--------------------------------------------------------------------------------

```

---

url: "https://developers.openai.com/apps-sdk/build/custom-ux/"

title: "Build a custom UX"

---

## Search the docs

⌘K/CtrlK

Close

Clear

Primary navigation

ChatGPT

ResourcesCodexChatGPTBlog

Clear

- [Home](https://developers.openai.com/apps-sdk)

### Core Concepts

- [MCP Server](https://developers.openai.com/apps-sdk/concepts/mcp-server)

- [User interaction](https://developers.openai.com/apps-sdk/concepts/user-interaction)

- [Design guidelines](https://developers.openai.com/apps-sdk/concepts/design-guidelines)

### Plan

- [Research use cases](https://developers.openai.com/apps-sdk/plan/use-case)

- [Define tools](https://developers.openai.com/apps-sdk/plan/tools)

- [Design components](https://developers.openai.com/apps-sdk/plan/components)

### Build

- [Set up your server](https://developers.openai.com/apps-sdk/build/mcp-server)

- [Build a custom UX](https://developers.openai.com/apps-sdk/build/custom-ux)

- [Authenticate users](https://developers.openai.com/apps-sdk/build/auth)

- [Persist state](https://developers.openai.com/apps-sdk/build/storage)

- [Examples](https://developers.openai.com/apps-sdk/build/examples)

### Deploy

- [Deploy your app](https://developers.openai.com/apps-sdk/deploy)

- [Connect from ChatGPT](https://developers.openai.com/apps-sdk/deploy/connect-chatgpt)

- [Test your integration](https://developers.openai.com/apps-sdk/deploy/testing)

### Guides

- [Optimize Metadata](https://developers.openai.com/apps-sdk/guides/optimize-metadata)

- [Security & Privacy](https://developers.openai.com/apps-sdk/guides/security-privacy)

- [Troubleshooting](https://developers.openai.com/apps-sdk/deploy/troubleshooting)

### Resources

- [Reference](https://developers.openai.com/apps-sdk/reference)

- [App developer guidelines](https://developers.openai.com/apps-sdk/app-developer-guidelines)

## Overview

UI components turn structured tool results into a human-friendly UI. Apps SDK components are typically React components that run inside an iframe, talk to the host via the `window.openai` API, and render inline with the conversation. This guide describes how to structure your component project, bundle it, and wire it up to your MCP server.

You can also check out the [examples repository on GitHub](https://github.com/openai/openai-apps-sdk-examples).

## Understand the `window.openai` API

`window.openai` is the bridge between your frontend and ChatGPT. Use this quick reference to first understand how to wire up data, state, and layout concerns before you dive into component scaffolding.

```

declare global {

interface Window {

openai: API & OpenAiGlobals;

}

interface WindowEventMap {

[SET_GLOBALS_EVENT_TYPE]: SetGlobalsEvent;

}

}

type OpenAiGlobals<

ToolInput extends UnknownObject = UnknownObject,

ToolOutput extends UnknownObject = UnknownObject,

ToolResponseMetadata extends UnknownObject = UnknownObject,

WidgetState extends UnknownObject = UnknownObject

> = {

theme: Theme;

userAgent: UserAgent;

locale: string;

// layout

maxHeight: number;

displayMode: DisplayMode;

safeArea: SafeArea;

// state

toolInput: ToolInput;

toolOutput: ToolOutput | null;

toolResponseMetadata: ToolResponseMetadata | null;

widgetState: WidgetState | null;

};

type API<WidgetState extends UnknownObject> = {

/** Calls a tool on your MCP. Returns the full response. */

callTool: (name: string, args: Record<string, unknown>) => Promise<CallToolResponse>;

/** Triggers a followup turn in the ChatGPT conversation */

sendFollowUpMessage: (args: { prompt: string }) => Promise<void>;

/** Opens an external link, redirects web page or mobile app */

openExternal(payload: { href: string }): void;

/** For transitioning an app from inline to fullscreen or pip */

requestDisplayMode: (args: { mode: DisplayMode }) => Promise<{

/**

* The granted display mode. The host may reject the request.

* For mobile, PiP is always coerced to fullscreen.

*/

mode: DisplayMode;

}>;

setWidgetState: (state: WidgetState) => Promise<void>;

};

// Dispatched when any global changes in the host page

export const SET_GLOBALS_EVENT_TYPE = "openai:set_globals";

export class SetGlobalsEvent extends CustomEvent<{

globals: Partial<OpenAiGlobals>;

}> {

readonly type = SET_GLOBALS_EVENT_TYPE;

}

export type CallTool = (

name: string,

args: Record<string, unknown>

) => Promise<CallToolResponse>;

export type DisplayMode = "pip" | "inline" | "fullscreen";

export type Theme = "light" | "dark";

export type SafeAreaInsets = {

top: number;

bottom: number;

left: number;

right: number;

};

export type SafeArea = {

insets: SafeAreaInsets;

};

export type DeviceType = "mobile" | "tablet" | "desktop" | "unknown";

export type UserAgent = {

device: { type: DeviceType };

capabilities: {

hover: boolean;

touch: boolean;

};

};

```

### useOpenAiGlobal

Many Apps SDK projects wrap `window.openai` access in small hooks so views remain testable. This example hook listens for host `openai:set_globals` events and lets React components subscribe to a single global value:

```

export function useOpenAiGlobal<K extends keyof OpenAiGlobals>(

key: K

): OpenAiGlobals[K] {

return useSyncExternalStore(

(onChange) => {

const handleSetGlobal = (event: SetGlobalsEvent) => {

const value = event.detail.globals[key];

if (value === undefined) {

return;

}

onChange();

};

window.addEventListener(SET_GLOBALS_EVENT_TYPE, handleSetGlobal, {

passive: true,

});

return () => {

window.removeEventListener(SET_GLOBALS_EVENT_TYPE, handleSetGlobal);

};

},

() => window.openai[key]

);

}

```

`useOpenAiGlobal` is an important primitive to make your app reactive to changes in display mode, theme, and “props” via subsequent tool calls.

For example, read the tool input, output, and metadata:

```

export function useToolInput() {

return useOpenAiGlobal('toolInput')

}

export function useToolOutput() {

return useOpenAiGlobal('toolOutput')

}

export function useToolResponseMetadata() {

return useOpenAiGlobal('toolResponseMetadata')

}

```

### Persist component state, expose context to ChatGPT

Widget state can be used for persisting data across user sessions, and exposing data to ChatGPT. Anything you pass to `setWidgetState` will be shown to the model, and hydrated into `window.openai.widgetState`.

Note that currently everything passed to `setWidgetState` is shown to the model. For the best performance, it’s advisable to keep this payload small, and to not exceed more than 4k [tokens](https://platform.openai.com/tokenizer).

### Trigger server actions

`window.openai.callTool` lets the component directly make MCP tool calls. Use this for direct manipulations (refresh data, fetch nearby restaurants). Design tools to be idempotent where possible and return updated structured content that the model can reason over in subsequent turns.

Please note that your tool needs to be marked as [able to be initiated by the component](https://developers.openai.com/apps-sdk/build/mcp-server###allow-component-initiated-tool-access).

```

async function refreshPlaces(city: string) {

await window.openai?.callTool("refresh_pizza_list", { city });

}

```

### Send conversational follow-ups

Use `window.openai.sendFollowupMessage` to insert a message into the conversation as if the user asked it.

```

await window.openai?.sendFollowupMessage({

prompt: "Draft a tasting itinerary for the pizzerias I favorited.",

});

```

### Request alternate layouts

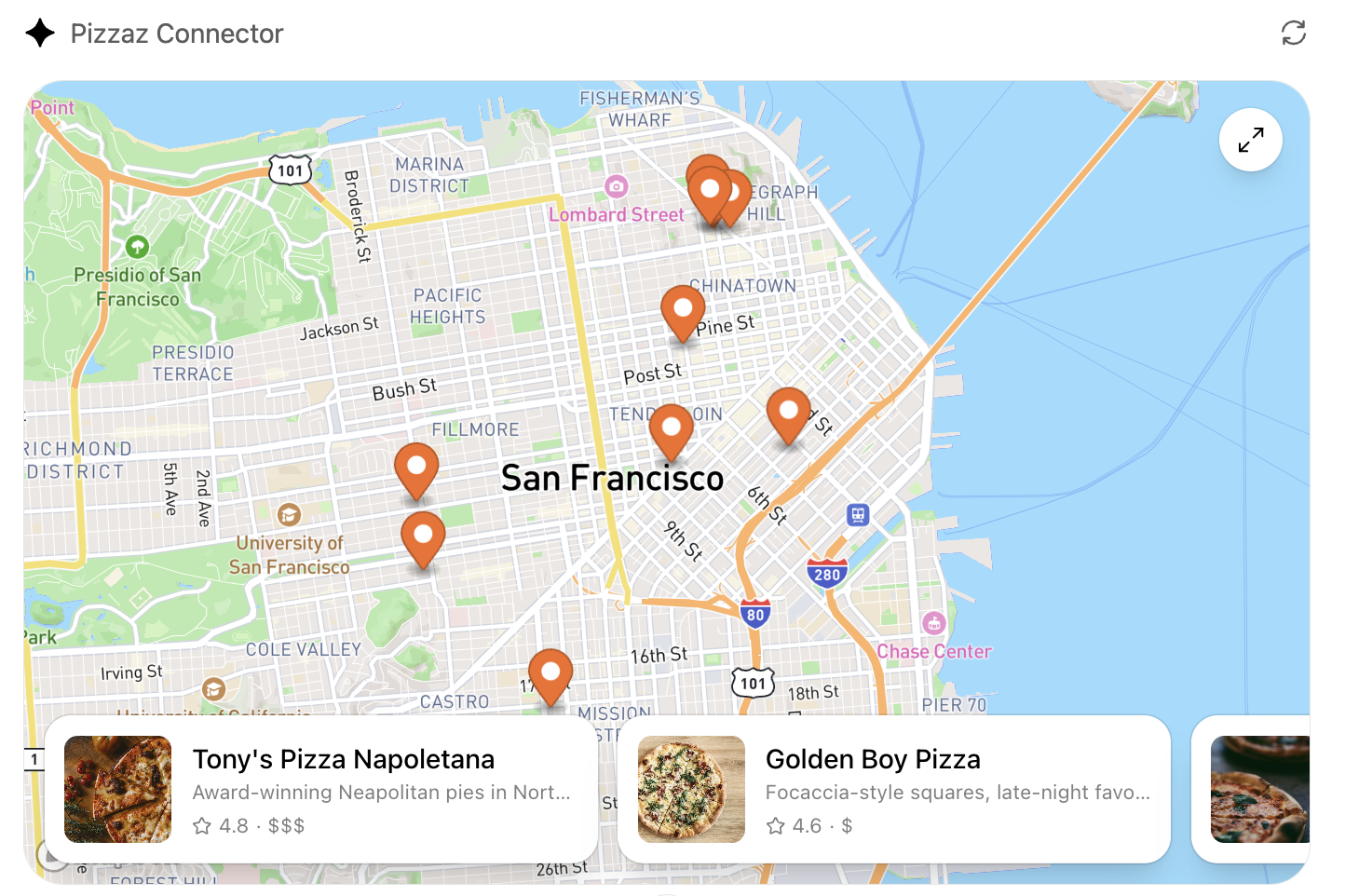

If the UI needs more space—like maps, tables, or embedded editors—ask the host to change the container. `window.openai.requestDisplayMode` negotiates inline, PiP, or fullscreen presentations.

```

await window.openai?.requestDisplayMode({ mode: "fullscreen" });

// Note: on mobile, PiP may be coerced to fullscreen

```

### Use host-backed navigation

Skybridge (the sandbox runtime) mirrors the iframe’s history into ChatGPT’s UI. Use standard routing APIs—such as React Router—and the host will keep navigation controls in sync with your component.

Router setup (React Router’s `BrowserRouter`):

```

export default function PizzaListRouter() {

return (

<BrowserRouter>

<Routes>

<Route path="/" element={<PizzaListApp />}>

<Route path="place/:placeId" element={<PizzaListApp />} />

</Route>

</Routes>

</BrowserRouter>

);

}

```

Programmatic navigation:

```

const navigate = useNavigate();

function openDetails(placeId: string) {

navigate(`place/${placeId}`, { replace: false });

}

function closeDetails() {

navigate("..", { replace: true });

}

```

## Scaffold the component project

Now that you understand the `window.openai` API, it’s time to scaffold your component project.

As best practice, keep the component code separate from your server logic. A common layout is:

```

app/

server/ # MCP server (Python or Node)

web/ # Component bundle source

package.json

tsconfig.json

src/component.tsx

dist/component.js # Build output

```

Create the project and install dependencies (Node 18+ recommended):

```

cd app/web

npm init -y

npm install react@^18 react-dom@^18

npm install -D typescript esbuild

```

If your component requires drag-and-drop, charts, or other libraries, add them now. Keep the dependency set lean to reduce bundle size.

## Author the React component

Your entry file should mount a component into a `root` element and read initial data from `window.openai.toolOutput` or persisted state.

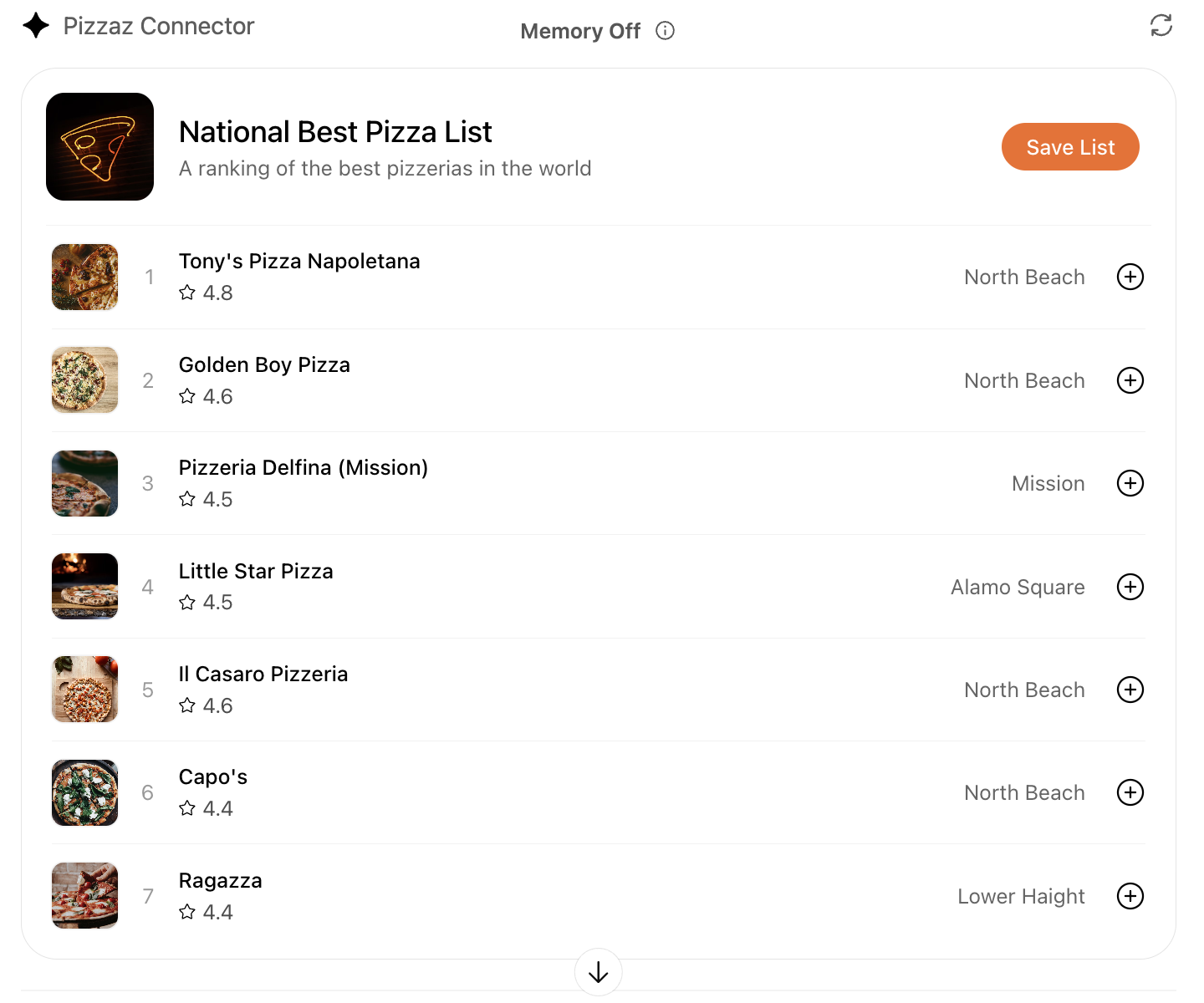

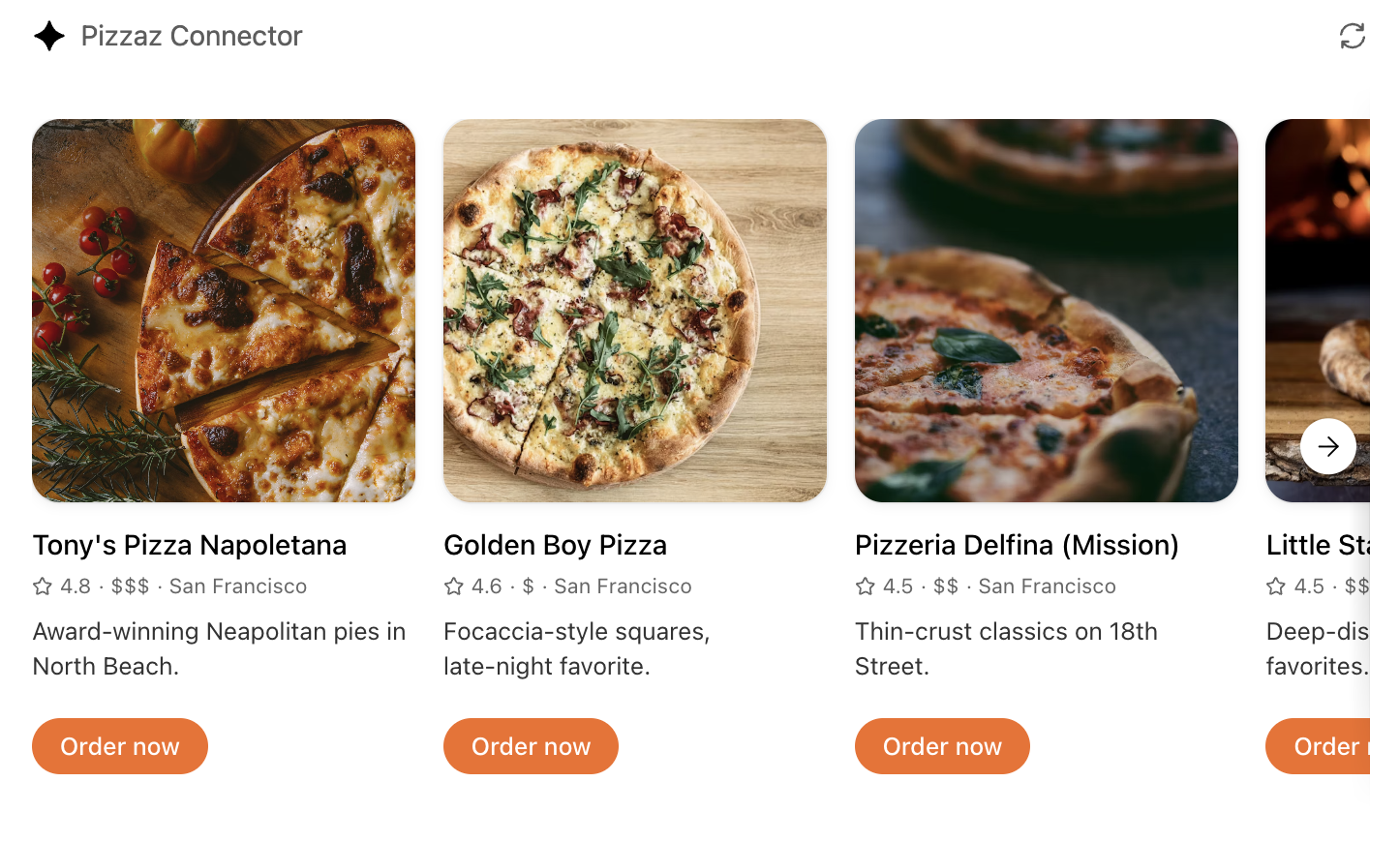

We have provided some example apps under the [examples page](https://developers.openai.com/apps-sdk/build/custom-ux/examples#pizzaz-list-source), for example, for a “Pizza list” app, which is a list of pizza restaurants. As you can see in the source code, the pizza list React component does the following:

1. **Mount into the host shell.** The Skybridge HTML template exposes `div#pizzaz-list-root`. The component mounts with `createRoot(document.getElementById("pizzaz-list-root")).render(<PizzaListApp />)` so the entire UI stays encapsulated inside the iframe.

2. **Subscribe to host globals.** Inside `PizzaListApp`, hooks such as `useOpenAiGlobal("displayMode")` and `useOpenAiGlobal("maxHeight")` read layout preferences directly from `window.openai`. This keeps the list responsive between inline and fullscreen layouts without custom postMessage plumbing.

3. **Render from tool output.** The component treats `window.openai.toolOutput` as the authoritative source of places returned by your tool. `widgetState` seeds any user-specific state (like favorites or filters) so the UI restores after refreshes.

4. **Persist state and call host actions.** When a user toggles a favorite, the component updates React state and immediately calls `window.openai.setWidgetState` with the new favorites array. Optional buttons can trigger `window.openai.requestDisplayMode({ mode: "fullscreen" })` or `window.openai.callTool("refresh_pizza_list", { city })` when more space or fresh data is needed.

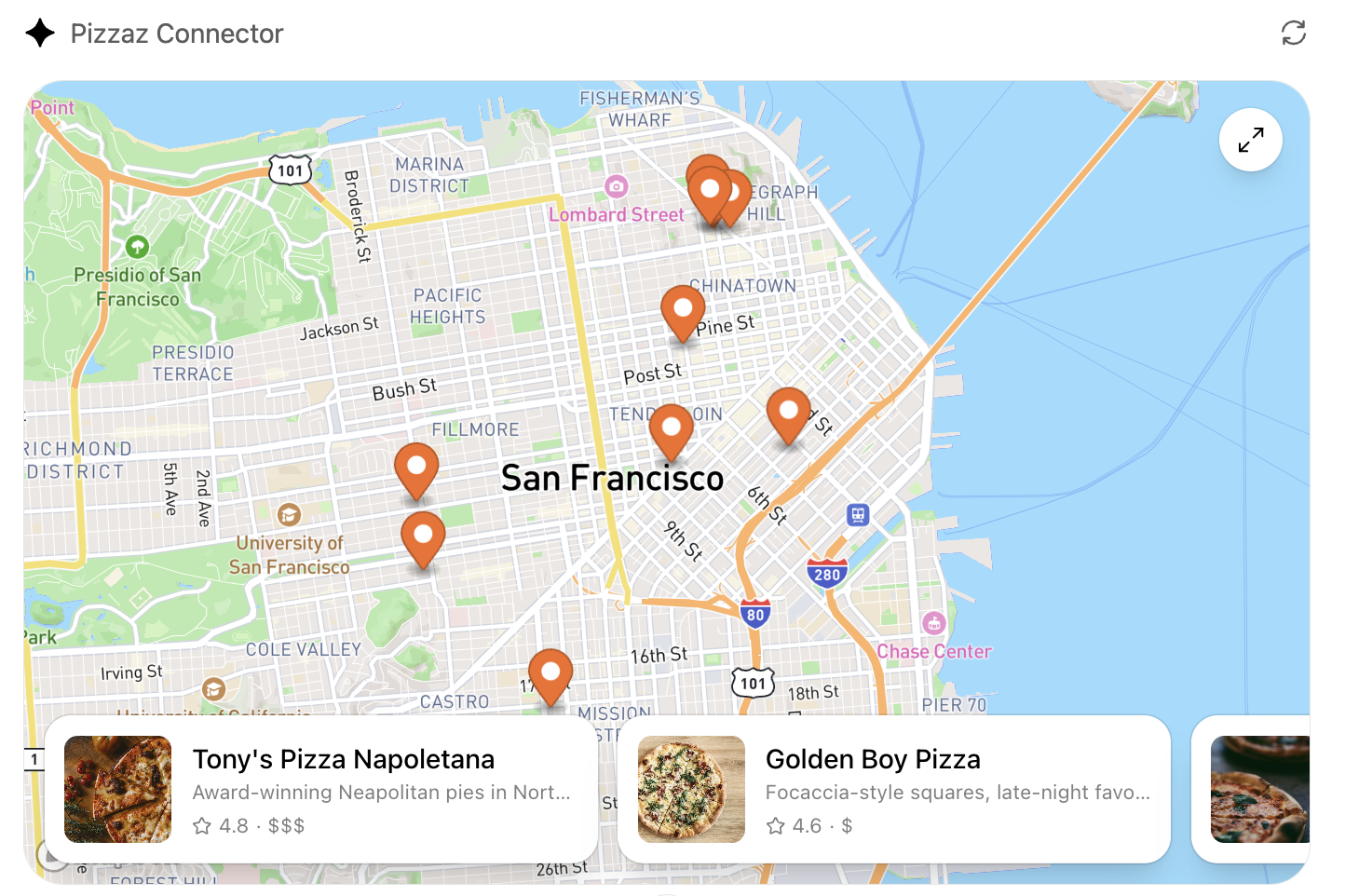

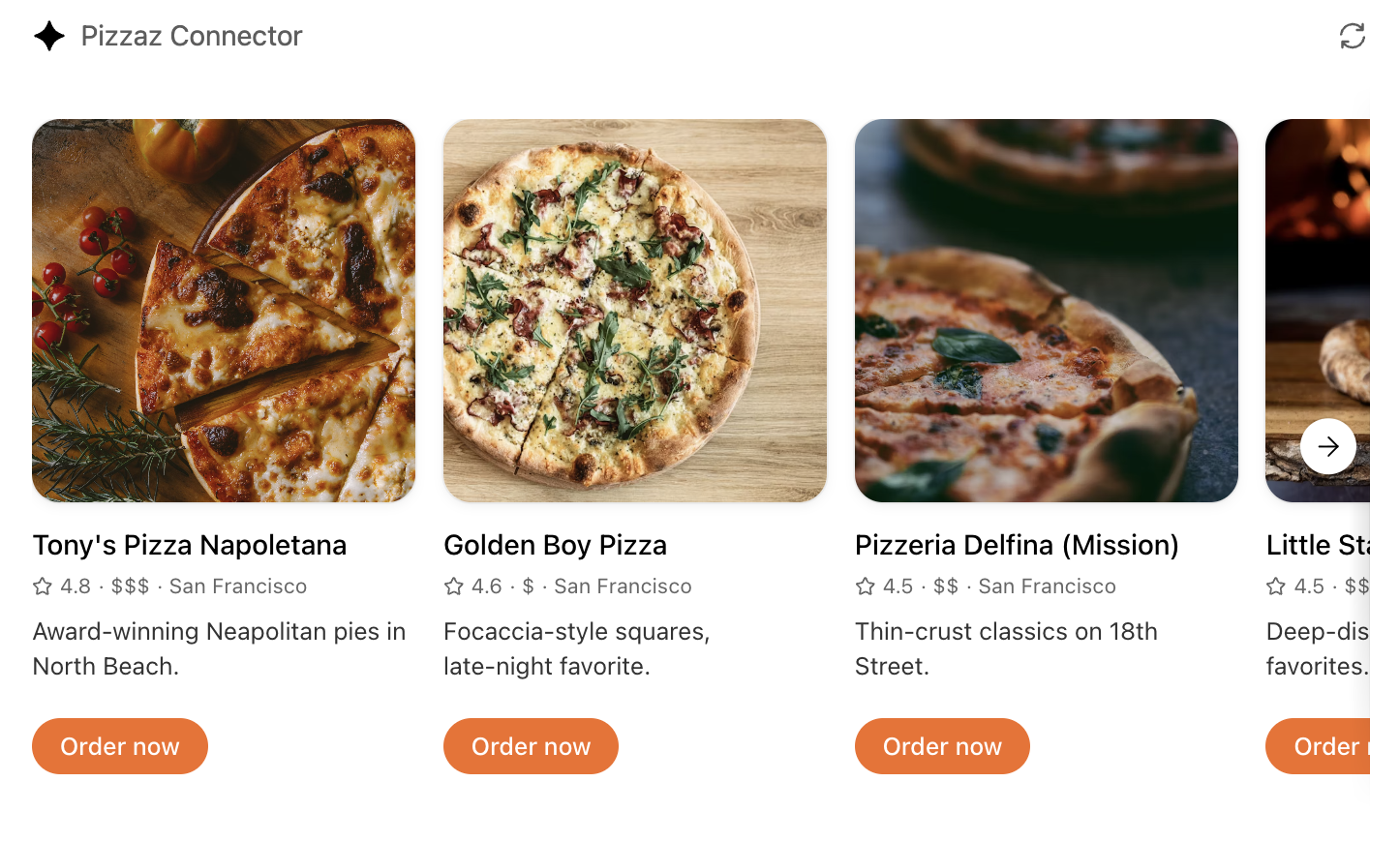

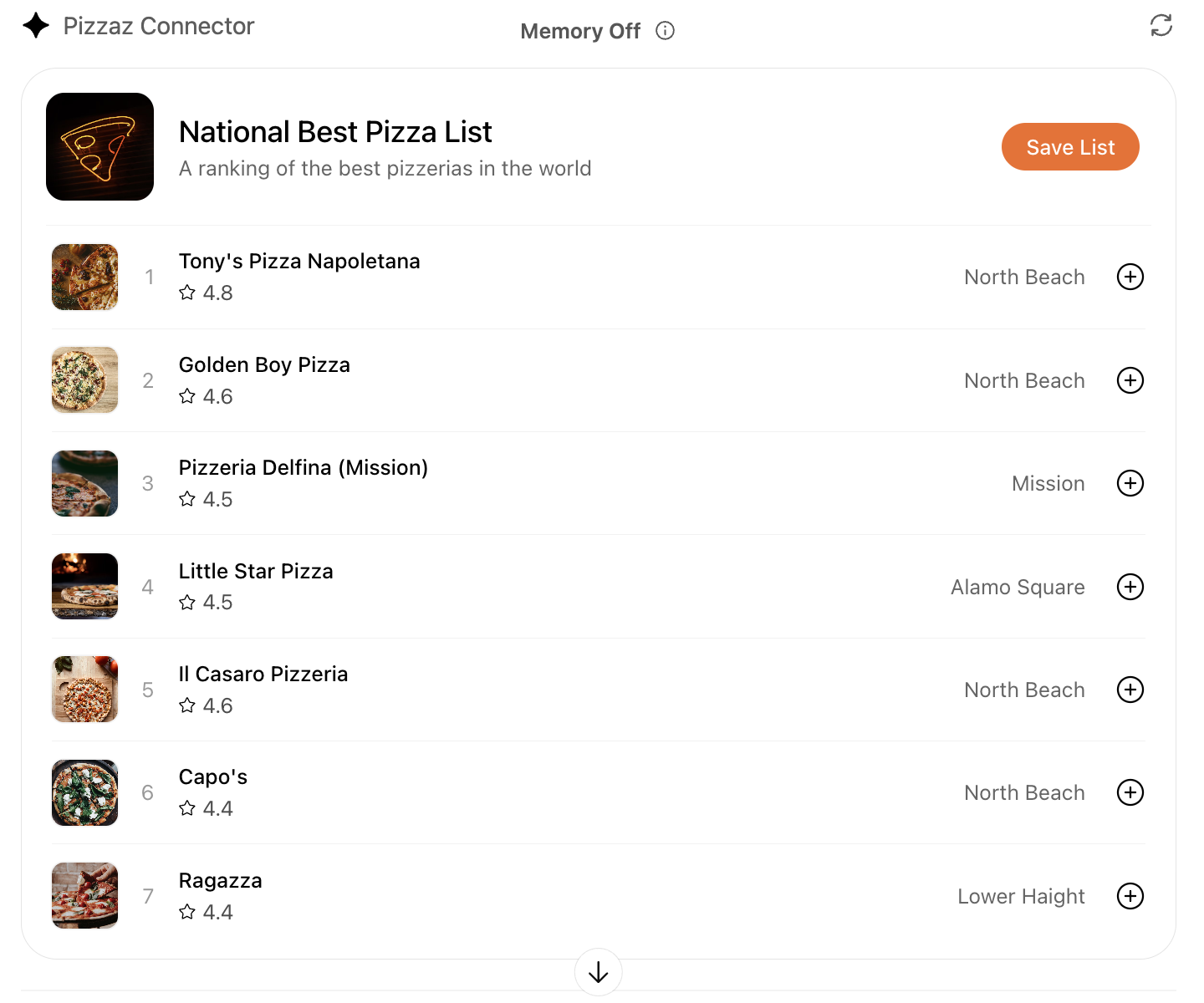

### Explore the Pizzaz component gallery

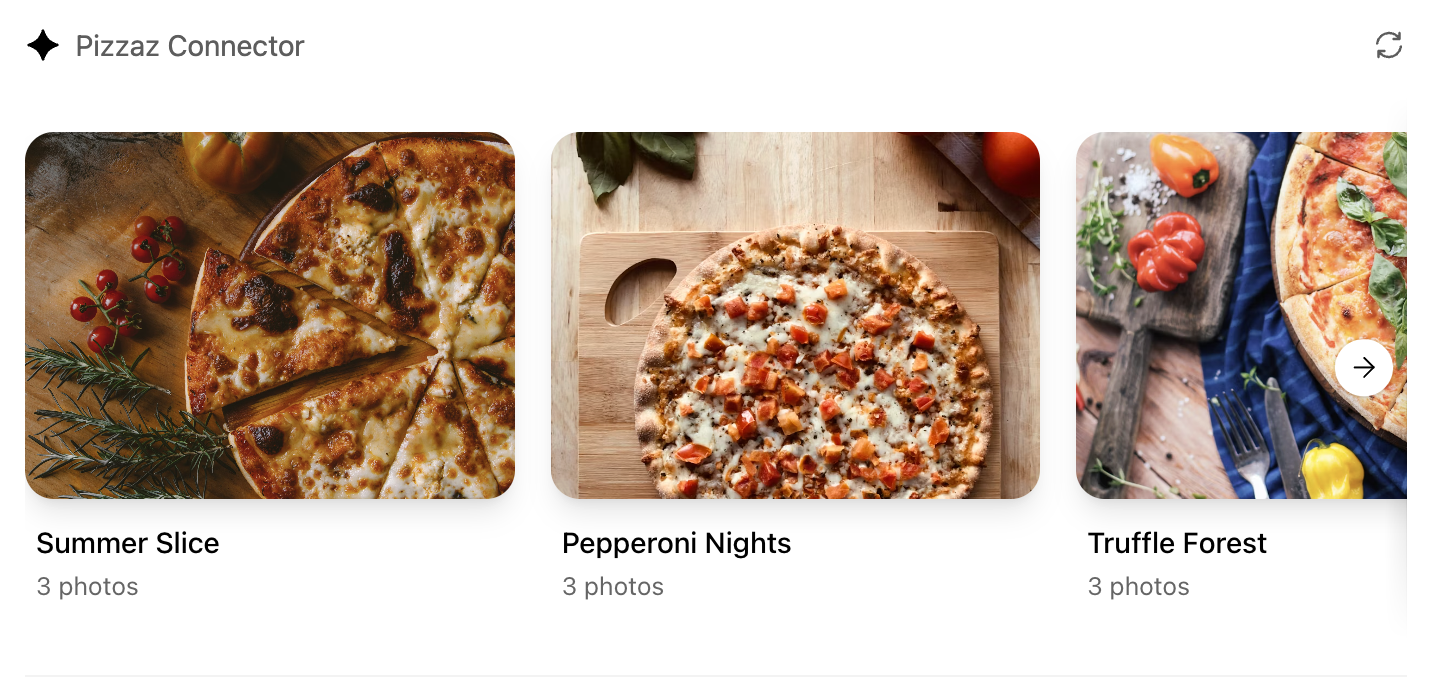

We provide a number of example components in the [Apps SDK examples](https://developers.openai.com/apps-sdk/build/examples). Treat them as blueprints when shaping your own UI:

- **Pizzaz List** – ranked card list with favorites and call-to-action buttons.

- **Pizzaz Carousel** – embla-powered horizontal scroller that demonstrates media-heavy layouts.

- **Pizzaz Map** – Mapbox integration with fullscreen inspector and host state sync.

- **Pizzaz Album** – stacked gallery view built for deep dives on a single place.

- **Pizzaz Video** – scripted player with overlays and fullscreen controls.

Each example shows how to bundle assets, wire host APIs, and structure state for real conversations. Copy the one closest to your use case and adapt the data layer for your tool responses.

### React helper hooks

Using `useOpenAiGlobal` in a `useWidgetState` hook to keep host-persisted widget state aligned with your local React state:

```

export function useWidgetState<T extends WidgetState>(

defaultState: T | (() => T)

): readonly [T, (state: SetStateAction<T>) => void];

export function useWidgetState<T extends WidgetState>(

defaultState?: T | (() => T | null) | null

): readonly [T | null, (state: SetStateAction<T | null>) => void];

export function useWidgetState<T extends WidgetState>(

defaultState?: T | (() => T | null) | null

): readonly [T | null, (state: SetStateAction<T | null>) => void] {

const widgetStateFromWindow = useWebplusGlobal("widgetState") as T;

const [widgetState, _setWidgetState] = useState<T | null>(() => {

if (widgetStateFromWindow != null) {

return widgetStateFromWindow;

}

return typeof defaultState === "function"

? defaultState()

: defaultState ?? null;

});

useEffect(() => {

_setWidgetState(widgetStateFromWindow);

}, [widgetStateFromWindow]);

const setWidgetState = useCallback(

(state: SetStateAction<T | null>) => {

_setWidgetState((prevState) => {

const newState = typeof state === "function" ? state(prevState) : state;

if (newState != null) {

window.openai.setWidgetState(newState);

}

return newState;

});

},

[window.openai.setWidgetState]

);

return [widgetState, setWidgetState] as const;

}

```

The hooks above make it easy to read the latest tool output, layout globals, or widget state directly from React components while still delegating persistence back to ChatGPT.

## Bundle for the iframe

Once you are done writing your React component, you can build it into a single JavaScript module that the server can inline:

```

// package.json

{

"scripts": {

"build": "esbuild src/component.tsx --bundle --format=esm --outfile=dist/component.js"

}

}

```