# Directory Structure

```

├── .dockerignore
├── .env.example
├── .github
│   ├── CODEOWNERS
│   ├── copilot-instructions.md
│   └── workflows
│       ├── docker-publish.yml
│       ├── npm-publish.yml
│       └── run-tests.yml
├── .gitignore
├── .husky
│   └── pre-commit
├── .npmrc
├── .prettierrc.json
├── bun.lock
├── CLAUDE.md
├── Dockerfile
├── LICENSE
├── llms-full.txt
├── package.json
├── pnpm-lock.yaml
├── pnpm-workspace.yaml
├── README.md
├── resources
│   ├── employee-sqlite
│   │   ├── employee.sql
│   │   ├── load_department.sql
│   │   ├── load_dept_emp.sql
│   │   ├── load_dept_manager.sql
│   │   ├── load_employee.sql
│   │   ├── load_salary1.sql
│   │   ├── load_title.sql
│   │   ├── object.sql
│   │   ├── show_elapsed.sql
│   │   └── test_employee_md5.sql
│   └── images
│       ├── claude-desktop.webp
│       ├── cursor.webp
│       ├── logo-full.svg
│       ├── logo-full.webp
│       ├── logo-icon-only.svg
│       ├── logo-text-only.svg
│       └── mcp-inspector.webp
├── scripts
│   └── setup-husky.sh
├── src
│   ├── __tests__
│   │   └── json-rpc-integration.test.ts
│   ├── config
│   │   ├── __tests__
│   │   │   ├── env.test.ts
│   │   │   └── ssh-config-integration.test.ts
│   │   ├── demo-loader.ts
│   │   └── env.ts
│   ├── connectors
│   │   ├── __tests__
│   │   │   ├── mariadb.integration.test.ts
│   │   │   ├── mysql.integration.test.ts
│   │   │   ├── postgres-ssh.integration.test.ts
│   │   │   ├── postgres.integration.test.ts
│   │   │   ├── shared
│   │   │   │   └── integration-test-base.ts
│   │   │   ├── sqlite.integration.test.ts
│   │   │   └── sqlserver.integration.test.ts
│   │   ├── interface.ts
│   │   ├── manager.ts
│   │   ├── mariadb
│   │   │   └── index.ts
│   │   ├── mysql
│   │   │   └── index.ts
│   │   ├── postgres
│   │   │   └── index.ts
│   │   ├── sqlite
│   │   │   └── index.ts
│   │   └── sqlserver
│   │       └── index.ts
│   ├── index.ts
│   ├── prompts
│   │   ├── db-explainer.ts
│   │   ├── index.ts
│   │   └── sql-generator.ts
│   ├── resources
│   │   ├── index.ts
│   │   ├── indexes.ts
│   │   ├── procedures.ts
│   │   ├── schema.ts
│   │   ├── schemas.ts
│   │   └── tables.ts
│   ├── server.ts
│   ├── tools
│   │   ├── __tests__
│   │   │   └── execute-sql.test.ts
│   │   ├── execute-sql.ts
│   │   └── index.ts
│   ├── types
│   │   ├── sql.ts
│   │   └── ssh.ts
│   └── utils
│       ├── __tests__
│       │   ├── safe-url.test.ts
│       │   ├── ssh-config-parser.test.ts
│       │   └── ssh-tunnel.test.ts
│       ├── allowed-keywords.ts
│       ├── dsn-obfuscate.ts
│       ├── response-formatter.ts
│       ├── safe-url.ts
│       ├── sql-row-limiter.ts
│       ├── ssh-config-parser.ts
│       └── ssh-tunnel.ts
├── tsconfig.json
├── tsup.config.ts
└── vitest.config.ts

```

# Files

--------------------------------------------------------------------------------

/.npmrc:

--------------------------------------------------------------------------------

```

# Skip husky install when installing in CI or production environments

ignore-scripts=false

engine-strict=true

hoist=true

enable-pre-post-scripts=true

auto-install-peers=true

```

--------------------------------------------------------------------------------

/.prettierrc.json:

--------------------------------------------------------------------------------

```json

{
  "printWidth": 100,
  "tabWidth": 2,
  "useTabs": false,
  "semi": true,
  "singleQuote": false,
  "trailingComma": "es5",
  "bracketSpacing": true,
  "arrowParens": "always"
}

```

--------------------------------------------------------------------------------

/.dockerignore:

--------------------------------------------------------------------------------

```

# Git

.git

.gitignore

.github

# Node.js

node_modules

npm-debug.log

yarn-debug.log

yarn-error.log

pnpm-debug.log

# Build output

dist

node_modules

# Environment

.env

.env.*

!.env.example

# Editor directories and files

.vscode

.idea

*.suo

*.ntvs*

*.njsproj

*.sln

*.sw?

# OS generated files

.DS_Store

.DS_Store?

._*

.Spotlight-V100

.Trashes

ehthumbs.db

Thumbs.db

# Logs

logs

*.log

# Docker

Dockerfile

.dockerignore

# Project specific

*.md

!README.md

LICENSE

CLAUDE.md

```

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

```

# Dependencies

node_modules/

.pnp/

.pnp.js

vendor/

jspm_packages/

bower_components/

# Build outputs

build/

dist/

out/

*.min.js

*.min.css

# Environment & config

.env

.env.local

.env.development.local

.env.test.local

.env.production.local

.venv

env/

venv/

ENV/

config.local.js

*.local.json

# Logs

logs

*.log

npm-debug.log*

yarn-debug.log*

yarn-error.log*

lerna-debug.log*

# Cache and temp

.npm

.eslintcache

.stylelintcache

.cache/

.parcel-cache/

.next/

.nuxt/

.vuepress/dist

.serverless/

.fusebox/

.dynamodb/

.grunt

.temp

.tmp

.sass-cache/

__pycache__/

*.py[cod]

*$py.class

.pytest_cache/

.coverage

htmlcov/

coverage/

.nyc_output/

# OS files

.DS_Store

Thumbs.db

ehthumbs.db

Desktop.ini

$RECYCLE.BIN/

*.lnk

# Editor directories and files

.idea/

.vscode/

*.swp

*.swo

*~

.*.sw[a-p]

*.sublime-workspace

*.sublime-project

# Compiled binaries

*.com

*.class

*.dll

*.exe

*.o

*.so

```

--------------------------------------------------------------------------------

/.env.example:

--------------------------------------------------------------------------------

```

# DBHub Configuration

# Method 1: Connection String (DSN)

# Use one of these DSN formats:

# DSN=postgres://user:password@localhost:5432/dbname

# DSN=sqlite:///path/to/database.db

# DSN=sqlite::memory:

# DSN=sqlserver://user:password@localhost:1433/dbname

# DSN=mysql://user:password@localhost:3306/dbname

DSN=

# Method 2: Individual Database Parameters

# Use this method if your password contains special characters like @, :, /, #, etc.

# that would break URL parsing in the DSN format above

# DB_TYPE=postgres

# DB_HOST=localhost

# DB_PORT=5432

# DB_USER=postgres

# DB_PASSWORD=my@password:with/special#chars

# DB_NAME=mydatabase

# Supported DB_TYPE values: postgres, mysql, mariadb, sqlserver, sqlite

# DB_PORT is optional - defaults to standard port for each database type

# For SQLite: only DB_TYPE and DB_NAME are required (DB_NAME is the file path)

# Transport configuration

# --transport=stdio (default) for stdio transport

# --transport=sse for SSE transport with HTTP server

TRANSPORT=stdio

# Server port for SSE transport (default: 3000)

PORT=3000

# SSH Tunnel Configuration (optional)

# Use these settings to connect through an SSH bastion host

# SSH_HOST=bastion.example.com

# SSH_PORT=22

# SSH_USER=ubuntu

# SSH_PASSWORD=mypassword

# SSH_KEY=~/.ssh/id_rsa

# SSH_PASSPHRASE=mykeypassphrase

# Read-only mode (optional)

# Set to true to restrict SQL execution to read-only operations

# READONLY=false

```

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

```markdown

> [!NOTE]

> Brought to you by [Bytebase](https://www.bytebase.com/), open-source database DevSecOps platform.

<p align="center">

<a href="https://dbhub.ai/" target="_blank">

<picture>

<img src="https://raw.githubusercontent.com/bytebase/dbhub/main/resources/images/logo-full.webp" width="50%">

</picture>

</a>

</p>

<p align="center">

<a href="https://discord.gg/BjEkZpsJzn"><img src="https://img.shields.io/badge/%20-Hang%20out%20on%20Discord-5865F2?style=for-the-badge&logo=discord&labelColor=EEEEEE" alt="Join our Discord" height="32" /></a>

</p>

<p>

Add to Cursor by copying the link below into your browser:

```text

cursor://anysphere.cursor-deeplink/mcp/install?name=dbhub&config=eyJjb21tYW5kIjoibnB4IEBieXRlYmFzZS9kYmh1YiIsImVudiI6eyJUUkFOU1BPUlQiOiJzdGRpbyIsIkRTTiI6InBvc3RncmVzOi8vdXNlcjpwYXNzd29yZEBsb2NhbGhvc3Q6NTQzMi9kYm5hbWU%2Fc3NsbW9kZT1kaXNhYmxlIiwiUkVBRE9OTFkiOiJ0cnVlIn19

```

</p>

DBHub is a universal database gateway implementing the Model Context Protocol (MCP) server interface. This gateway allows MCP-compatible clients to connect to and explore different databases.

```bash

+------------------+    +--------------+    +------------------+
|                  |    |              |    |                  |
|                  |    |              |    |                  |
|  Claude Desktop  +--->+              +--->+    PostgreSQL    |
|                  |    |              |    |                  |
|  Claude Code     +--->+              +--->+    SQL Server    |
|                  |    |              |    |                  |
|  Cursor          +--->+    DBHub     +--->+    SQLite        |
|                  |    |              |    |                  |
|  Other Clients   +--->+              +--->+    MySQL         |
|                  |    |              |    |                  |
|                  |    |              +--->+    MariaDB       |
|                  |    |              |    |                  |
|                  |    |              |    |                  |
+------------------+    +--------------+    +------------------+
     MCP Clients           MCP Server            Databases

```

## Supported Matrix

### Database Resources

| Resource Name | URI Format | PostgreSQL | MySQL | MariaDB | SQL Server | SQLite |

| --------------------------- | ------------------------------------------------------ | :--------: | :---: | :-----: | :--------: | :----: |

| schemas | `db://schemas` | ✅ | ✅ | ✅ | ✅ | ✅ |

| tables_in_schema | `db://schemas/{schemaName}/tables` | ✅ | ✅ | ✅ | ✅ | ✅ |

| table_structure_in_schema | `db://schemas/{schemaName}/tables/{tableName}` | ✅ | ✅ | ✅ | ✅ | ✅ |

| indexes_in_table | `db://schemas/{schemaName}/tables/{tableName}/indexes` | ✅ | ✅ | ✅ | ✅ | ✅ |

| procedures_in_schema | `db://schemas/{schemaName}/procedures` | ✅ | ✅ | ✅ | ✅ | ❌ |

| procedure_details_in_schema | `db://schemas/{schemaName}/procedures/{procedureName}` | ✅ | ✅ | ✅ | ✅ | ❌ |
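
The URI formats in the table above can be matched with simple anchored patterns. The following is a minimal sketch, not DBHub's actual routing code; `parseResourceUri` and the `ResourceMatch` type are hypothetical names introduced for illustration.

```typescript
// Hypothetical helper: maps a db:// URI to one of the resource names listed above.
type ResourceMatch = {
  resource: string;
  params: Record<string, string>;
};

// Templates copied from the "URI Format" column.
const templates: Array<[string, string]> = [
  ["schemas", "db://schemas"],
  ["tables_in_schema", "db://schemas/{schemaName}/tables"],
  ["table_structure_in_schema", "db://schemas/{schemaName}/tables/{tableName}"],
  ["indexes_in_table", "db://schemas/{schemaName}/tables/{tableName}/indexes"],
  ["procedures_in_schema", "db://schemas/{schemaName}/procedures"],
  ["procedure_details_in_schema", "db://schemas/{schemaName}/procedures/{procedureName}"],
];

function parseResourceUri(uri: string): ResourceMatch | null {
  for (const [resource, template] of templates) {
    const names: string[] = [];
    // Turn each {placeholder} into a capture group that matches one path segment.
    const pattern = template.replace(/\{(\w+)\}/g, (_m, name: string) => {
      names.push(name);
      return "([^/]+)";
    });
    // Patterns are anchored at both ends, so the order of the templates does not matter.
    const match = new RegExp(`^${pattern}$`).exec(uri);
    if (match) {
      const params: Record<string, string> = {};
      names.forEach((name, i) => {
        params[name] = decodeURIComponent(match[i + 1]);
      });
      return { resource, params };
    }
  }
  return null;
}

console.log(parseResourceUri("db://schemas/public/tables/users/indexes"));
```

Since SQLite does not support the two procedure resources, a real handler would additionally need to reject those URIs for SQLite connections.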

### Database Tools

| Tool | Command Name | Description | PostgreSQL | MySQL | MariaDB | SQL Server | SQLite |

| ----------- | ------------- | ------------------------------------------------------------------- | :--------: | :---: | :-----: | :--------: | :----: |

| Execute SQL | `execute_sql` | Execute single or multiple SQL statements (separated by semicolons) | ✅ | ✅ | ✅ | ✅ | ✅ |

### Prompt Capabilities

| Prompt | Command Name | PostgreSQL | MySQL | MariaDB | SQL Server | SQLite |

| ------------------- | -------------- | :--------: | :---: | :-----: | :--------: | :----: |

| Generate SQL | `generate_sql` | ✅ | ✅ | ✅ | ✅ | ✅ |

| Explain DB Elements | `explain_db` | ✅ | ✅ | ✅ | ✅ | ✅ |

## Installation

### Docker

```bash

# PostgreSQL example

docker run --rm --init \
  --name dbhub \
  --publish 8080:8080 \
  bytebase/dbhub \
  --transport http \
  --port 8080 \
  --dsn "postgres://user:password@localhost:5432/dbname?sslmode=disable"

```

```bash

# Demo mode with sqlite sample employee database

docker run --rm --init \
  --name dbhub \
  --publish 8080:8080 \
  bytebase/dbhub \
  --transport http \
  --port 8080 \
  --demo

```

**Docker Compose Setup:**

If you're using Docker Compose for development, add DBHub to your `docker-compose.yml`:

```yaml

dbhub:
  image: bytebase/dbhub:latest
  container_name: dbhub
  ports:
    - "8080:8080"
  environment:
    - DBHUB_LOG_LEVEL=info
  command:
    - --transport
    - http
    - --port
    - "8080"
    - --dsn
    - "postgres://user:password@database:5432/dbname"
  depends_on:
    - database

```

### NPM

```bash

# PostgreSQL example

npx @bytebase/dbhub --transport http --port 8080 --dsn "postgres://user:password@localhost:5432/dbname?sslmode=disable"

# Demo mode with sqlite sample employee database

npx @bytebase/dbhub --transport http --port 8080 --demo

```

> Note: The demo mode includes a bundled SQLite sample "employee" database with tables for employees, departments, salaries, and more.

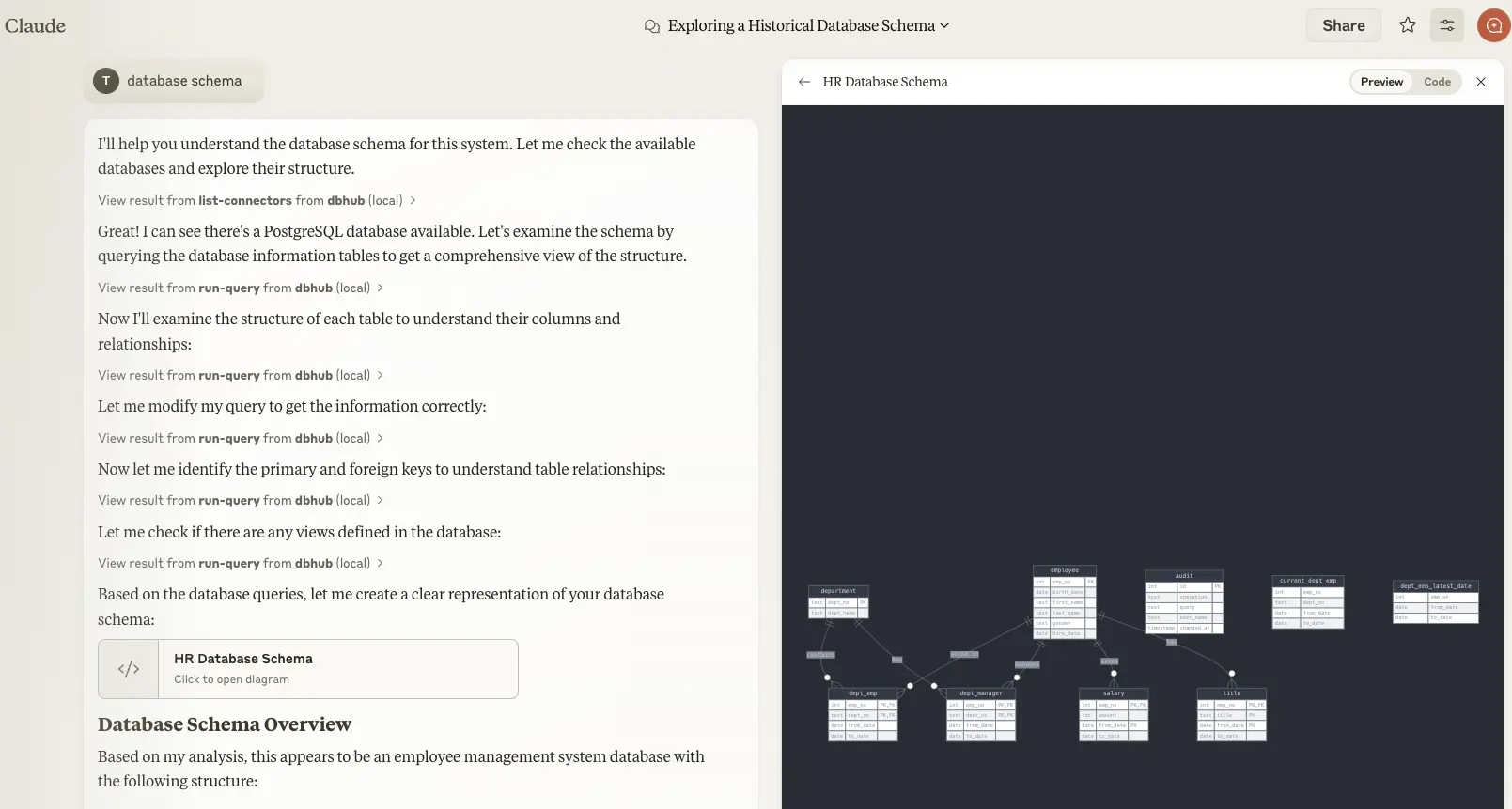

### Claude Desktop

- Claude Desktop only supports the `stdio` transport. See https://github.com/orgs/modelcontextprotocol/discussions/16

```json

// claude_desktop_config.json
{
  "mcpServers": {
    "dbhub-postgres-docker": {
      "command": "docker",
      "args": [
        "run",
        "-i",
        "--rm",
        "bytebase/dbhub",
        "--transport",
        "stdio",
        "--dsn",
        // Use host.docker.internal as the host if connecting to the local db
        "postgres://user:password@host.docker.internal:5432/dbname?sslmode=disable"
      ]
    },
    "dbhub-postgres-npx": {
      "command": "npx",
      "args": [
        "-y",
        "@bytebase/dbhub",
        "--transport",
        "stdio",
        "--dsn",
        "postgres://user:password@localhost:5432/dbname?sslmode=disable"
      ]
    },
    "dbhub-demo": {
      "command": "npx",
      "args": ["-y", "@bytebase/dbhub", "--transport", "stdio", "--demo"]
    }
  }
}

```

### Claude Code

Check https://docs.anthropic.com/en/docs/claude-code/mcp

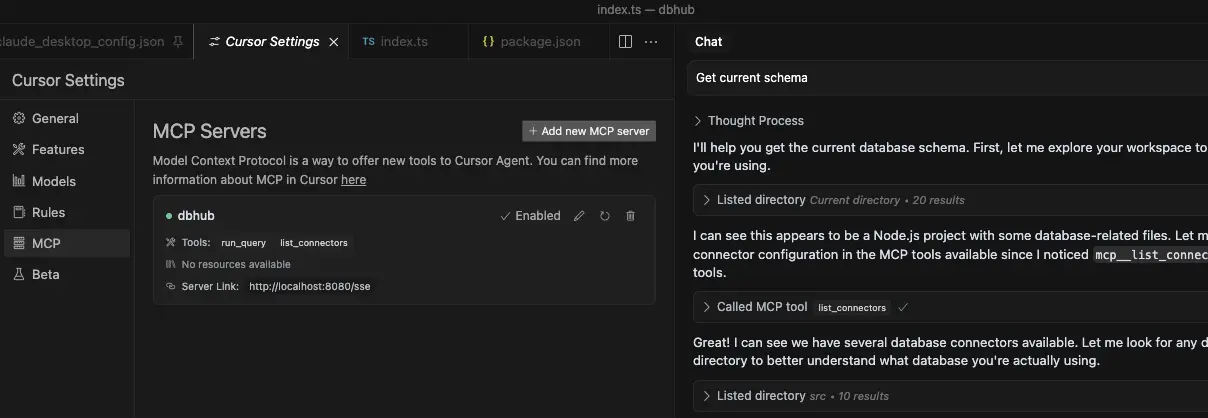

### Cursor

<p>

Add to Cursor by copying the link below into your browser:

```text

cursor://anysphere.cursor-deeplink/mcp/install?name=dbhub&config=eyJjb21tYW5kIjoibnB4IEBieXRlYmFzZS9kYmh1YiIsImVudiI6eyJUUkFOU1BPUlQiOiJzdGRpbyIsIkRTTiI6InBvc3RncmVzOi8vdXNlcjpwYXNzd29yZEBsb2NhbGhvc3Q6NTQzMi9kYm5hbWU%2Fc3NsbW9kZT1kaXNhYmxlIiwiUkVBRE9OTFkiOiJ0cnVlIn19

```

</p>

- Cursor supports both `stdio` and `http`.

- Follow [Cursor MCP guide](https://docs.cursor.com/context/model-context-protocol) and make sure to use [Agent](https://docs.cursor.com/chat/agent) mode.

### VSCode + Copilot

Check https://code.visualstudio.com/docs/copilot/customization/mcp-servers

VSCode with GitHub Copilot can connect to DBHub via both `stdio` and `http` transports. This enables AI agents to interact with your development database through a secure interface.

- VSCode supports both `stdio` and `http` transports

- Configure MCP server in `.vscode/mcp.json`:

**Stdio Transport:**

```json

{
  "servers": {
    "dbhub": {
      "command": "npx",
      "args": [
        "-y",
        "@bytebase/dbhub",
        "--transport",
        "stdio",
        "--dsn",
        "postgres://user:password@localhost:5432/dbname"
      ]
    }
  },
  "inputs": []
}

```

**HTTP Transport:**

```json

{
  "servers": {
    "dbhub": {
      "url": "http://localhost:8080/message",
      "type": "http"
    }
  },
  "inputs": []
}

```

**Copilot Instructions:**

You can provide Copilot with context by creating `.github/copilot-instructions.md`:

```markdown

## Database Access

This project provides an MCP server (DBHub) for secure SQL access to the development database.

AI agents can execute SQL queries. In read-only mode (recommended for production):

- `SELECT * FROM users LIMIT 5;`

- `SHOW TABLES;`

- `DESCRIBE table_name;`

In read-write mode (development/testing):

- `INSERT INTO users (name, email) VALUES ('John', 'john@example.com');`

- `UPDATE users SET status = 'active' WHERE id = 1;`

- `CREATE TABLE test_table (id INT PRIMARY KEY);`

Use `--readonly` flag to restrict to read-only operations for safety.

```

## Usage

### Read-only Mode

You can run DBHub in read-only mode, which restricts SQL query execution to read-only operations:

```bash

# Enable read-only mode

npx @bytebase/dbhub --readonly --dsn "postgres://user:password@localhost:5432/dbname"

```

In read-only mode, only [readonly SQL operations](https://github.com/bytebase/dbhub/blob/main/src/utils/allowed-keywords.ts) are allowed.

This provides an additional layer of security when connecting to production databases.
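
As a rough illustration of keyword-based read-only filtering, the sketch below allows a statement only if its first keyword is on an allowlist. The keyword list and the `isReadOnlySql` helper are assumptions made for this example; the authoritative lists live in `src/utils/allowed-keywords.ts`, and real SQL handling has to deal with comments, string literals, and dialect differences that this sketch ignores.

```typescript
// Hypothetical allowlist for illustration; the real lists are defined in allowed-keywords.ts.
const READ_ONLY_KEYWORDS = new Set(["select", "show", "describe", "explain"]);

// Returns true only if every semicolon-separated statement starts with an allowed keyword.
// Note: naive splitting on ";" breaks on semicolons inside string literals.
function isReadOnlySql(sql: string): boolean {
  const statements = sql
    .split(";")
    .map((s) => s.trim())
    .filter((s) => s.length > 0);
  return (
    statements.length > 0 &&
    statements.every((stmt) => {
      const firstWord = stmt.split(/\s+/)[0].toLowerCase();
      return READ_ONLY_KEYWORDS.has(firstWord);
    })
  );
}

console.log(isReadOnlySql("SELECT * FROM users LIMIT 5;")); // true
console.log(isReadOnlySql("SELECT 1; DROP TABLE users;")); // false
```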

### Suffix Tool Names with ID

You can suffix tool names with a custom ID using the `--id` flag or `ID` environment variable. This is useful when running multiple DBHub instances (e.g., in Cursor) to allow the client to route queries to the correct database.

**Example configuration for multiple databases in Cursor:**

```json

{
  "mcpServers": {
    "dbhub-prod": {
      "command": "npx",
      "args": ["-y", "@bytebase/dbhub"],
      "env": {
        "ID": "prod",
        "DSN": "postgres://user:password@prod-host:5432/dbname"
      }
    },
    "dbhub-staging": {
      "command": "npx",
      "args": ["-y", "@bytebase/dbhub"],
      "env": {
        "ID": "staging",
        "DSN": "mysql://user:password@staging-host:3306/dbname"
      }
    }
  }
}

```

With this configuration:

- Production database tools: `execute_sql_prod`

- Staging database tools: `execute_sql_staging`
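
The naming scheme can be summarized in a few lines. This is only a sketch of the behavior described above; `toolNameFor` is a hypothetical helper, not a function exported by DBHub.

```typescript
// Hypothetical helper showing how an instance ID suffixes a tool name.
function toolNameFor(baseName: string, id?: string): string {
  // Without an ID the base name is used unchanged.
  return id ? `${baseName}_${id}` : baseName;
}

console.log(toolNameFor("execute_sql", "prod")); // execute_sql_prod
console.log(toolNameFor("execute_sql", "staging")); // execute_sql_staging
console.log(toolNameFor("execute_sql")); // execute_sql
```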

### Row Limiting

You can limit the number of rows returned from SELECT queries using the `--max-rows` parameter. This helps prevent accidentally retrieving too much data from large tables:

```bash

# Limit SELECT queries to return at most 1000 rows

npx @bytebase/dbhub --dsn "postgres://user:password@localhost:5432/dbname" --max-rows 1000

```

- Row limiting is only applied to SELECT statements, not INSERT/UPDATE/DELETE

- If your query already has a `LIMIT` or `TOP` clause, DBHub uses the smaller value
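
The "smaller value wins" rule can be sketched as follows. This is an illustrative simplification, assuming a single statement with an optional trailing `LIMIT n`; it does not handle `TOP`, subqueries, or `OFFSET`, and `applyMaxRows` is a hypothetical name rather than DBHub's implementation in `src/utils/sql-row-limiter.ts`.

```typescript
// Hypothetical sketch: enforce a row cap on a single SELECT statement.
function applyMaxRows(sql: string, maxRows: number): string {
  const trimmed = sql.trim().replace(/;$/, "").trimEnd();
  // Only SELECT statements are limited; other statements pass through unchanged.
  if (!/^select\b/i.test(trimmed)) {
    return sql;
  }
  const limitMatch = /\blimit\s+(\d+)\s*$/i.exec(trimmed);
  if (limitMatch) {
    // An existing LIMIT is kept if it is already smaller than the cap.
    const effective = Math.min(Number(limitMatch[1]), maxRows);
    return trimmed.slice(0, limitMatch.index) + `LIMIT ${effective}`;
  }
  return `${trimmed} LIMIT ${maxRows}`;
}

console.log(applyMaxRows("SELECT * FROM t LIMIT 5000;", 1000)); // SELECT * FROM t LIMIT 1000
console.log(applyMaxRows("SELECT * FROM t LIMIT 10", 1000)); // SELECT * FROM t LIMIT 10
```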

### SSL Connections

You can specify the SSL mode using the `sslmode` parameter in your DSN string:

| Database | `sslmode=disable` | `sslmode=require` | Default SSL Behavior |

| ---------- | :---------------: | :---------------: | :----------------------: |

| PostgreSQL | ✅ | ✅ | Certificate verification |

| MySQL | ✅ | ✅ | Certificate verification |

| MariaDB | ✅ | ✅ | Certificate verification |

| SQL Server | ✅ | ✅ | Certificate verification |

| SQLite | ❌ | ❌ | N/A (file-based) |

**SSL Mode Options:**

- `sslmode=disable`: All SSL/TLS encryption is turned off. Data is transmitted in plaintext.

- `sslmode=require`: The connection is encrypted, but the server's certificate is not verified. This protects against packet sniffing but not against man-in-the-middle attacks. You may use this mode with a trusted self-signed CA.

Without specifying `sslmode`, most databases default to certificate verification, which provides the highest level of security.
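
The three cases can be expressed as a small mapping. The sketch below is an assumption about how such a mapping could look, not DBHub's connector code: `sslSettingFor` is a hypothetical helper, and the result shape borrows the `rejectUnauthorized` option name from Node's TLS options.

```typescript
// TLS setting in the style of Node's TLS options (`rejectUnauthorized`).
type SslSetting = false | { rejectUnauthorized: boolean };

// Hypothetical helper: derive the TLS setting from the `sslmode` DSN parameter.
function sslSettingFor(dsn: string): SslSetting {
  const mode = new URL(dsn).searchParams.get("sslmode");
  if (mode === "disable") {
    return false; // no encryption
  }
  if (mode === "require") {
    return { rejectUnauthorized: false }; // encrypted, certificate not verified
  }
  return { rejectUnauthorized: true }; // default: encrypted with certificate verification
}

console.log(sslSettingFor("postgres://user:password@localhost:5432/dbname?sslmode=require"));
```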

Example usage:

```bash

# Disable SSL

postgres://user:password@localhost:5432/dbname?sslmode=disable

# Require SSL without certificate verification

postgres://user:password@localhost:5432/dbname?sslmode=require

# Standard SSL with certificate verification (default)

postgres://user:password@localhost:5432/dbname

```

### SSH Tunnel Support

DBHub supports connecting to databases through SSH tunnels, enabling secure access to databases in private networks or behind firewalls.

#### Using SSH Config File (Recommended)

DBHub can read SSH connection settings from your `~/.ssh/config` file. Simply use the host alias from your SSH config:

```bash

# If you have this in ~/.ssh/config:

# Host mybastion

# HostName bastion.example.com

# User ubuntu

# IdentityFile ~/.ssh/id_rsa

npx @bytebase/dbhub \
  --dsn "postgres://dbuser:dbpass@database.internal:5432/mydb" \
  --ssh-host mybastion

```

DBHub will automatically use the settings from your SSH config, including hostname, user, port, and identity file. If no identity file is specified in the config, DBHub will try common default locations (`~/.ssh/id_rsa`, `~/.ssh/id_ed25519`, etc.).

#### SSH with Password Authentication

```bash

npx @bytebase/dbhub \
  --dsn "postgres://dbuser:dbpass@database.internal:5432/mydb" \
  --ssh-host bastion.example.com \
  --ssh-user ubuntu \
  --ssh-password mypassword

```

#### SSH with Private Key Authentication

```bash

npx @bytebase/dbhub \
  --dsn "postgres://dbuser:dbpass@database.internal:5432/mydb" \
  --ssh-host bastion.example.com \
  --ssh-user ubuntu \
  --ssh-key ~/.ssh/id_rsa

```

#### SSH with Private Key and Passphrase

```bash

npx @bytebase/dbhub \
  --dsn "postgres://dbuser:dbpass@database.internal:5432/mydb" \
  --ssh-host bastion.example.com \
  --ssh-port 2222 \
  --ssh-user ubuntu \
  --ssh-key ~/.ssh/id_rsa \
  --ssh-passphrase mykeypassphrase

```

#### Using Environment Variables

```bash

export SSH_HOST=bastion.example.com

export SSH_USER=ubuntu

export SSH_KEY=~/.ssh/id_rsa

npx @bytebase/dbhub --dsn "postgres://dbuser:dbpass@database.internal:5432/mydb"

```

**Note**: When using SSH tunnels, the database host in your DSN should be the hostname/IP as seen from the SSH server (bastion host), not from your local machine.

### Configure your database connection

You can use DBHub in demo mode with a sample employee database for testing:

```bash

npx @bytebase/dbhub --demo

```

> [!WARNING]

> If your user/password contains special characters, you have two options:

>

> 1. Escape them in the DSN (e.g. `pass#word` should be escaped as `pass%23word`)

> 2. Use the individual database parameters method below (recommended)
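
For option 1, the standard `encodeURIComponent` function produces the required percent-encoding. The snippet below is a small sketch; `buildDsn` is a hypothetical helper introduced only for this example.

```typescript
// Hypothetical helper: build a postgres DSN with percent-encoded credentials.
function buildDsn(user: string, password: string, hostAndDatabase: string): string {
  return `postgres://${encodeURIComponent(user)}:${encodeURIComponent(password)}@${hostAndDatabase}`;
}

console.log(buildDsn("user", "pass#word", "localhost:5432/dbname"));
// postgres://user:pass%23word@localhost:5432/dbname
```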

For real databases, you can configure the database connection in two ways:

#### Method 1: Database Source Name (DSN)

- **Command line argument** (highest priority):

```bash

npx @bytebase/dbhub --dsn "postgres://user:password@localhost:5432/dbname?sslmode=disable"

```

- **Environment variable** (second priority):

```bash

export DSN="postgres://user:password@localhost:5432/dbname?sslmode=disable"

npx @bytebase/dbhub

```

- **Environment file** (third priority):

  - For development: Create `.env.local` with your DSN

  - For production: Create `.env` with your DSN

```

DSN=postgres://user:password@localhost:5432/dbname?sslmode=disable

```
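
The priority order above (command line, then environment variable, then environment file) can be sketched as a simple fallback chain. This is an illustration under assumptions, not the logic in `src/config/env.ts`: `resolveDsn` is a hypothetical helper, the env file is assumed to be already parsed into an object, and accepting the `--dsn=value` form is an assumption.

```typescript
// Hypothetical sketch of the priority order: CLI argument, environment variable, env file.
type DsnSource = "cli" | "env" | "file";

function resolveDsn(
  cliArgs: string[],
  env: Record<string, string | undefined>,
  fileVars: Record<string, string | undefined>
): { dsn: string; source: DsnSource } | null {
  // Accept both "--dsn value" and "--dsn=value".
  const inline = cliArgs.find((arg) => arg.startsWith("--dsn="));
  const index = cliArgs.indexOf("--dsn");
  const fromCli = inline
    ? inline.slice("--dsn=".length)
    : index >= 0
      ? cliArgs[index + 1]
      : undefined;
  if (fromCli) return { dsn: fromCli, source: "cli" };
  if (env.DSN) return { dsn: env.DSN, source: "env" };
  if (fileVars.DSN) return { dsn: fileVars.DSN, source: "file" };
  return null;
}

console.log(resolveDsn(["--dsn", "sqlite:///a.db"], { DSN: "sqlite:///b.db" }, {}));
```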

#### Method 2: Individual Database Parameters

If your password contains special characters that would break URL parsing, use individual environment variables instead:

- **Environment variables**:

```bash

export DB_TYPE=postgres

export DB_HOST=localhost

export DB_PORT=5432

export DB_USER=myuser

export DB_PASSWORD='my@complex:password/with#special&chars'

export DB_NAME=mydatabase

npx @bytebase/dbhub

```

- **Environment file**:

```

DB_TYPE=postgres

DB_HOST=localhost

DB_PORT=5432

DB_USER=myuser

DB_PASSWORD=my@complex:password/with#special&chars

DB_NAME=mydatabase

```

**Supported DB_TYPE values**: `postgres`, `mysql`, `mariadb`, `sqlserver`, `sqlite`

**Default ports** (when DB_PORT is omitted):

- PostgreSQL: `5432`

- MySQL/MariaDB: `3306`

- SQL Server: `1433`

**For SQLite**: Only `DB_TYPE=sqlite` and `DB_NAME=/path/to/database.db` are required.
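
The default-port rule amounts to a lookup with an override. The following sketch assumes the port arrives as a string from the environment; `resolvePort` is a hypothetical helper used only for illustration.

```typescript
// Default ports as listed above. SQLite is file-based and has no port.
const DEFAULT_PORTS = new Map<string, number>([
  ["postgres", 5432],
  ["mysql", 3306],
  ["mariadb", 3306],
  ["sqlserver", 1433],
]);

// Hypothetical helper: an explicit DB_PORT wins, otherwise the default for DB_TYPE is used.
function resolvePort(dbType: string, dbPort?: string): number | undefined {
  if (dbPort) {
    return Number(dbPort);
  }
  return DEFAULT_PORTS.get(dbType); // undefined for sqlite
}

console.log(resolvePort("postgres")); // 5432
console.log(resolvePort("mysql", "3307")); // 3307
```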

> [!TIP]

> Use the individual parameter method when your password contains special characters like `@`, `:`, `/`, `#`, `&`, `=` that would break DSN parsing.

> [!WARNING]

> When running in Docker, use `host.docker.internal` instead of `localhost` to connect to databases running on your host machine. For example: `mysql://user:password@host.docker.internal:3306/dbname`

DBHub supports the following database connection string formats:

| Database | DSN Format | Example |

| ---------- | -------------------------------------------------------- | -------------------------------------------------------------------------------------------------------------- |

| MySQL | `mysql://[user]:[password]@[host]:[port]/[database]` | `mysql://user:password@localhost:3306/dbname?sslmode=disable` |

| MariaDB | `mariadb://[user]:[password]@[host]:[port]/[database]` | `mariadb://user:password@localhost:3306/dbname?sslmode=disable` |

| PostgreSQL | `postgres://[user]:[password]@[host]:[port]/[database]` | `postgres://user:password@localhost:5432/dbname?sslmode=disable` |

| SQL Server | `sqlserver://[user]:[password]@[host]:[port]/[database]` | `sqlserver://user:password@localhost:1433/dbname?sslmode=disable` |

| SQLite | `sqlite:///[path/to/file]` or `sqlite:///:memory:` | `sqlite:///path/to/database.db`, `sqlite:C:/Users/YourName/data/database.db` (Windows), or `sqlite:///:memory:` |
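
For the network databases, these DSNs follow ordinary URL syntax, so the built-in WHATWG `URL` class can split them into parts. The sketch below is illustrative only; `parseDsn` and `ParsedDsn` are hypothetical names, and the SQLite formats are deliberately left out because they carry a file path rather than a host.

```typescript
interface ParsedDsn {
  type: string;
  user: string;
  password: string;
  host: string;
  port: number | undefined;
  database: string;
}

// Hypothetical sketch: split a network-database DSN into its components.
function parseDsn(dsn: string): ParsedDsn {
  const url = new URL(dsn);
  return {
    type: url.protocol.replace(/:$/, ""),
    // URL keeps credentials percent-encoded, so decode them before use.
    user: decodeURIComponent(url.username),
    password: decodeURIComponent(url.password),
    host: url.hostname,
    port: url.port ? Number(url.port) : undefined,
    database: url.pathname.replace(/^\//, ""),
  };
}

console.log(parseDsn("postgres://user:pass%23word@localhost:5432/dbname?sslmode=disable"));
```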

#### SQL Server

Extra query parameters:

#### authentication

- `authentication=azure-active-directory-access-token`. Only applicable when running from Azure. See [DefaultAzureCredential](https://learn.microsoft.com/en-us/azure/developer/javascript/sdk/authentication/credential-chains#use-defaultazurecredential-for-flexibility).

### Transport

- **stdio** (default) - for direct integration with tools like Claude Desktop:

```bash

npx @bytebase/dbhub --transport stdio --dsn "postgres://user:password@localhost:5432/dbname?sslmode=disable"

```

- **http** - for browser and network clients:

```bash

npx @bytebase/dbhub --transport http --port 5678 --dsn "postgres://user:password@localhost:5432/dbname?sslmode=disable"

```

### Command line options

| Option | Environment Variable | Description | Default |

| -------------- | -------------------- | --------------------------------------------------------------------- | ---------------------------- |

| dsn | `DSN` | Database connection string | Required if not in demo mode |

| N/A | `DB_TYPE` | Database type: `postgres`, `mysql`, `mariadb`, `sqlserver`, `sqlite` | N/A |

| N/A | `DB_HOST` | Database server hostname (not needed for SQLite) | N/A |

| N/A | `DB_PORT` | Database server port (uses default if omitted, not needed for SQLite) | N/A |

| N/A | `DB_USER` | Database username (not needed for SQLite) | N/A |

| N/A | `DB_PASSWORD` | Database password (not needed for SQLite) | N/A |

| N/A | `DB_NAME` | Database name or SQLite file path | N/A |

| transport | `TRANSPORT` | Transport mode: `stdio` or `http` | `stdio` |

| port | `PORT` | HTTP server port (only applicable when using `--transport=http`) | `8080` |

| readonly | `READONLY` | Restrict SQL execution to read-only operations | `false` |

| max-rows | N/A | Limit the number of rows returned from SELECT queries | No limit |

| demo | N/A | Run in demo mode with sample employee database | `false` |

| id | `ID` | Instance identifier to suffix tool names (for multi-instance) | N/A |

| ssh-host | `SSH_HOST` | SSH server hostname for tunnel connection | N/A |

| ssh-port | `SSH_PORT` | SSH server port | `22` |

| ssh-user | `SSH_USER` | SSH username | N/A |

| ssh-password | `SSH_PASSWORD` | SSH password (for password authentication) | N/A |

| ssh-key | `SSH_KEY` | Path to SSH private key file | N/A |

| ssh-passphrase | `SSH_PASSPHRASE` | Passphrase for SSH private key | N/A |

The demo mode uses an in-memory SQLite database loaded with the [sample employee database](https://github.com/bytebase/dbhub/tree/main/resources/employee-sqlite) that includes tables for employees, departments, titles, salaries, department employees, and department managers. The sample database includes SQL scripts for table creation, data loading, and testing.

## Development

1. Install dependencies:

```bash

pnpm install

```

1. Run in development mode:

```bash

pnpm dev

```

1. Build for production:

```bash

pnpm build

pnpm start --transport stdio --dsn "postgres://user:password@localhost:5432/dbname?sslmode=disable"

```

### Testing

The project uses Vitest for comprehensive unit and integration testing:

- **Run all tests**: `pnpm test`

- **Run tests in watch mode**: `pnpm test:watch`

- **Run integration tests**: `pnpm test:integration`

#### Integration Tests

DBHub includes comprehensive integration tests for all supported database connectors using [Testcontainers](https://testcontainers.com/). These tests run against real database instances in Docker containers, ensuring full compatibility and feature coverage.

##### Prerequisites

- **Docker**: Ensure Docker is installed and running on your machine

- **Docker Resources**: Allocate sufficient memory (recommended: 4GB+) for multiple database containers

- **Network Access**: Ability to pull Docker images from registries

##### Running Integration Tests

**Note**: This command runs all integration tests in parallel, which may take 5-15 minutes depending on your system resources and network speed.

```bash

# Run all database integration tests

pnpm test:integration

```

```bash

# Run only PostgreSQL integration tests

pnpm test src/connectors/__tests__/postgres.integration.test.ts

# Run only MySQL integration tests

pnpm test src/connectors/__tests__/mysql.integration.test.ts

# Run only MariaDB integration tests

pnpm test src/connectors/__tests__/mariadb.integration.test.ts

# Run only SQL Server integration tests

pnpm test src/connectors/__tests__/sqlserver.integration.test.ts

# Run only SQLite integration tests

pnpm test src/connectors/__tests__/sqlite.integration.test.ts

# Run JSON RPC integration tests

pnpm test src/__tests__/json-rpc-integration.test.ts

```

All integration tests follow these patterns:

1. **Container Lifecycle**: Start database container → Connect → Setup test data → Run tests → Cleanup

2. **Shared Test Utilities**: Common test patterns implemented in `IntegrationTestBase` class

3. **Database-Specific Features**: Each database includes tests for unique features and capabilities

4. **Error Handling**: Comprehensive testing of connection errors, invalid SQL, and edge cases

##### Troubleshooting Integration Tests

**Container Startup Issues:**

```bash

# Check Docker is running

docker ps

# Check Docker disk usage (images, containers, volumes)

docker system df

# Pull images manually if needed

docker pull postgres:15-alpine

docker pull mysql:8.0

docker pull mariadb:10.11

docker pull mcr.microsoft.com/mssql/server:2019-latest

```

**SQL Server Timeout Issues:**

- SQL Server containers require significant startup time (3-5 minutes)

- Ensure Docker has sufficient memory allocated (4GB+ recommended)

- Consider running SQL Server tests separately if experiencing timeouts

**Network/Resource Issues:**

```bash

# Run tests with verbose output

pnpm test:integration --reporter=verbose

# Run single database test to isolate issues

pnpm test:integration -- --testNamePattern="PostgreSQL"

# Check Docker container logs if tests fail

docker logs <container_id>

```

#### Pre-commit Hooks (for Developers)

The project includes pre-commit hooks to run tests automatically before each commit:

1. After cloning the repository, set up the pre-commit hooks:

```bash

./scripts/setup-husky.sh

```

2. This ensures the test suite runs automatically whenever you create a commit, preventing commits that would break tests.

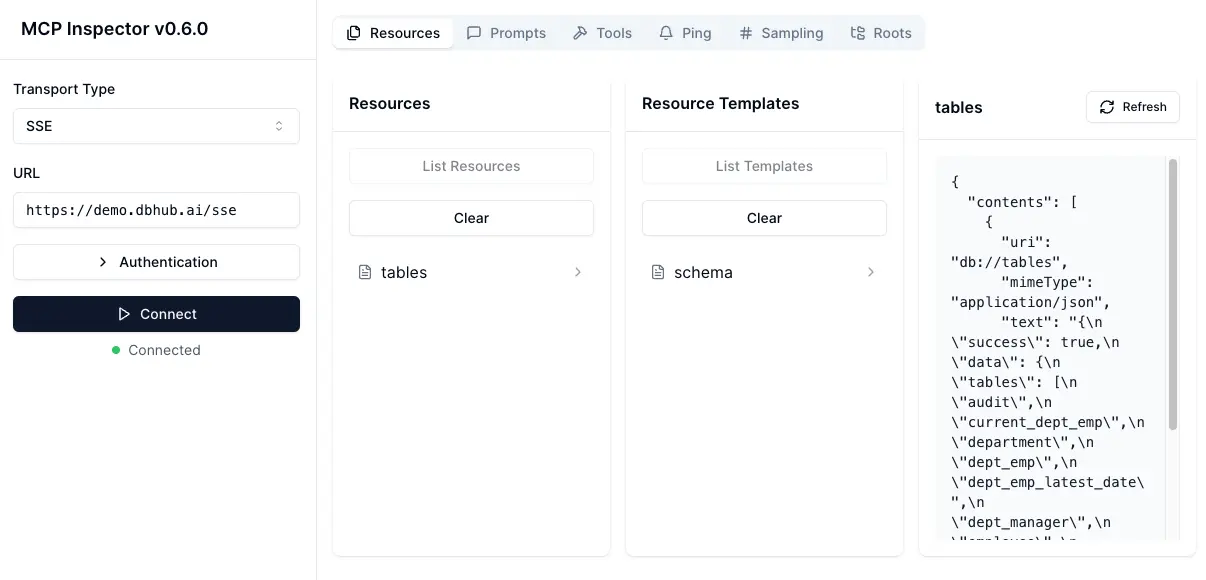

### Debug with [MCP Inspector](https://github.com/modelcontextprotocol/inspector)

#### stdio

```bash

# PostgreSQL example

TRANSPORT=stdio DSN="postgres://user:password@localhost:5432/dbname?sslmode=disable" npx @modelcontextprotocol/inspector node /path/to/dbhub/dist/index.js

```

#### HTTP

```bash

# Start DBHub with HTTP transport

pnpm dev --transport=http --port=8080

# Start the MCP Inspector in another terminal

npx @modelcontextprotocol/inspector

```

Connect to the DBHub server `/message` endpoint
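
MCP messages are JSON-RPC 2.0, so a tool invocation sent to that endpoint is a `tools/call` request. The sketch below only builds the request body and does not send it; the `sql` argument name is an assumption for illustration (check the tool's input schema in the Inspector), and `buildExecuteSqlRequest` is a hypothetical helper.

```typescript
// JSON-RPC 2.0 envelope for an MCP `tools/call` request.
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

// Hypothetical helper; the `sql` argument name is assumed, not taken from the source.
function buildExecuteSqlRequest(id: number, sql: string): ToolCallRequest {
  return {
    jsonrpc: "2.0",
    id,
    method: "tools/call",
    params: { name: "execute_sql", arguments: { sql } },
  };
}

console.log(JSON.stringify(buildExecuteSqlRequest(1, "SELECT 1"), null, 2));
```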

## Contributors

<a href="https://github.com/bytebase/dbhub/graphs/contributors">

<img src="https://contrib.rocks/image?repo=bytebase/dbhub" />

</a>

## Star History

[Star History](https://www.star-history.com/#bytebase/dbhub&Date)

```

--------------------------------------------------------------------------------

/CLAUDE.md:

--------------------------------------------------------------------------------

```markdown

# CLAUDE.md

This file provides guidance to Claude Code (claude.ai/code) when working with code in this repository.

# DBHub Development Guidelines

DBHub is a Universal Database Gateway implementing the Model Context Protocol (MCP) server interface. It bridges MCP-compatible clients (Claude Desktop, Claude Code, Cursor) with various database systems.

## Commands

- Build: `pnpm run build` - Compiles TypeScript to JavaScript using tsup

- Start: `pnpm run start` - Runs the compiled server

- Dev: `pnpm run dev` - Runs server with tsx (no compilation needed)

- Test: `pnpm test` - Run all tests

- Test Watch: `pnpm test:watch` - Run tests in watch mode

- Integration Tests: `pnpm test:integration` - Run database integration tests (requires Docker)

- Pre-commit: `./scripts/setup-husky.sh` - Setup git hooks for automated testing

## Architecture Overview

The codebase follows a modular architecture centered around the MCP protocol:

```

src/
├── connectors/          # Database-specific implementations
│   ├── postgres/        # PostgreSQL connector
│   ├── mysql/           # MySQL connector
│   ├── mariadb/         # MariaDB connector
│   ├── sqlserver/       # SQL Server connector
│   └── sqlite/          # SQLite connector
├── resources/           # MCP resource handlers (DB exploration)
│   ├── schemas.ts       # Schema listing
│   ├── tables.ts        # Table exploration
│   ├── indexes.ts       # Index information
│   └── procedures.ts    # Stored procedures
├── tools/               # MCP tool handlers
│   └── execute-sql.ts   # SQL execution handler
├── prompts/             # AI prompt handlers
│   ├── sql-generator.ts # SQL generation
│   └── db-explainer.ts  # Database explanation
├── utils/               # Shared utilities
│   ├── dsn-obfuscate.ts      # DSN security
│   ├── response-formatter.ts # Output formatting
│   └── allowed-keywords.ts   # Read-only SQL validation
└── index.ts             # Entry point with transport handling

```

Key architectural patterns:

- **Connector Registry**: Dynamic registration system for database connectors

- **Transport Abstraction**: Support for both stdio (desktop tools) and HTTP (network clients)

- **Resource/Tool/Prompt Handlers**: Clean separation of MCP protocol concerns

- **Integration Test Base**: Shared test utilities for consistent connector testing

## Environment

- Copy `.env.example` to `.env` and configure for your database connection

- Two ways to configure:

  - Set `DSN` to a full connection string (recommended)

  - Set `DB_CONNECTOR_TYPE` to select a connector with its default DSN

- Transport options:

  - Set `--transport=stdio` (default) for stdio transport

  - Set `--transport=http` for streamable HTTP transport with HTTP server

- Demo mode: Use `--demo` flag for bundled SQLite employee database

- Read-only mode: Use `--readonly` flag to restrict to read-only SQL operations

## Database Connectors

- Add new connectors in `src/connectors/{db-type}/index.ts`

- Implement the `Connector` and `DSNParser` interfaces from `src/connectors/interface.ts`

- Register connector with `ConnectorRegistry.register(connector)`

- DSN Examples:

  - PostgreSQL: `postgres://user:password@localhost:5432/dbname?sslmode=disable`

  - MySQL: `mysql://user:password@localhost:3306/dbname?sslmode=disable`

  - MariaDB: `mariadb://user:password@localhost:3306/dbname?sslmode=disable`

  - SQL Server: `sqlserver://user:password@localhost:1433/dbname?sslmode=disable`

  - SQLite: `sqlite:///path/to/database.db` or `sqlite:///:memory:`

- SSL modes: `sslmode=disable` (no SSL) or `sslmode=require` (SSL without cert verification)

## Testing Approach

- Unit tests for individual components and utilities

- Integration tests using Testcontainers for real database testing

- All connectors have comprehensive integration test coverage

- Pre-commit hooks run related tests automatically

- Test specific databases: `pnpm test src/connectors/__tests__/{db-type}.integration.test.ts`

- SSH tunnel tests: `pnpm test src/connectors/__tests__/postgres-ssh.integration.test.ts`

## SSH Tunnel Support

DBHub supports SSH tunnels for secure database connections through bastion hosts:

- Configuration via command-line options: `--ssh-host`, `--ssh-port`, `--ssh-user`, `--ssh-password`, `--ssh-key`, `--ssh-passphrase`

- Configuration via environment variables: `SSH_HOST`, `SSH_PORT`, `SSH_USER`, `SSH_PASSWORD`, `SSH_KEY`, `SSH_PASSPHRASE`

- SSH config file support: Automatically reads from `~/.ssh/config` when using host aliases

- Implementation in `src/utils/ssh-tunnel.ts` using the `ssh2` library

- SSH config parsing in `src/utils/ssh-config-parser.ts` using the `ssh-config` library

- Automatic tunnel establishment when SSH config is detected

- Support for both password and key-based authentication

- Default SSH key detection (tries `~/.ssh/id_rsa`, `~/.ssh/id_ed25519`, etc.)

- Tunnel lifecycle managed by `ConnectorManager`

## Code Style

- TypeScript with strict mode enabled

- ES modules with `.js` extension in imports

- Group imports: Node.js core modules → third-party → local modules

- Use camelCase for variables/functions, PascalCase for classes/types

- Include explicit type annotations for function parameters/returns

- Use try/finally blocks with DB connections (always release clients)

- Prefer async/await over callbacks and Promise chains

- Format error messages consistently

- Use parameterized queries for DB operations

- Validate inputs with zod schemas

- Include fallbacks for environment variables

- Use descriptive variable/function names

- Keep functions focused and single-purpose

```
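
The connector registry pattern described in the guidelines above (each connector module registers itself when imported, and the server later picks a connector by DSN) can be sketched roughly as follows. This is a minimal illustration, not DBHub's actual code: `ConnectorSketch`, `DSNParserSketch`, `ConnectorRegistrySketch`, and `getConnectorForDSN` are hypothetical, simplified stand-ins for whatever `src/connectors/interface.ts` really defines.

```typescript
// Hypothetical, simplified shapes for illustration only.
interface DSNParserSketch {
  /** Returns true when this connector can handle the given DSN. */
  isValidDSN(dsn: string): boolean;
}

interface ConnectorSketch {
  id: string;
  dsnParser: DSNParserSketch;
}

class ConnectorRegistrySketch {
  private static readonly connectors = new Map<string, ConnectorSketch>();

  /** Called once by each connector module when it is imported. */
  static register(connector: ConnectorSketch): void {
    ConnectorRegistrySketch.connectors.set(connector.id, connector);
  }

  /** Finds the first registered connector whose parser accepts the DSN. */
  static getConnectorForDSN(dsn: string): ConnectorSketch | null {
    for (const connector of ConnectorRegistrySketch.connectors.values()) {
      if (connector.dsnParser.isValidDSN(dsn)) {
        return connector;
      }
    }
    return null;
  }
}

// Example registrations, as a connector module might perform at import time.
ConnectorRegistrySketch.register({
  id: "postgres",
  dsnParser: {
    isValidDSN: (dsn) => dsn.startsWith("postgres://") || dsn.startsWith("postgresql://"),
  },
});
ConnectorRegistrySketch.register({
  id: "sqlite",
  dsnParser: { isValidDSN: (dsn) => dsn.startsWith("sqlite://") },
});
```

With this shape, importing a connector module for its side effect is enough to make it discoverable, which is presumably why `src/index.ts` imports every connector before calling `main()`.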

--------------------------------------------------------------------------------

/src/types/sql.ts:

--------------------------------------------------------------------------------

```typescript

/**

* SQL dialect types supported by the application

*/

export type SQLDialect = "postgres" | "sqlite" | "mysql" | "mariadb" | "mssql" | "ansi";

```

--------------------------------------------------------------------------------

/pnpm-workspace.yaml:

--------------------------------------------------------------------------------

```yaml

packages:

- '.'

approvedBuilds:

- better-sqlite3

ignoredBuiltDependencies:

- cpu-features

- esbuild

- protobufjs

- ssh2

onlyBuiltDependencies:

- better-sqlite3

```

--------------------------------------------------------------------------------

/resources/employee-sqlite/show_elapsed.sql:

--------------------------------------------------------------------------------

```sql

-- SQLite doesn't have information_schema like MySQL

-- This is a simpler version that just shows when the script was run

SELECT 'Database loaded at ' || datetime('now', 'localtime') AS completion_time;

```

--------------------------------------------------------------------------------

/resources/employee-sqlite/load_department.sql:

--------------------------------------------------------------------------------

```sql

INSERT INTO department VALUES

('d001','Marketing'),

('d002','Finance'),

('d003','Human Resources'),

('d004','Production'),

('d005','Development'),

('d006','Quality Management'),

('d007','Sales'),

('d008','Research'),

('d009','Customer Service');

```

--------------------------------------------------------------------------------

/vitest.config.ts:

--------------------------------------------------------------------------------

```typescript

import { defineConfig } from 'vitest/config';

export default defineConfig({

test: {

globals: true,

environment: 'node',

include: ['src/**/*.{test,spec}.ts'],

coverage: {

provider: 'v8',

reporter: ['text', 'lcov'],

},

},

});

```

--------------------------------------------------------------------------------

/tsconfig.json:

--------------------------------------------------------------------------------

```json

{

"compilerOptions": {

"target": "ES2020",

"module": "NodeNext",

"moduleResolution": "NodeNext",

"esModuleInterop": true,

"outDir": "./dist",

"strict": true,

"lib": ["ES2020", "ES2021.Promise", "ES2022.Error"]

},

"include": ["src/**/*"]

}

```

--------------------------------------------------------------------------------

/scripts/setup-husky.sh:

--------------------------------------------------------------------------------

```bash

#!/bin/bash

# This script is used to set up Husky for development

# It should be run manually, not as part of production builds

echo "Setting up Husky for the project..."

npx husky init

# Create the pre-commit hook

cat > .husky/pre-commit << 'EOL'

#!/usr/bin/env sh

# Run lint-staged to check only the files that are being committed

pnpm lint-staged

# Run the test suite to ensure everything passes

pnpm test

EOL

chmod +x .husky/pre-commit

echo "Husky setup complete!"

```

--------------------------------------------------------------------------------

/.github/copilot-instructions.md:

--------------------------------------------------------------------------------

```markdown

## Database Access

This project provides an MCP server (DBHub) for secure SQL access to the development database.

AI agents can execute SQL queries. In read-only mode (recommended for production):

- `SELECT * FROM users LIMIT 5;`

- `SHOW TABLES;`

- `DESCRIBE table_name;`

In read-write mode (development/testing):

- `INSERT INTO users (name, email) VALUES ('John', 'john@example.com');`

- `UPDATE users SET status = 'active' WHERE id = 1;`

- `CREATE TABLE test_table (id INT PRIMARY KEY);`

Use `--readonly` flag to restrict to read-only operations for safety.

```

--------------------------------------------------------------------------------

/src/utils/allowed-keywords.ts:

--------------------------------------------------------------------------------

```typescript

import { ConnectorType } from "../connectors/interface.js";

/**

* Keywords that a SQL statement may start with in read-only mode.

* SELECT is allowed, along with other non-destructive statements.

*/

export const allowedKeywords: Record<ConnectorType, string[]> = {

postgres: ["select", "with", "explain", "analyze", "show"],

mysql: ["select", "with", "explain", "analyze", "show", "describe", "desc"],

mariadb: ["select", "with", "explain", "analyze", "show", "describe", "desc"],

sqlite: ["select", "with", "explain", "analyze", "pragma"],

sqlserver: ["select", "with", "explain", "showplan"],

};

```
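
One way a keyword table like this could be used is to compare the first word of a statement against the list for the active connector. The sketch below is an assumption about usage, not the check that `execute-sql.ts` actually performs: `ConnectorTypeSketch`, `allowedKeywordsSketch`, and `isReadOnlyStatement` are hypothetical names, and the table is copied from the file above so the block stands alone.

```typescript
// Hypothetical stand-in for ConnectorType from src/connectors/interface.ts.
type ConnectorTypeSketch = "postgres" | "mysql" | "mariadb" | "sqlite" | "sqlserver";

// Copy of the keyword table shown above.
const allowedKeywordsSketch: Record<ConnectorTypeSketch, string[]> = {
  postgres: ["select", "with", "explain", "analyze", "show"],
  mysql: ["select", "with", "explain", "analyze", "show", "describe", "desc"],
  mariadb: ["select", "with", "explain", "analyze", "show", "describe", "desc"],
  sqlite: ["select", "with", "explain", "analyze", "pragma"],
  sqlserver: ["select", "with", "explain", "showplan"],
};

/**
 * Returns true when the statement starts with an allowed keyword.
 * Deliberately naive: it does not strip leading comments and does not
 * split multi-statement input, both of which a real check would need.
 */
function isReadOnlyStatement(sql: string, connectorType: ConnectorTypeSketch): boolean {
  const firstWord = sql.trim().toLowerCase().split(/\s+/)[0] ?? "";
  return allowedKeywordsSketch[connectorType].includes(firstWord);
}
```

Because the lookup is per connector, `PRAGMA table_info(employee)` would pass for SQLite but be rejected for PostgreSQL under this sketch.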

--------------------------------------------------------------------------------

/src/index.ts:

--------------------------------------------------------------------------------

```typescript

#!/usr/bin/env node

// Import connector modules to register them

import "./connectors/postgres/index.js"; // Register PostgreSQL connector

import "./connectors/sqlserver/index.js"; // Register SQL Server connector

import "./connectors/sqlite/index.js"; // SQLite connector

import "./connectors/mysql/index.js"; // MySQL connector

import "./connectors/mariadb/index.js"; // MariaDB connector

// Import main function from server.ts

import { main } from "./server.js";

/**

* Entry point for the DBHub MCP Server

* Handles top-level exceptions and starts the server

*/

main().catch((error) => {

console.error("Fatal error:", error);

process.exit(1);

});

```

--------------------------------------------------------------------------------

/src/tools/index.ts:

--------------------------------------------------------------------------------

```typescript

import { McpServer } from "@modelcontextprotocol/sdk/server/mcp.js";

import { executeSqlToolHandler, executeSqlSchema } from "./execute-sql.js";

/**

* Register all tool handlers with the MCP server

* @param server - The MCP server instance

* @param id - Optional ID to suffix tool names (for Cursor multi-instance support)

*/

export function registerTools(server: McpServer, id?: string): void {

// Build tool name with optional suffix

const toolName = id ? `execute_sql_${id}` : "execute_sql";

// Tool to run a SQL query (restricted to read-only statements when --readonly is set)

server.tool(

toolName,

"Execute a SQL query on the current database",

executeSqlSchema,

executeSqlToolHandler

);

}

```

--------------------------------------------------------------------------------

/resources/employee-sqlite/load_dept_manager.sql:

--------------------------------------------------------------------------------

```sql

INSERT INTO dept_manager VALUES

(10002,'d001','1985-01-01','1991-10-01'),

(10039,'d001','1991-10-01','9999-01-01'),

(10085,'d002','1985-01-01','1989-12-17'),

(10114,'d002','1989-12-17','9999-01-01'),

(10183,'d003','1985-01-01','1992-03-21'),

(10228,'d003','1992-03-21','9999-01-01'),

(10303,'d004','1985-01-01','1988-09-09'),

(10344,'d004','1988-09-09','1992-08-02'),

(10386,'d004','1992-08-02','1996-08-30'),

(10420,'d004','1996-08-30','9999-01-01'),

(10511,'d005','1985-01-01','1992-04-25'),

(10567,'d005','1992-04-25','9999-01-01'),

(10725,'d006','1985-01-01','1989-05-06'),

(10765,'d006','1989-05-06','1991-09-12'),

(10800,'d006','1991-09-12','1994-06-28'),

(10854,'d006','1994-06-28','9999-01-01');

```

--------------------------------------------------------------------------------

/src/prompts/index.ts:

--------------------------------------------------------------------------------

```typescript

import { McpServer } from "@modelcontextprotocol/sdk/server/mcp.js";

import { sqlGeneratorPromptHandler, sqlGeneratorSchema } from "./sql-generator.js";

import { dbExplainerPromptHandler, dbExplainerSchema } from "./db-explainer.js";

/**

* Register all prompt handlers with the MCP server

*/

export function registerPrompts(server: McpServer): void {

// Register SQL Generator prompt

server.prompt(

"generate_sql",

"Generate SQL queries from natural language descriptions",

sqlGeneratorSchema,

sqlGeneratorPromptHandler

);

// Register Database Explainer prompt

server.prompt(

"explain_db",

"Get explanations about database tables, columns, and structures",

dbExplainerSchema,

dbExplainerPromptHandler

);

}

```

--------------------------------------------------------------------------------

/src/resources/schemas.ts:

--------------------------------------------------------------------------------

```typescript

import { ConnectorManager } from "../connectors/manager.js";

import {

createResourceSuccessResponse,

createResourceErrorResponse,

} from "../utils/response-formatter.js";

/**

* Schemas resource handler

* Returns a list of all schemas in the database

*/

export async function schemasResourceHandler(uri: URL, _extra: any) {

const connector = ConnectorManager.getCurrentConnector();

try {

const schemas = await connector.getSchemas();

// Prepare response data

const responseData = {

schemas: schemas,

count: schemas.length,

};

// Use the utility to create a standardized response

return createResourceSuccessResponse(uri.href, responseData);

} catch (error) {

return createResourceErrorResponse(

uri.href,

`Error retrieving database schemas: ${(error as Error).message}`,

"SCHEMAS_RETRIEVAL_ERROR"

);

}

}

```

--------------------------------------------------------------------------------

/src/types/ssh.ts:

--------------------------------------------------------------------------------

```typescript

/**

* SSH Tunnel Configuration Types

*/

export interface SSHTunnelConfig {

/** SSH server hostname */

host: string;

/** SSH server port (default: 22) */

port?: number;

/** SSH username */

username: string;

/** SSH password (for password authentication) */

password?: string;

/** Path to SSH private key file */

privateKey?: string;

/** Passphrase for SSH private key */

passphrase?: string;

}

export interface SSHTunnelOptions {

/** Target database host (as seen from SSH server) */

targetHost: string;

/** Target database port */

targetPort: number;

/** Local port to bind the tunnel (0 for dynamic allocation) */

localPort?: number;

}

export interface SSHTunnelInfo {

/** Local port where the tunnel is listening */

localPort: number;

/** Original target host */

targetHost: string;

/** Original target port */

targetPort: number;

}

```
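
Since `password` and `privateKey` are both optional in `SSHTunnelConfig`, the type alone does not guarantee that a usable authentication method is present. A runtime check along the following lines could cover that gap. This is only a sketch under stated assumptions: `validateSSHConfigSketch` is a hypothetical helper, not a function from `src/utils/ssh-tunnel.ts`, and the interface is repeated from the file above so the block stands alone.

```typescript
// Repeated from src/types/ssh.ts so this block is self-contained.
interface SSHTunnelConfig {
  host: string;
  port?: number;
  username: string;
  password?: string;
  privateKey?: string;
  passphrase?: string;
}

/** Hypothetical helper: returns a list of problems, empty when the config looks usable. */
function validateSSHConfigSketch(config: SSHTunnelConfig): string[] {
  const problems: string[] = [];
  if (!config.host) {
    problems.push("host is required");
  }
  if (!config.username) {
    problems.push("username is required");
  }
  if (!config.password && !config.privateKey) {
    problems.push("either password or privateKey is required");
  }
  if (config.passphrase && !config.privateKey) {
    problems.push("passphrase was given without privateKey");
  }
  if (
    config.port !== undefined &&
    (!Number.isInteger(config.port) || config.port < 1 || config.port > 65535)
  ) {
    problems.push("port must be an integer between 1 and 65535");
  }
  return problems;
}
```

Returning a list rather than throwing on the first problem lets a caller report every configuration issue at once.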

--------------------------------------------------------------------------------

/tsup.config.ts:

--------------------------------------------------------------------------------

```typescript

import { defineConfig } from 'tsup';

import fs from 'fs';

import path from 'path';

export default defineConfig({

entry: ['src/index.ts'],

format: ['esm'],

dts: true,

clean: true,

outDir: 'dist',

// Copy the employee-sqlite resources to dist

async onSuccess() {

// Create target directory

const targetDir = path.join('dist', 'resources', 'employee-sqlite');

fs.mkdirSync(targetDir, { recursive: true });

// Copy all SQL files from resources/employee-sqlite to dist/resources/employee-sqlite

const sourceDir = path.join('resources', 'employee-sqlite');

const files = fs.readdirSync(sourceDir);

for (const file of files) {

if (file.endsWith('.sql')) {

const sourcePath = path.join(sourceDir, file);

const targetPath = path.join(targetDir, file);

fs.copyFileSync(sourcePath, targetPath);

console.log(`Copied ${sourcePath} to ${targetPath}`);

}

}

},

});

```

--------------------------------------------------------------------------------

/Dockerfile:

--------------------------------------------------------------------------------

```dockerfile

FROM node:22-alpine AS builder

WORKDIR /app

# Copy package.json and pnpm-lock.yaml

COPY package.json pnpm-lock.yaml ./

# Install pnpm

RUN corepack enable && corepack prepare pnpm@latest --activate

# Install dependencies

RUN pnpm install

# Copy source code

COPY . .

# Build the application

RUN pnpm run build

# Production stage

FROM node:22-alpine

WORKDIR /app

# Copy only production files

COPY --from=builder /app/package.json /app/pnpm-lock.yaml ./

# Install pnpm

RUN corepack enable && corepack prepare pnpm@latest --activate

RUN pnpm pkg set pnpm.onlyBuiltDependencies[0]=better-sqlite3

RUN pnpm add better-sqlite3

RUN node -e 'new require("better-sqlite3")(":memory:")'

# Install production dependencies only

RUN pnpm install --prod

# Copy built application from builder stage

COPY --from=builder /app/dist ./dist

# Expose ports

EXPOSE 8080

# Set environment variables

ENV NODE_ENV=production

# Run the server

ENTRYPOINT ["node", "dist/index.js"]

```

--------------------------------------------------------------------------------

/.github/workflows/run-tests.yml:

--------------------------------------------------------------------------------

```yaml

name: Run Tests

on:
  pull_request:
    branches: [ main ]
    # Run when PR is opened, synchronized, or reopened
    types: [opened, synchronize, reopened]
  # Also allow manual triggering
  workflow_dispatch:

jobs:
  test:
    name: Run Test Suite
    runs-on: ubuntu-latest

    steps:
      - name: Checkout code
        uses: actions/checkout@v3

      - name: Install pnpm
        uses: pnpm/action-setup@v2
        with:
          version: 8
          run_install: false

      - name: Setup Node.js
        uses: actions/setup-node@v3
        with:
          node-version: '20'
          cache: 'pnpm'

      - name: Get pnpm store directory
        id: pnpm-cache
        shell: bash
        run: |
          echo "STORE_PATH=$(pnpm store path)" >> $GITHUB_OUTPUT

      - name: Setup pnpm cache
        uses: actions/cache@v3
        with:
          path: ${{ steps.pnpm-cache.outputs.STORE_PATH }}
          key: ${{ runner.os }}-pnpm-store-${{ hashFiles('**/pnpm-lock.yaml') }}
          restore-keys: |
            ${{ runner.os }}-pnpm-store-

      - name: Install dependencies
        run: pnpm install

      - name: Run tests
        run: pnpm test

```

--------------------------------------------------------------------------------

/src/resources/tables.ts:

--------------------------------------------------------------------------------

```typescript

import { ConnectorManager } from "../connectors/manager.js";

import {

createResourceSuccessResponse,

createResourceErrorResponse,

} from "../utils/response-formatter.js";

/**

* Tables resource handler

* Returns a list of all tables in the database or within a specific schema

*/

export async function tablesResourceHandler(uri: URL, variables: any, _extra: any) {

const connector = ConnectorManager.getCurrentConnector();

// Extract the schema name from URL variables if present

const schemaName =

variables && variables.schemaName

? Array.isArray(variables.schemaName)

? variables.schemaName[0]

: variables.schemaName

: undefined;

try {

// If a schema name was provided, verify that it exists

if (schemaName) {

const availableSchemas = await connector.getSchemas();

if (!availableSchemas.includes(schemaName)) {

return createResourceErrorResponse(

uri.href,

`Schema '${schemaName}' does not exist or cannot be accessed`,

"SCHEMA_NOT_FOUND"

);

}

}

// Get tables with optional schema filter

const tableNames = await connector.getTables(schemaName);

// Prepare response data

const responseData = {

tables: tableNames,

count: tableNames.length,

schema: schemaName,

};

// Use the utility to create a standardized response

return createResourceSuccessResponse(uri.href, responseData);

} catch (error) {

return createResourceErrorResponse(

uri.href,

`Error retrieving tables: ${(error as Error).message}`,

"TABLES_RETRIEVAL_ERROR"

);

}

}

```

--------------------------------------------------------------------------------

/resources/employee-sqlite/object.sql:

--------------------------------------------------------------------------------

```sql

-- SQLite implementation of views and functions

-- This is simplified compared to the MySQL version

-- Drop views if they exist

DROP VIEW IF EXISTS v_full_employee;

DROP VIEW IF EXISTS v_full_department;

DROP VIEW IF EXISTS emp_dept_current;

-- Create helper view to get current department for employees

CREATE VIEW emp_dept_current AS

SELECT

e.emp_no,

de.dept_no

FROM

employee e

JOIN

dept_emp de ON e.emp_no = de.emp_no

JOIN (

SELECT

emp_no,

MAX(from_date) AS max_from_date

FROM

dept_emp

GROUP BY

emp_no

) latest ON de.emp_no = latest.emp_no AND de.from_date = latest.max_from_date;

-- View that shows employee with their current department name

CREATE VIEW v_full_employee AS

SELECT

e.emp_no,

e.first_name,

e.last_name,

e.birth_date,

e.gender,

e.hire_date,

d.dept_name AS department

FROM

employee e

LEFT JOIN

emp_dept_current edc ON e.emp_no = edc.emp_no

LEFT JOIN

department d ON edc.dept_no = d.dept_no;

-- View to get current managers for departments

CREATE VIEW current_managers AS

SELECT

d.dept_no,

d.dept_name,

e.first_name || ' ' || e.last_name AS manager

FROM

department d

LEFT JOIN

dept_manager dm ON d.dept_no = dm.dept_no

JOIN (

SELECT

dept_no,

MAX(from_date) AS max_from_date

FROM

dept_manager

GROUP BY

dept_no

) latest ON dm.dept_no = latest.dept_no AND dm.from_date = latest.max_from_date

LEFT JOIN

employee e ON dm.emp_no = e.emp_no;

-- Create a view showing departments with their managers

CREATE VIEW v_full_department AS

SELECT

dept_no,

dept_name,

manager

FROM

current_managers;

```

--------------------------------------------------------------------------------

/package.json:

--------------------------------------------------------------------------------

```json

{

"name": "dbhub",

"version": "0.11.5",

"description": "Universal Database MCP Server",

"repository": {

"type": "git",

"url": "https://github.com/bytebase/dbhub.git"

},

"main": "dist/index.js",

"type": "module",

"bin": {

"dbhub": "dist/index.js"

},

"files": [

"dist",

"LICENSE",

"README.md"

],

"scripts": {

"build": "tsup",

"start": "node dist/index.js",

"dev": "NODE_ENV=development tsx src/index.ts",

"crossdev": "cross-env NODE_ENV=development tsx src/index.ts",

"test": "vitest run",

"test:watch": "vitest",

"test:integration": "vitest run --testNamePattern='Integration Tests'",

"prepare": "[[ \"$NODE_ENV\" != \"production\" ]] && husky || echo \"Skipping husky in production\"",

"pre-commit": "lint-staged"

},

"keywords": [],

"author": "",

"license": "MIT",

"dependencies": {

"@azure/identity": "^4.8.0",

"@modelcontextprotocol/sdk": "^1.12.1",

"better-sqlite3": "^11.9.0",

"dotenv": "^16.4.7",

"express": "^4.18.2",

"mariadb": "^3.4.0",

"mssql": "^11.0.1",

"mysql2": "^3.13.0",

"pg": "^8.13.3",

"ssh-config": "^5.0.3",

"ssh2": "^1.16.0",

"zod": "^3.24.2"

},

"devDependencies": {

"@testcontainers/mariadb": "^11.0.3",

"@testcontainers/mssqlserver": "^11.0.3",

"@testcontainers/mysql": "^11.0.3",

"@testcontainers/postgresql": "^11.0.3",

"@types/better-sqlite3": "^7.6.12",

"@types/express": "^4.17.21",

"@types/mssql": "^9.1.7",

"@types/node": "^22.13.10",

"@types/pg": "^8.11.11",

"@types/ssh2": "^1.15.5",

"cross-env": "^7.0.3",

"husky": "^9.0.11",

"lint-staged": "^15.2.2",

"prettier": "^3.5.3",

"testcontainers": "^11.0.3",

"ts-node": "^10.9.2",

"tsup": "^8.4.0",

"tsx": "^4.19.3",

"typescript": "^5.8.2",

"vitest": "^1.6.1"

},

"compilerOptions": {

"target": "ES2020",

"module": "NodeNext",

"moduleResolution": "NodeNext",

"esModuleInterop": true,

"strict": true,

"outDir": "dist",

"rootDir": "src"

},

"include": [

"src/**/*"

],

"lint-staged": {

"*.{js,ts}": "vitest related --run"

}

}

```

--------------------------------------------------------------------------------

/src/resources/index.ts:

--------------------------------------------------------------------------------

```typescript

import { McpServer, ResourceTemplate } from "@modelcontextprotocol/sdk/server/mcp.js";

import { tablesResourceHandler } from "./tables.js";

import { tableStructureResourceHandler } from "./schema.js";

import { schemasResourceHandler } from "./schemas.js";

import { indexesResourceHandler } from "./indexes.js";

import { proceduresResourceHandler, procedureDetailResourceHandler } from "./procedures.js";

// Export all resource handlers

export { tablesResourceHandler } from "./tables.js";

export { tableStructureResourceHandler } from "./schema.js";

export { schemasResourceHandler } from "./schemas.js";

export { indexesResourceHandler } from "./indexes.js";

export { proceduresResourceHandler, procedureDetailResourceHandler } from "./procedures.js";

/**

* Register all resource handlers with the MCP server

*/

export function registerResources(server: McpServer): void {

// Resource for listing all schemas

server.resource("schemas", "db://schemas", schemasResourceHandler);

// Allow listing tables within a specific schema

server.resource(

"tables_in_schema",

new ResourceTemplate("db://schemas/{schemaName}/tables", { list: undefined }),

tablesResourceHandler

);

// Resource for getting table structure within a specific database schema

server.resource(

"table_structure_in_schema",

new ResourceTemplate("db://schemas/{schemaName}/tables/{tableName}", { list: undefined }),

tableStructureResourceHandler

);

// Resource for getting indexes for a table within a specific database schema

server.resource(

"indexes_in_table",

new ResourceTemplate("db://schemas/{schemaName}/tables/{tableName}/indexes", {

list: undefined,

}),

indexesResourceHandler

);

// Resource for listing stored procedures within a schema

server.resource(

"procedures_in_schema",

new ResourceTemplate("db://schemas/{schemaName}/procedures", { list: undefined }),

proceduresResourceHandler

);

// Resource for getting procedure detail within a schema

server.resource(

"procedure_detail_in_schema",

new ResourceTemplate("db://schemas/{schemaName}/procedures/{procedureName}", {

list: undefined,

}),

procedureDetailResourceHandler

);

}

```
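
The resource templates registered above use `{name}` placeholders, and each handler receives the matched values as `variables`. The sketch below illustrates that idea with a small regex-based matcher. It is not how the MCP SDK's `ResourceTemplate` is implemented (the SDK does its own URI template matching); `matchUriTemplateSketch` is a hypothetical helper that only handles simple path-segment placeholders.

```typescript
/**
 * Hypothetical helper: matches a URI against a template with {name} placeholders.
 * Returns the extracted variables, or null when the URI does not match.
 */
function matchUriTemplateSketch(template: string, uri: string): Record<string, string> | null {
  const names: string[] = [];
  const pattern = template
    // Escape regex metacharacters, leaving the braces for the next step.
    .replace(/[.*+?^$()|[\]\\]/g, "\\$&")
    // Turn each {name} into a capture group that matches one path segment.
    .replace(/\{(\w+)\}/g, (_placeholder: string, name: string) => {
      names.push(name);
      return "([^/]+)";
    });

  const match = new RegExp(`^${pattern}$`).exec(uri);
  if (match === null) {
    return null;
  }

  const variables: Record<string, string> = {};
  names.forEach((name, index) => {
    variables[name] = decodeURIComponent(match[index + 1] ?? "");
  });
  return variables;
}
```

For example, matching `db://schemas/public/tables/users` against `db://schemas/{schemaName}/tables/{tableName}` yields `schemaName` and `tableName`, which is the kind of input `tableStructureResourceHandler` works from.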

--------------------------------------------------------------------------------

/src/resources/indexes.ts:

--------------------------------------------------------------------------------

```typescript

import { ConnectorManager } from "../connectors/manager.js";

import {

createResourceSuccessResponse,

createResourceErrorResponse,

} from "../utils/response-formatter.js";

/**

* Indexes resource handler

* Returns information about indexes on a table

*/

export async function indexesResourceHandler(uri: URL, variables: any, _extra: any) {

const connector = ConnectorManager.getCurrentConnector();

// Extract schema and table names from URL variables

const schemaName =

variables && variables.schemaName

? Array.isArray(variables.schemaName)

? variables.schemaName[0]

: variables.schemaName

: undefined;

const tableName =

variables && variables.tableName

? Array.isArray(variables.tableName)

? variables.tableName[0]

: variables.tableName

: undefined;

if (!tableName) {

return createResourceErrorResponse(uri.href, "Table name is required", "MISSING_TABLE_NAME");

}

try {

// If a schema name was provided, verify that it exists

if (schemaName) {

const availableSchemas = await connector.getSchemas();

if (!availableSchemas.includes(schemaName)) {

return createResourceErrorResponse(

uri.href,

`Schema '${schemaName}' does not exist or cannot be accessed`,

"SCHEMA_NOT_FOUND"

);

}

}

// Check if table exists

const tableExists = await connector.tableExists(tableName, schemaName);

if (!tableExists) {

return createResourceErrorResponse(

uri.href,

`Table '${tableName}' does not exist in schema '${schemaName || "default"}'`,

"TABLE_NOT_FOUND"

);

}

// Get indexes for the table

const indexes = await connector.getTableIndexes(tableName, schemaName);

// Prepare response data

const responseData = {

table: tableName,

schema: schemaName,

indexes: indexes,

count: indexes.length,

};

// Use the utility to create a standardized response

return createResourceSuccessResponse(uri.href, responseData);

} catch (error) {

return createResourceErrorResponse(

uri.href,

`Error retrieving indexes: ${(error as Error).message}`,

"INDEXES_RETRIEVAL_ERROR"

);

}

}

```
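
Both `tables.ts` and `indexes.ts` repeat the same nested conditional to pull a single value out of `variables`, where a value may arrive as either a string or an array of strings. A small shared helper could express that once. The sketch below is a possible refactoring, not code from the repository: `TemplateVariablesSketch` and `firstVariable` are hypothetical names.

```typescript
// Hypothetical type for the variables object passed to resource handlers.
type TemplateVariablesSketch = Record<string, string | string[]> | undefined;

/**
 * Hypothetical helper: returns the first value for `key`, or undefined
 * when the variable is missing or empty. Mirrors the inline ternaries above.
 */
function firstVariable(variables: TemplateVariablesSketch, key: string): string | undefined {
  const value = variables?.[key];
  if (value === undefined || value === "") {
    return undefined;
  }
  return Array.isArray(value) ? value[0] : value;
}
```

A handler could then write `const schemaName = firstVariable(variables, "schemaName");` in place of the five-line conditional expression.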

--------------------------------------------------------------------------------

/src/config/demo-loader.ts:

--------------------------------------------------------------------------------

```typescript

/**

* Demo data loader for SQLite in-memory database

*

* This module loads the sample employee database into the SQLite in-memory database

* when the --demo flag is specified.

*/

import fs from "fs";

import path from "path";

import { fileURLToPath } from "url";

// Create __dirname equivalent for ES modules

const __filename = fileURLToPath(import.meta.url);

const __dirname = path.dirname(__filename);

// Path to sample data files - will be bundled with the package

// Try different paths to find the SQL files in development or production

let DEMO_DATA_DIR: string;

const projectRootPath = path.join(__dirname, "..", "..", "..");

const projectResourcesPath = path.join(projectRootPath, "resources", "employee-sqlite");

const distPath = path.join(__dirname, "resources", "employee-sqlite");

// First try the project root resources directory (for development)

if (fs.existsSync(projectResourcesPath)) {

DEMO_DATA_DIR = projectResourcesPath;

}

// Then try dist directory (for production)

else if (fs.existsSync(distPath)) {

DEMO_DATA_DIR = distPath;

}

// Fallback to a relative path from the current directory

else {

DEMO_DATA_DIR = path.join(process.cwd(), "resources", "employee-sqlite");

if (!fs.existsSync(DEMO_DATA_DIR)) {

throw new Error(`Could not find employee-sqlite resources in any of the expected locations:

- ${projectResourcesPath}

- ${distPath}

- ${DEMO_DATA_DIR}`);

}

}

/**

* Load SQL file contents

*/

export function loadSqlFile(fileName: string): string {

const filePath = path.join(DEMO_DATA_DIR, fileName);

return fs.readFileSync(filePath, "utf8");

}

/**

* Get SQLite DSN for in-memory database

*/

export function getInMemorySqliteDSN(): string {

return "sqlite:///:memory:";

}

/**

* Load SQL files sequentially

*/

export function getSqliteInMemorySetupSql(): string {

// First, load the schema

let sql = loadSqlFile("employee.sql");

// Replace .read directives with the actual file contents

// This is necessary because in-memory SQLite can't use .read

const readRegex = /\.read\s+([a-zA-Z0-9_]+\.sql)/g;

let match;

while ((match = readRegex.exec(sql)) !== null) {

const includePath = match[1];

const includeContent = loadSqlFile(includePath);

// Replace the .read line with the file contents

sql = sql.replace(match[0], includeContent);

}

return sql;

}

```
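
The `.read` expansion in `getSqliteInMemorySetupSql` can also be written with a replacer callback, which avoids mutating the string while a global regex is iterating over it and keeps `$` sequences in the included SQL from being treated as replacement patterns. The sketch below shows that variant under stated assumptions: `inlineReadDirectives` is a hypothetical function, and an in-memory `Map` stands in for reading files from `DEMO_DATA_DIR`.

```typescript
/**
 * Hypothetical variant of the .read expansion shown above.
 * `files` maps a file name to its SQL content (a stand-in for the file system).
 */
function inlineReadDirectives(entrySql: string, files: Map<string, string>): string {
  const readRegex = /\.read\s+([a-zA-Z0-9_]+\.sql)/g;
  // The callback's return value is inserted literally, so "$" in SQL is safe.
  return entrySql.replace(readRegex, (_directive: string, fileName: string) => {
    const content = files.get(fileName);
    if (content === undefined) {
      throw new Error(`Missing include file: ${fileName}`);
    }
    return content;
  });
}

// Example input modeled on the demo database layout.
const demoFiles = new Map<string, string>([
  ["load_department.sql", "INSERT INTO department VALUES ('d001','Marketing');"],
]);
const expandedSql = inlineReadDirectives(
  "CREATE TABLE department (dept_no TEXT, dept_name TEXT);\n.read load_department.sql\n",
  demoFiles
);
```

Unlike the loop above, this version makes a single pass, so a `.read` directive inside an included file would be left as-is.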

--------------------------------------------------------------------------------

/src/utils/__tests__/ssh-tunnel.test.ts:

--------------------------------------------------------------------------------

```typescript

import { describe, it, expect } from 'vitest';

import { SSHTunnel } from '../ssh-tunnel.js';

import type { SSHTunnelConfig } from '../../types/ssh.js';

describe('SSHTunnel', () => {

describe('Initial State', () => {

it('should have initial state as disconnected', () => {

const tunnel = new SSHTunnel();

expect(tunnel.getIsConnected()).toBe(false);

expect(tunnel.getTunnelInfo()).toBeNull();

});

});

describe('Tunnel State Management', () => {

it('should prevent establishing multiple tunnels', async () => {

const tunnel = new SSHTunnel();

// Set tunnel as connected (simulating a connected state)

(tunnel as any).isConnected = true;

const config: SSHTunnelConfig = {

host: 'ssh.example.com',

username: 'testuser',

password: 'testpass',

};

const options = {

targetHost: 'database.local',

targetPort: 5432,

};

await expect(tunnel.establish(config, options)).rejects.toThrow(

'SSH tunnel is already established'

);

});

it('should handle close when not connected', async () => {

const tunnel = new SSHTunnel();

// Should not throw when closing disconnected tunnel

await expect(tunnel.close()).resolves.toBeUndefined();

});

});

describe('Configuration Validation', () => {

it('should validate authentication requirements', () => {

// Test that config validation logic exists

const validConfigWithPassword: SSHTunnelConfig = {

host: 'ssh.example.com',

username: 'testuser',

password: 'testpass',

};

const validConfigWithKey: SSHTunnelConfig = {

host: 'ssh.example.com',

username: 'testuser',

privateKey: '/path/to/key',

};

const validConfigWithKeyAndPassphrase: SSHTunnelConfig = {

host: 'ssh.example.com',

port: 2222,

username: 'testuser',

privateKey: '/path/to/key',

passphrase: 'keypassphrase',

};

// These should be valid configurations

expect(validConfigWithPassword.host).toBe('ssh.example.com');

expect(validConfigWithPassword.username).toBe('testuser');

expect(validConfigWithPassword.password).toBe('testpass');

expect(validConfigWithKey.privateKey).toBe('/path/to/key');

expect(validConfigWithKeyAndPassphrase.passphrase).toBe('keypassphrase');

expect(validConfigWithKeyAndPassphrase.port).toBe(2222);

});

});

});

```
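
The "Configuration Validation" test above only confirms that three config shapes type-check and hold their values. A runtime check of the authentication requirement might look like the sketch below. Everything in it is an assumption made for illustration: the `SSHTunnelConfig` shape is inferred from the fields used in the test, `validateSSHAuth` is a hypothetical helper that does not appear in this excerpt, and the rule that either a password or a private key must be present is a guess at the intended requirement.

```typescript
// Shape inferred from the fields used in the test above (assumption).
interface SSHTunnelConfig {
  host: string;
  port?: number;
  username: string;
  password?: string;
  privateKey?: string;
  passphrase?: string;
}

// Hypothetical helper: returns a list of problems, empty when the config
// looks usable.
function validateSSHAuth(config: SSHTunnelConfig): string[] {
  const problems: string[] = [];
  if (!config.host) {
    problems.push("host is required");
  }
  if (!config.username) {
    problems.push("username is required");
  }
  if (!config.password && !config.privateKey) {
    problems.push("either password or privateKey is required");
  }
  if (config.passphrase && !config.privateKey) {
    problems.push("passphrase requires privateKey");
  }
  return problems;
}

console.log(validateSSHAuth({ host: "ssh.example.com", username: "testuser" }));
```

Returning a list rather than throwing on the first problem lets a caller report every issue at once; a test could then assert on the exact messages.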

--------------------------------------------------------------------------------

/src/utils/dsn-obfuscate.ts:

--------------------------------------------------------------------------------

```typescript

import type { SSHTunnelConfig } from '../types/ssh.js';

/**

* Obfuscates the password in a DSN string for logging purposes

* @param dsn The original DSN string

* @returns DSN string with the password replaced by at most 8 asterisks

* (passwords shorter than 8 characters keep their length)

*/

export function obfuscateDSNPassword(dsn: string): string {

if (!dsn) {

return dsn;

}

try {

// Handle different DSN formats

const protocolMatch = dsn.match(/^([^:]+):/);

if (!protocolMatch) {

return dsn; // Not a recognizable DSN format

}

const protocol = protocolMatch[1];

// For SQLite file paths, don't obfuscate

if (protocol === 'sqlite') {

return dsn;

}

// For other databases, look for password pattern: ://user:password@host

// We need to be careful with @ in passwords, so we'll find the last @ that separates password from host

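const separatorIndex = dsn.indexOf('://');

if (separatorIndex === -1) {

return dsn;

}

// Take everything after the first "://" so that a later "://" (for example

// inside a query parameter) does not truncate the result

const protocolPart = dsn.substring(separatorIndex + 3);

if (!protocolPart) {

return dsn;

}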

// Find the last @ to separate credentials from host

const lastAtIndex = protocolPart.lastIndexOf('@');

if (lastAtIndex === -1) {

return dsn; // No @ found, no password to obfuscate

}

const credentialsPart = protocolPart.substring(0, lastAtIndex);

const hostPart = protocolPart.substring(lastAtIndex + 1);

// Check if there's a colon in credentials (user:password format)

const colonIndex = credentialsPart.indexOf(':');

if (colonIndex === -1) {

return dsn; // No colon found, no password to obfuscate

}

const username = credentialsPart.substring(0, colonIndex);

const password = credentialsPart.substring(colonIndex + 1);

const obfuscatedPassword = '*'.repeat(Math.min(password.length, 8));

return `${protocol}://${username}:${obfuscatedPassword}@${hostPart}`;

} catch (error) {

// If any error occurs during obfuscation, return the original DSN

// This ensures we don't break functionality due to obfuscation issues

return dsn;

}

}

/**

* Obfuscates sensitive information in SSH configuration for logging

* @param config The SSH tunnel configuration

* @returns SSH config with sensitive data replaced by asterisks

*/

export function obfuscateSSHConfig(config: SSHTunnelConfig): Partial<SSHTunnelConfig> {

const obfuscated: Partial<SSHTunnelConfig> = {

host: config.host,

port: config.port,

username: config.username,

};

if (config.password) {

obfuscated.password = '*'.repeat(8);

}

if (config.privateKey) {

obfuscated.privateKey = config.privateKey; // Keep path as-is

}

if (config.passphrase) {

obfuscated.passphrase = '*'.repeat(8);

}

return obfuscated;

}

```
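
To make the masking rules above concrete, here is a small runnable sketch. It restates the logic of `obfuscateDSNPassword` in condensed form only so the example is self-contained; the sample DSNs, host names, and passwords are made up for illustration.

```typescript
// Condensed sketch of the masking rules from the listing above, repeated
// here only so the example runs on its own.
function obfuscateDSNPassword(dsn: string): string {
  const protocolMatch = dsn.match(/^([^:]+):/);
  if (!protocolMatch || protocolMatch[1] === "sqlite") {
    return dsn; // unrecognized format or SQLite file path: leave untouched
  }
  const separatorIndex = dsn.indexOf("://");
  if (separatorIndex === -1) {
    return dsn;
  }
  const rest = dsn.substring(separatorIndex + 3);
  // The last "@" separates credentials from the host, so "@" may appear
  // inside the password.
  const lastAt = rest.lastIndexOf("@");
  if (lastAt === -1) {
    return dsn;
  }
  const credentials = rest.substring(0, lastAt);
  const colon = credentials.indexOf(":");
  if (colon === -1) {
    return dsn; // user only, no password
  }
  const username = credentials.substring(0, colon);
  const password = credentials.substring(colon + 1);
  const mask = "*".repeat(Math.min(password.length, 8));
  return `${protocolMatch[1]}://${username}:${mask}@${rest.substring(lastAt + 1)}`;
}

// Made-up sample DSNs.
const samples = [
  "postgres://user:secret@localhost:5432/db",
  "mysql://root:p@ss@db.internal:3306/app",
  "postgres://user:averyveryverylongpassword@h/db",
  "postgres://user@host/db",
  "sqlite:///tmp/demo.db",
];

for (const dsn of samples) {
  console.log(obfuscateDSNPassword(dsn));
}
```

Note that the mask is capped at eight characters but not padded, so a short password still reveals its length in logs. Using a fixed-width mask, as `obfuscateSSHConfig` does, would avoid that.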

--------------------------------------------------------------------------------

/src/resources/schema.ts:

--------------------------------------------------------------------------------

```typescript

import { ConnectorManager } from "../connectors/manager.js";

import { Variables } from "@modelcontextprotocol/sdk/shared/uriTemplate.js";

import {

createResourceSuccessResponse,

createResourceErrorResponse,

} from "../utils/response-formatter.js";

/**

* Schema resource handler

* Returns schema information for a specific table, optionally within a specific database schema

*/

export async function tableStructureResourceHandler(uri: URL, variables: Variables, _extra: any) {

const connector = ConnectorManager.getCurrentConnector();

// Handle tableName which could be a string or string array from URL template

const tableName = Array.isArray(variables.tableName)

? variables.tableName[0]

: (variables.tableName as string);

// Extract schemaName if present

const schemaName = variables.schemaName

? Array.isArray(variables.schemaName)

? variables.schemaName[0]

: (variables.schemaName as string)

: undefined;

try {

// If a schema name was provided, verify that it exists

if (schemaName) {

const availableSchemas = await connector.getSchemas();

if (!availableSchemas.includes(schemaName)) {

return createResourceErrorResponse(

uri.href,

`Schema '${schemaName}' does not exist or cannot be accessed`,

"SCHEMA_NOT_FOUND"

);

}

}

// Check if the table exists in the schema before getting its structure

const tableExists = await connector.tableExists(tableName, schemaName);

if (!tableExists) {

const schemaInfo = schemaName ? ` in schema '${schemaName}'` : "";

return createResourceErrorResponse(

uri.href,

`Table '${tableName}'${schemaInfo} does not exist or cannot be accessed`,

"TABLE_NOT_FOUND"

);

}