# Directory Structure

```

├── .ci

│ ├── check-markdownfmt.sh

│ ├── check-metadata.sh

│ ├── check-pr-no-readme.sh

│ ├── check-required-files.sh

│ ├── check-short.sh

│ ├── check-ymlfmt.sh

│ └── get-markdownfmt.sh

├── .common-templates

│ ├── maintainer-community.md

│ ├── maintainer-docker.md

│ ├── maintainer-hashicorp.md

│ └── maintainer-influxdata.md

├── .dockerignore

├── .github

│ └── workflows

│   └── ci.yml

├── .template-helpers

│ ├── arches.sh

│ ├── autogenerated-warning.md

│ ├── compose.md

│ ├── generate-dockerfile-links-partial.sh

│ ├── generate-dockerfile-links-partial.tmpl

│ ├── get-help.md

│ ├── issues.md

│ ├── license-common.md

│ ├── template.md

│ ├── variant-alpine.md

│ ├── variant-default-buildpack-deps.md

│ ├── variant-default-debian.md

│ ├── variant-default-ubuntu.md

│ ├── variant-onbuild.md

│ ├── variant-slim.md

│ ├── variant-windowsservercore.md

│ ├── variant.md

│ └── variant.sh

├── adminer

│ ├── compose.yaml

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── aerospike

│ ├── content.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── almalinux

│ ├── content.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── alpine

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── alt

│ ├── content.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── amazoncorretto

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── amazonlinux

│ ├── content.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── api-firewall

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.svg

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── arangodb

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── archlinux

│ ├── content.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── backdrop

│ ├── compose.yaml

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── bash

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── bonita

│ ├── compose.yaml

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── buildpack-deps

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── busybox

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ ├── README.md

│ ├── variant-glibc.md

│ ├── variant-musl.md

│ ├── variant-uclibc.md

│ └── variant.md

├── caddy

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── license.md

│ ├── logo-120.png

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── cassandra

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── chronograf

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── cirros

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── clearlinux

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── clefos

│ ├── content.md

│ ├── deprecated.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── clickhouse

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.svg

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── clojure

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── composer

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── convertigo

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── couchbase

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── couchdb

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── crate

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.svg

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── dart

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.svg

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── debian

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ ├── README.md

│ ├── variant-slim.md

│ └── variant.md

├── docker

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ ├── README.md

│ ├── variant-rootless.md

│ └── variant-windowsservercore.md

├── Dockerfile

├── drupal

│ ├── compose.yaml

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.svg

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ ├── README.md

│ └── variant-fpm.md

├── eclipse-mosquitto

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── eclipse-temurin

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── eggdrop

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── elasticsearch

│ ├── compose.yaml

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ ├── README.md

│ └── variant-alpine.md

├── elixir

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── emqx

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.svg

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── erlang

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── fedora

│ ├── content.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── flink

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── fluentd

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── friendica

│ ├── compose.yaml

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.svg

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── gazebo

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── gcc

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── generate-repo-stub-readme.sh

├── geonetwork

│ ├── compose.yaml

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ ├── README.md

│ ├── variant-postgres.md

│ └── variant.md

├── get-categories.sh

├── ghost

│ ├── compose.yaml

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── golang

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ ├── README.md

│ ├── variant-alpine.md

│ └── variant-tip.md

├── gradle

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── groovy

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── haproxy

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── haskell

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ ├── README.md

│ └── variant-slim.md

├── haxe

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── hello-world

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ ├── README.md

│ └── update.sh

├── hitch

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── httpd

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── hylang

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── ibm-semeru-runtimes

│ ├── content.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.svg

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── ibmjava

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── influxdb

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ ├── README.md

│ ├── variant-data.md

│ └── variant-meta.md

├── irssi

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── jetty

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── joomla

│ ├── compose.yaml

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── jruby

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── julia

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── kapacitor

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── kibana

│ ├── compose.yaml

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── kong

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── krakend

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── license.md

│ ├── logo-120.png

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── LICENSE

├── lightstreamer

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── liquibase

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── logstash

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ ├── README.md

│ └── variant-alpine.md

├── mageia

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── mariadb

│ ├── compose.yaml

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── markdownfmt.sh

├── matomo

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── maven

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── mediawiki

│ ├── compose.yaml

│ ├── content.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.svg

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── memcached

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── metadata.json

├── metadata.sh

├── mongo

│ ├── compose.yaml

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── mongo-express

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── monica

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.svg

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── mono

│ ├── content.md

│ ├── deprecated.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── mysql

│ ├── compose.yaml

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── nats

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── neo4j

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── neurodebian

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── nextcloud

│ ├── content.md

│ ├── deprecated.md

│ ├── github-repo

│ ├── license.md

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── nginx

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ ├── README.md

│ └── variant-perl.md

├── node

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── notary

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── odoo

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── open-liberty

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── openjdk

│ ├── content.md

│ ├── deprecated.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ ├── README.md

│ ├── variant-alpine.md

│ ├── variant-oracle.md

│ └── variant-slim.md

├── oraclelinux

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ ├── README.md

│ └── variant-slim.md

├── orientdb

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── parallel-update.sh

├── percona

│ ├── compose.yaml

│ ├── content.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── perl

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── photon

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── php

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ ├── README.md

│ ├── variant-apache.md

│ ├── variant-cli.md

│ ├── variant-fpm.md

│ └── variant.md

├── php-zendserver

│ ├── content.md

│ ├── deprecated.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── phpmyadmin

│ ├── compose.yaml

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── plone

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.svg

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── postfixadmin

│ ├── compose.yaml

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ ├── README.md

│ ├── variant-apache.md

│ ├── variant-fpm-alpine.md

│ └── variant-fpm.md

├── postgres

│ ├── compose.yaml

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── push.pl

├── push.sh

├── pypy

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── python

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ ├── README.md

│ └── variant-slim.md

├── r-base

│ ├── content.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── rabbitmq

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── rakudo-star

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── README.md

├── redis

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── redmine

│ ├── compose.yaml

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── registry

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── rethinkdb

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── rocket.chat

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.svg

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── rockylinux

│ ├── content.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── ros

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── ruby

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── rust

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── sapmachine

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── satosa

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.svg

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── scratch

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── silverpeas

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── solr

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── sonarqube

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── spark

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── spiped

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── storm

│ ├── compose.yaml

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── swift

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── swipl

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── teamspeak

│ ├── compose.yaml

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── telegraf

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── tomcat

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── tomee

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── traefik

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ ├── README.md

│ └── variant-alpine.md

├── ubuntu

│ ├── content.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── unit

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.svg

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── update.sh

├── varnish

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── websphere-liberty

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── wordpress

│ ├── compose.yaml

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ ├── README.md

│ ├── variant-cli.md

│ └── variant-fpm.md

├── xwiki

│ ├── content.md

│ ├── get-help.md

│ ├── github-repo

│ ├── issues.md

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ └── README.md

├── ymlfmt.sh

├── yourls

│ ├── compose.yaml

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.svg

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ ├── README.md

│ └── variant-fpm.md

├── znc

│ ├── content.md

│ ├── github-repo

│ ├── license.md

│ ├── logo.png

│ ├── maintainer.md

│ ├── metadata.json

│ ├── README-short.txt

│ ├── README.md

│ └── variant-slim.md

└── zookeeper

  ├── compose.yaml

  ├── content.md

  ├── github-repo

  ├── license.md

  ├── logo.png

  ├── maintainer.md

  ├── metadata.json

  ├── README-short.txt

  └── README.md

```

# Files

--------------------------------------------------------------------------------

/mongo-express/content.md:

--------------------------------------------------------------------------------

```markdown

# What is mongo-express?

mongo-express is a web-based MongoDB admin interface written in Node.js, Express.js, and Bootstrap3.

> [github.com/mongo-express/mongo-express](https://github.com/mongo-express/mongo-express)

%%LOGO%%

# How to use this image

```console

$ docker run --network some-network -e ME_CONFIG_MONGODB_SERVER=some-mongo -p 8081:8081 %%IMAGE%%

```

Then you can hit `http://localhost:8081` or `http://host-ip:8081` in your browser.

## Security Notice

JSON documents are parsed through a JavaScript virtual machine, so the web interface can be used to execute malicious JavaScript on a server.

**mongo-express should only be used privately for development purposes.**

# Configuration

Environment variables are passed to the `run` command for configuring a mongo-express container.

Name | Default | Description
--------------------------------|-----------------|------------
ME_CONFIG_BASICAUTH_USERNAME | '' | mongo-express web username
ME_CONFIG_BASICAUTH_PASSWORD | '' | mongo-express web password
ME_CONFIG_MONGODB_ENABLE_ADMIN | 'true' | Enable admin access to all databases. Send strings: `"true"` or `"false"`
ME_CONFIG_MONGODB_ADMINUSERNAME | '' | MongoDB admin username
ME_CONFIG_MONGODB_ADMINPASSWORD | '' | MongoDB admin password
ME_CONFIG_MONGODB_PORT | 27017 | MongoDB port
ME_CONFIG_MONGODB_SERVER | 'mongo' | MongoDB container name. Use a comma-delimited list of host names for replica sets.
ME_CONFIG_OPTIONS_EDITORTHEME | 'default' | mongo-express editor color theme, [more here](http://codemirror.net/demo/theme.html)
ME_CONFIG_REQUEST_SIZE | '100kb' | Maximum payload size. CRUD operations above this size will fail in [body-parser](https://www.npmjs.com/package/body-parser).
ME_CONFIG_SITE_BASEURL | '/' | Set the baseUrl to ease mounting at a subdirectory. Remember to include a leading and trailing slash.
ME_CONFIG_SITE_COOKIESECRET | 'cookiesecret' | String used by [cookie-parser middleware](https://www.npmjs.com/package/cookie-parser) to sign cookies.
ME_CONFIG_SITE_SESSIONSECRET | 'sessionsecret' | String used to sign the session ID cookie by [express-session middleware](https://www.npmjs.com/package/express-session).
ME_CONFIG_SITE_SSL_ENABLED | 'false' | Enable SSL.
ME_CONFIG_SITE_SSL_CRT_PATH | '' | SSL certificate file.
ME_CONFIG_SITE_SSL_KEY_PATH | '' | SSL key file.

The following are only needed if `ME_CONFIG_MONGODB_ENABLE_ADMIN` is **"false"**

Name | Default | Description
--------------------------------|-----------------|------------
ME_CONFIG_MONGODB_AUTH_DATABASE | 'db' | Database name
ME_CONFIG_MONGODB_AUTH_USERNAME | 'admin' | Database username
ME_CONFIG_MONGODB_AUTH_PASSWORD | 'pass' | Database password

## Example

```console

$ docker run -it --rm \

--network web_default \

--name mongo-express \

-p 8081:8081 \

-e ME_CONFIG_OPTIONS_EDITORTHEME="ambiance" \

-e ME_CONFIG_MONGODB_SERVER="web_db_1" \

-e ME_CONFIG_BASICAUTH_USERNAME="user" \

-e ME_CONFIG_BASICAUTH_PASSWORD="fairly long password" \

%%IMAGE%%

```

This example links to a container name typical of `docker compose`, changes the editor's color theme, and enables basic authentication.
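
As a variation on the example above, the settings can be kept in a file and passed with `docker run --env-file`, which keeps passwords out of the shell history. The following is a minimal sketch, assuming admin access is disabled so that the `ME_CONFIG_MONGODB_AUTH_*` variables apply. The file name `mongo-express.env`, the function name `run_mongo_express`, and every value are placeholders, not part of the image.

```bash
#!/usr/bin/env bash

# Hypothetical file name; replace every value below with your own.
env_file="mongo-express.env"

# One VAR=value pair per line. `docker run --env-file` takes the value
# literally up to the end of the line, so it is not wrapped in quotes.
cat > "$env_file" <<'EOF'
ME_CONFIG_MONGODB_SERVER=web_db_1
ME_CONFIG_MONGODB_ENABLE_ADMIN=false
ME_CONFIG_MONGODB_AUTH_DATABASE=db
ME_CONFIG_MONGODB_AUTH_USERNAME=admin
ME_CONFIG_MONGODB_AUTH_PASSWORD=pass
EOF

# The file holds credentials, so restrict it to the current user.
chmod 600 "$env_file"

# Defined but not called here; call it once Docker and the network exist.
run_mongo_express() {
	docker run -it --rm \
		--network web_default \
		--name mongo-express \
		-p 8081:8081 \
		--env-file "$env_file" \
		%%IMAGE%%
}
```

Calling `run_mongo_express` afterwards starts the container with the same network and port mapping as the example above.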

```

--------------------------------------------------------------------------------

/adminer/content.md:

--------------------------------------------------------------------------------

```markdown

# Adminer

## What is Adminer?

Adminer (formerly phpMinAdmin) is a full-featured database management tool written in PHP. Unlike phpMyAdmin, it consists of a single file ready to deploy to the target server. Adminer is available for MySQL, PostgreSQL, SQLite, MS SQL, Oracle, Firebird, SimpleDB, Elasticsearch and MongoDB.

> [adminer.org](https://www.adminer.org)

%%LOGO%%

## How to use this image

### Standalone

```console

$ docker run --link some_database:db -p 8080:8080 %%IMAGE%%

```

Then you can hit `http://localhost:8080` or `http://host-ip:8080` in your browser.

### FastCGI

If you are already running a FastCGI-capable web server, you might prefer running Adminer via FastCGI:

```console

$ docker run --link some_database:db -p 9000:9000 %%IMAGE%%:fastcgi

```

Then point your web server to port 9000 of the container.

Note: This exposes the FastCGI socket to the Internet. Make sure to add proper firewall rules or use a private Docker network instead to prevent direct access.

### %%COMPOSE%%

Run `docker compose up`, wait for it to initialize completely, and visit `http://localhost:8080` or `http://host-ip:8080` (as appropriate).

### Loading plugins

This image bundles all official Adminer plugins. You can find the list of plugins on GitHub: https://github.com/vrana/adminer/tree/master/plugins.

To load plugins you can pass a list of filenames in `ADMINER_PLUGINS`:

```console

$ docker run --link some_database:db -p 8080:8080 -e ADMINER_PLUGINS='tables-filter tinymce' %%IMAGE%%

```

If a plugin *requires* parameters to work correctly, then instead of adding the plugin to `ADMINER_PLUGINS` you need to add a custom file to the container:

```console

$ docker run --link some_database:db -p 8080:8080 -e ADMINER_PLUGINS='login-servers' %%IMAGE%%

Unable to load plugin file "login-servers", because it has required parameters: servers

Create a file "/var/www/html/plugins-enabled/login-servers.php" with the following contents to load the plugin:

<?php

require_once('plugins/login-servers.php');

/** Set supported servers

* @param array array($domain) or array($domain => $description) or array($category => array())

* @param string

*/

return new AdminerLoginServers(

$servers = ???,

$driver = 'server'

);

```

To load a custom plugin you can add PHP scripts that return the instance of the plugin object to `/var/www/html/plugins-enabled/`.
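
As a rough sketch of the step described above, the following script writes a loader file for the `login-servers` plugin into a local directory and prints a `docker run` command that would bind-mount it into `/var/www/html/plugins-enabled/`. The host directory name and the server list (`'db' => 'Local database'`) are made-up examples, and the bind mount is only one possible way to get the file into the container.

```bash
#!/bin/bash
set -eu

# Hypothetical host directory that holds the plugin loader files.
plugin_dir="./plugins-enabled"
mkdir -p "$plugin_dir"

# The quoted heredoc keeps the PHP variables ($servers, $driver) literal.
# The server list is an illustrative value; replace it with your own hosts.
cat > "$plugin_dir/login-servers.php" <<'PHP'
<?php
require_once('plugins/login-servers.php');

return new AdminerLoginServers(
	$servers = array('db' => 'Local database'),
	$driver = 'server'
);
PHP

# Print (rather than run) a command that mounts the file read-only.
printf '%s\n' 'docker run --link some_database:db -p 8080:8080 -v "$PWD/plugins-enabled/login-servers.php:/var/www/html/plugins-enabled/login-servers.php:ro" %%IMAGE%%'
```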

### Choosing a design

The image bundles all the designs that are available in the source package of Adminer. You can find the list of designs on GitHub: https://github.com/vrana/adminer/tree/master/designs.

To use a bundled design you can pass its name in `ADMINER_DESIGN`:

```console

$ docker run --link some_database:db -p 8080:8080 -e ADMINER_DESIGN='nette' %%IMAGE%%

```

To use a custom design you can add a file called `/var/www/html/adminer.css`.

### Usage with external server

You can specify the default host with the `ADMINER_DEFAULT_SERVER` environment variable. This is useful if you are connecting to an external server or a docker container named something other than the default `db`.

```console

$ docker run -p 8080:8080 -e ADMINER_DEFAULT_SERVER=mysql %%IMAGE%%

```

## Supported Drivers

While Adminer supports a wide range of database drivers, this image only supports the following out of the box:

- MySQL

- PostgreSQL

- SQLite

- SimpleDB

- Elasticsearch

To add support for the other drivers you will need to install the following PHP extensions on top of this image:

- `pdo_dblib` (MS SQL)

- `oci8` (Oracle)

- `interbase` (Firebird)

- `mongodb` (MongoDB)

```

--------------------------------------------------------------------------------

/geonetwork/compose.yaml:

--------------------------------------------------------------------------------

```yaml

# GeoNetwork
#
# Access via "http://localhost:8080/geonetwork"
#
# Default user: admin
# Default password: admin

volumes:
  geonetwork:
  esdata:
  pgdata:
  pglog:

services:
  geonetwork:
    image: geonetwork:4.4
    healthcheck:
      test: curl http://localhost:8080/
      interval: 5s
      timeout: 5s
      retries: 30
    restart: always
    volumes:
      - geonetwork:/catalogue-data
    depends_on:
      database:
        condition: service_healthy
    ports:
      - 8080:8080
    environment:
      WEBAPP_CONTEXT_PATH: /geonetwork
      DATA_DIR: /catalogue-data
      TZ: Europe/Amsterdam

      JAVA_OPTS: >-
        --add-opens=jdk.management/com.sun.management.internal=ALL-UNNAMED
        -Djava.security.egd=file:/dev/./urandom -Djava.awt.headless=true
        -Xms512M -Xss512M -Xmx2G -XX:+UseConcMarkSweepGC
        -Djetty.httpConfig.requestHeaderSize=32768
        -Dorg.eclipse.jetty.server.Request.maxFormContentSize=500000
        -Dorg.eclipse.jetty.server.Request.maxFormKeys=4000
      # For remote debug
      # -Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=5005

      GN_CONFIG_PROPERTIES: >-
        -Dgeonetwork.dir=/catalogue-data
        -Dgeonetwork.formatter.dir=/catalogue-data/data/formatter
        -Dgeonetwork.schema.dir=/opt/geonetwork/WEB-INF/data/config/schema_plugins
        -Dgeonetwork.indexConfig.dir=/opt/geonetwork/WEB-INF/data/config/index
        -Dgeonetwork.schemapublication.dir=/opt/geonetwork/WEB-INF/data/resources/schemapublication
        -Dgeonetwork.htmlcache.dir=/opt/geonetwork/WEB-INF/data/resources/htmlcache
        -Des.host=elasticsearch
        -Des.protocol=http
        -Des.port=9200
        -Des.url=http://elasticsearch:9200
        -Des.username=
        -Des.password=
        -Dgeonetwork.ESFeaturesProxy.targetUri=http://elasticsearch:9200/gn-features/{_}
        -Dgeonetwork.HttpDashboardProxy.targetUri=http://kibana:5601

      GEONETWORK_DB_TYPE: postgres-postgis
      GEONETWORK_DB_HOST: database
      GEONETWORK_DB_PORT: 5432
      GEONETWORK_DB_NAME: geonetwork
      GEONETWORK_DB_USERNAME: geonetwork
      GEONETWORK_DB_PASSWORD: geonetwork

  database:
    image: postgis/postgis:16-3.4
    environment:
      POSTGRES_USER: geonetwork
      POSTGRES_PASSWORD: geonetwork
      POSTGRES_DB: geonetwork
    command: [postgres, -c, log_statement=all, -c, logging_collector=true, -c, log_file_mode=0644,
      -c, log_directory=/var/log/postgresql, -c, log_filename=postgresql.log]
    healthcheck:
      test: [CMD-SHELL, pg_isready -U postgres]
      interval: 5s
      timeout: 5s
      retries: 5
    volumes:
      - pgdata:/var/lib/postgresql/data
      - pglog:/var/log/postgresql

  elasticsearch:
    image: elasticsearch:7.17.15
    ports:
      - 9200:9200
    ulimits:
      memlock:
        soft: -1
        hard: -1
      nofile:
        soft: 65536
        hard: 65536
    healthcheck:
      test: curl -s http://localhost:9200 >/dev/null || exit 1
      interval: 10s
      timeout: 2s
      retries: 10
      start_period: 2m
    environment:
      ES_JAVA_OPTS: -Xms1G -Xmx1G
      discovery.type: single-node
    volumes:
      - esdata:/usr/share/elasticsearch/data

  kibana:
    image: kibana:7.17.15
    environment:
      SERVER_NAME: kibana
      ELASTICSEARCH_URL: http://elasticsearch:9200/
      SERVER_BASEPATH: /geonetwork/dashboards
      SERVER_REWRITEBASEPATH: 'false'
      KIBANA_INDEX: .dashboards
      XPACK_MONITORING_UI_CONTAINER_ELASTICSEARCH_ENABLED: 'true'
    depends_on:
      elasticsearch:
        condition: service_healthy

```

--------------------------------------------------------------------------------

/traefik/content.md:

--------------------------------------------------------------------------------

```markdown

%%LOGO%%

[Traefik](https://traefik.io) is a modern HTTP reverse proxy and ingress controller that makes deploying microservices easy.

Traefik integrates with your existing infrastructure components ([Kubernetes](https://kubernetes.io), [Docker](https://www.docker.com/), [Swarm](https://docs.docker.com/engine/swarm/), [Consul](https://www.consul.io/), [Nomad](https://www.nomadproject.io/), [etcd](https://coreos.com/etcd/), [Amazon ECS](https://aws.amazon.com/ecs), ...) and configures itself automatically and dynamically.

Pointing Traefik at your orchestrator should be the *only* configuration step you need.

## Traefik v3 - Example usage

Enable `docker` provider and dashboard UI:

```yml

## traefik.yml

# Docker configuration backend
providers:
  docker:
    defaultRule: "Host(`{{ trimPrefix `/` .Name }}.docker.localhost`)"

# API and dashboard configuration
api:
  insecure: true

```
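
To make the `defaultRule` template more concrete, here is a small shell sketch of the hostname it is expected to produce for a given container name. It assumes `.Name` is the container name, possibly with a leading slash, and that `trimPrefix` strips that slash once. The `default_host` function is purely illustrative and is not part of Traefik.

```bash
#!/bin/bash

# default_host NAME
# Mimics the template {{ trimPrefix `/` .Name }}.docker.localhost in plain shell.
default_host() {
	local name="${1#/}" # drop a single leading slash, if there is one
	printf '%s.docker.localhost\n' "$name"
}

default_host "/test"
default_host "test"
```

Both calls print `test.docker.localhost`, which matches the rule used for the `test` container in the example below.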

Start Traefik v3:

```sh

docker run -d -p 8080:8080 -p 80:80 \

-v $PWD/traefik.yml:/etc/traefik/traefik.yml \

-v /var/run/docker.sock:/var/run/docker.sock \

%%IMAGE%%:v3

```

Start a backend server using the `traefik/whoami` image:

```sh

docker run -d --name test traefik/whoami

```

Access the whoami service through Traefik via the defined rule `test.docker.localhost`:

```console

$ curl test.docker.localhost

Hostname: 0693100b16de

IP: 127.0.0.1

IP: ::1

IP: 192.168.215.4

RemoteAddr: 192.168.215.3:57618

GET / HTTP/1.1

Host: test.docker.localhost

User-Agent: curl/8.7.1

Accept: */*

Accept-Encoding: gzip

X-Forwarded-For: 192.168.215.1

X-Forwarded-Host: test.docker.localhost

X-Forwarded-Port: 80

X-Forwarded-Proto: http

X-Forwarded-Server: 8a37fd4f35fb

X-Real-Ip: 192.168.215.1

```

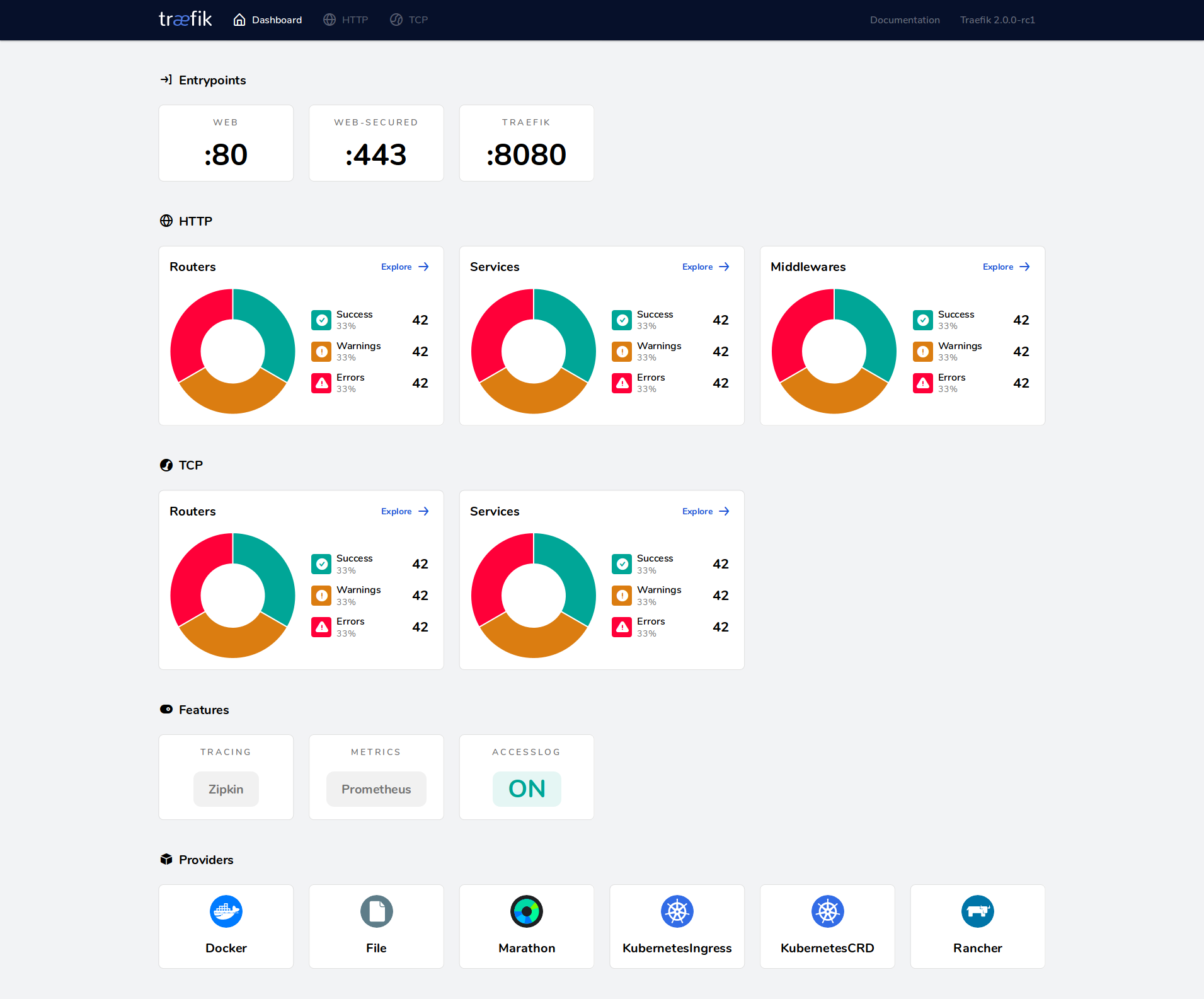

Access the Traefik Dashboard:

Open your web browser and navigate to `http://localhost:8080` to access the Traefik dashboard. This will provide an overview of routers, services, and middlewares.

## Traefik v2 - Example usage

Enable `docker` provider and dashboard UI:

```yml

## traefik.yml

# Docker configuration backend
providers:
  docker:
    defaultRule: "Host(`{{ trimPrefix `/` .Name }}.docker.localhost`)"

# API and dashboard configuration
api:
  insecure: true

```

Start Traefik v2:

```sh

docker run -d -p 8080:8080 -p 80:80 \

-v $PWD/traefik.yml:/etc/traefik/traefik.yml \

-v /var/run/docker.sock:/var/run/docker.sock \

%%IMAGE%%:v2.11

```

Start a backend server using the `traefik/whoami` image:

```sh

docker run -d --name test traefik/whoami

```

Access the whoami service through Traefik via the defined rule `test.docker.localhost`:

```console

$ curl test.docker.localhost

Hostname: 390a880bdfab

IP: 127.0.0.1

IP: 172.17.0.3

GET / HTTP/1.1

Host: test.docker.localhost

User-Agent: curl/7.65.3

Accept: */*

Accept-Encoding: gzip

X-Forwarded-For: 172.17.0.1

X-Forwarded-Host: test.docker.localhost

X-Forwarded-Port: 80

X-Forwarded-Proto: http

X-Forwarded-Server: 7e073cb54211

X-Real-Ip: 172.17.0.1

```

Access the Traefik Dashboard:

Open your web browser and navigate to `http://localhost:8080` to access the Traefik dashboard. This will provide an overview of routers, services, and middlewares.

## Documentation

You can find the complete documentation:

- for [v3.x](https://doc.traefik.io/traefik/)

- for [v2.11](https://doc.traefik.io/traefik/v2.11)

Community support is available at [https://community.traefik.io](https://community.traefik.io)

```

--------------------------------------------------------------------------------

/amazonlinux/content.md:

--------------------------------------------------------------------------------

```markdown

## What is Amazon Linux?

Amazon Linux is provided by Amazon Web Services (AWS). It is designed to provide a stable, secure, and high-performance execution environment for applications running on Amazon EC2. The full distribution includes packages that enable easy integration with AWS, including launch configuration tools and many popular AWS libraries and tools. AWS provides ongoing security and maintenance updates to all instances running Amazon Linux.

The Amazon Linux container image contains a minimal set of packages. To install additional packages, [use `yum`](https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/managing-software.html).

AWS provides three versions of Amazon Linux:

- [Amazon Linux 2023](https://aws.amazon.com/linux/amazon-linux-2023/) (recommended, latest)

- [Amazon Linux 2](https://aws.amazon.com/amazon-linux-2/)

- [Amazon Linux AMI](https://aws.amazon.com/amazon-linux-ami/)

For information on security updates for Amazon Linux, please refer to:

- [Amazon Linux 2023 Security Advisories](https://alas.aws.amazon.com/alas2023.html)

- [Amazon Linux 2 Security Advisories](https://alas.aws.amazon.com/alas2.html)

- [Amazon Linux AMI Security Advisories](https://alas.aws.amazon.com/)

Note that Docker Hub's vulnerability scanning for Amazon Linux is currently based on RPM versions, which does not reflect the state of backported patches for vulnerabilities.

%%LOGO%%

## What is Amazon Linux 2023?

- Amazon Linux 2023: https://aws.amazon.com/linux/amazon-linux-2023/

- 2023 Release Notes: https://docs.aws.amazon.com/linux/al2023/release-notes/relnotes.html

- FAQs: https://aws.amazon.com/linux/amazon-linux-2023/faqs/

- What's New: https://aws.amazon.com/about-aws/whats-new/2023/03/amazon-linux-2023/

- User Guide: https://docs.aws.amazon.com/linux/al2023/ug/what-is-amazon-linux.html

## Where can I run Amazon Linux container images?

You can run Amazon Linux container images in any Docker-based environment. Examples include your laptop, Amazon EC2 instances, and ECS clusters.

## What packages are available in the Amazon Linux containers?

Amazon Linux Docker container images contain a subset of the packages in the images for use on EC2 and as VMs in on-premises scenarios. The container images can be configured to use any of the full set of packages in images for EC2 and on-premises use.

## Will updates be available for Amazon Linux containers?

Similar to the Amazon Linux images for AWS EC2 and on-premises use, Amazon Linux container images will get ongoing updates from Amazon in the form of security updates, bug fix updates, and other enhancements. Security bulletins for Amazon Linux are available at https://alas.aws.amazon.com/

## What support is available for Amazon Linux outside AWS?

- GitHub Issues: https://github.com/amazonlinux/container-images/issues

- Documentation: http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/amazon-linux-ami-basics.html

- Amazon Linux Forums: https://forums.aws.amazon.com/forum.jspa?forumID=228

- Paid Support from AWS: https://aws.amazon.com/premiumsupport/

## Will AWS support the current versions of Amazon Linux going forward?

Yes; in order to avoid any disruption to your existing applications and to facilitate migration to Amazon Linux 2023, AWS will provide regular security updates for Amazon Linux 2 and Amazon Linux 2018.03. Please refer to their FAQs for more information. You can also use all your existing support channels such as AWS Premium Support and Amazon Linux Discussion Forum to continue to submit support requests.

## FAQs

### Amazon Linux 2023

- FAQs: https://aws.amazon.com/linux/amazon-linux-2023/faqs/

### Amazon Linux 2

- FAQs: https://aws.amazon.com/amazon-linux-2/faqs/

### Amazon Linux 1

- FAQs: https://aws.amazon.com/amazon-linux-ami/faqs/

```

--------------------------------------------------------------------------------

/storm/content.md:

--------------------------------------------------------------------------------

```markdown

# What is Apache Storm?

Apache Storm is a free and open source distributed realtime computation system. Apache Storm makes it easy to reliably process unbounded streams of data, doing for realtime processing what Hadoop did for batch processing. Apache Storm is simple, can be used with any programming language, and is a lot of fun to use!

Apache Storm has many use cases: realtime analytics, online machine learning, continuous computation, distributed RPC, ETL, and more. Apache Storm is fast: a benchmark clocked it at over a million tuples processed per second per node. It is scalable, fault-tolerant, guarantees your data will be processed, and is easy to set up and operate.

Apache Storm integrates with the queueing and database technologies you already use. An Apache Storm topology consumes streams of data and processes those streams in arbitrarily complex ways, repartitioning the streams between each stage of the computation however needed.

> [wikipedia.org/wiki/Storm_(event_processor)](https://en.wikipedia.org/wiki/Storm_(event_processor))

%%LOGO%%

# How to use this image

## Running topologies in local mode

This assumes you have `topology.jar` in the current directory.

```console

$ docker run -it -v $(pwd)/topology.jar:/topology.jar %%IMAGE%% storm jar /topology.jar org.apache.storm.starter.ExclamationTopology

```

## Setting up a minimal Storm cluster

1. [Apache Zookeeper](https://zookeeper.apache.org/) is required to run a Storm cluster, so start it first. Because Zookeeper "fails fast", it's better to always restart it.

```console

$ docker run -d --restart always --name some-zookeeper zookeeper

```

2. The Nimbus daemon has to be connected to Zookeeper. It is also a "fail fast" system.

```console

$ docker run -d --restart always --name some-nimbus --link some-zookeeper:zookeeper %%IMAGE%% storm nimbus

```

3. Finally, start a single Supervisor node. It will talk to Nimbus and Zookeeper.

```console

$ docker run -d --restart always --name supervisor --link some-zookeeper:zookeeper --link some-nimbus:nimbus %%IMAGE%% storm supervisor

```

4. Now you can submit a topology to our cluster.

```console

$ docker run --link some-nimbus:nimbus -it --rm -v $(pwd)/topology.jar:/topology.jar %%IMAGE%% storm jar /topology.jar org.apache.storm.starter.WordCountTopology topology

```

5. Optionally, you can start the Storm UI.

```console

$ docker run -d -p 8080:8080 --restart always --name ui --link some-nimbus:nimbus %%IMAGE%% storm ui

```

## %%COMPOSE%%

Run `docker compose up` and wait for it to initialize completely. The Nimbus will be available at `http://localhost:6627` or `http://host-ip:6627` (as appropriate).

## Configuration

This image uses the [default configuration](https://github.com/apache/storm/blob/v2.0.0/conf/defaults.yaml) of Apache Storm. There are two main ways to change it.

1. Using command line arguments.

```console

$ docker run -d --restart always --name nimbus %%IMAGE%% storm nimbus -c storm.zookeeper.servers='["zookeeper"]'

```

2. Assuming you have `storm.yaml` in the current directory, you can mount it as a volume.

```console

$ docker run -it -v $(pwd)/storm.yaml:/conf/storm.yaml %%IMAGE%% storm nimbus

```
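
For illustration, a minimal `storm.yaml` for the second option could be generated as below. `storm.zookeeper.servers` is the key already used on the command line above. `nimbus.seeds` and the host names `zookeeper` and `nimbus` are assumptions that match the `--link` aliases from the cluster walkthrough, so check the key names against the defaults file for your Storm version.

```bash
#!/bin/bash
set -eu

# Write a small override file; anything not listed keeps its default value.
cat > storm.yaml <<'YAML'
# Host names are assumed to match the --link aliases used earlier.
storm.zookeeper.servers:
  - "zookeeper"
nimbus.seeds:
  - "nimbus"
YAML

# Print (rather than run) the command that mounts the file into the container.
printf '%s\n' 'docker run -it -v "$(pwd)/storm.yaml:/conf/storm.yaml" %%IMAGE%% storm nimbus'
```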

## Logging

This image uses [default logging configuration](https://github.com/apache/storm/tree/v2.0.0/log4j2). All logs go to the `/logs` directory by default.

## Data persistence

No data is persisted by default. For convenience, the image contains `/data` and `/logs` directories owned by the `storm` user. Use them to persist data and logs using volumes.

```console

$ docker run -it -v /logs -v /data %%IMAGE%% storm nimbus

```

*Please note that using paths other than those predefined is likely to cause permission denied errors, because for [security reasons](https://docs.docker.com/engine/userguide/eng-image/dockerfile_best-practices/#user) Storm runs as the non-root `storm` user.*

```

--------------------------------------------------------------------------------

/amazoncorretto/content.md:

--------------------------------------------------------------------------------

```markdown

### What is Amazon Corretto?

Corretto is a binary distribution of the Open Java Development Kit (OpenJDK) with long-term support from Amazon. Corretto is certified using the Java Technical Compatibility Kit (TCK) to ensure it meets the Java SE standard and is available on Linux, Windows, and macOS. It includes [patches from Amazon](https://docs.aws.amazon.com/corretto/latest/corretto-8-ug/patches.html) that have proven useful in running our own services.

%%LOGO%%

### Why should I use Corretto?

Corretto is a reliable binary distribution of OpenJDK with the assurance of long-term support provided at no cost to you. Amazon runs Corretto internally on thousands of production services. Every modification we make to Corretto fixes or mitigates a problem we found running OpenJDK. Amazon also plans to apply urgent fixes (including security) when they are available and ready to use, outside of the regular quarterly cycle.

### How is Corretto different from OpenJDK?

Corretto is a distribution of OpenJDK with patches included by Amazon that are not yet integrated in the corresponding OpenJDK update projects. We focus on patches that improve performance or stability in OpenJDK, chosen based on Amazon's observations running large services.

### What kinds of patches does Amazon intend to include in Corretto?

Patches will include security fixes, performance enhancements (e.g., speeding up frequently used functions), garbage collection scheduling, and preventing out-of-memory situations, as well as improved monitoring, reporting, and thread management.

### Is there any cost associated with using Corretto?

Corretto is distributed by Amazon under an Open Source license at no cost to you. It is licensed under the terms of the GNU General Public License version 2 with the Class Path Exception (GPLv2 with CPE). Amazon does not charge for its use or distribution.

### What is included in Corretto's long-term support?

Amazon will provide security updates for Corretto 8 until at least June 2023. Updates are planned to be released quarterly. Corretto 11, corresponding to OpenJDK 11, will be available during the first half of 2019. Amazon will support Corretto 11 with quarterly updates until at least August 2024.

### Can I use Corretto as a drop-in replacement for other JDKs?

Corretto is designed as a drop-in replacement for all Java SE distributions unless you are using features (e.g., Java Flight Recorder) not available in OpenJDK. Once Corretto binaries are installed on a host and correctly invoked to run your Java applications (e.g., using the alternatives command on Linux), existing command-line options, tuning parameters, monitoring, and anything else in place will continue to work as before.

### Why does a security scanner show that a Docker image has a CVE?

If a security scanner reports that an amazoncorretto image includes a CVE, the first recommended action is to pull an updated version of this image.

If no updated image is available, run the appropriate command to update packages for the platform, i.e., run "apk -U upgrade" for Alpine or "yum update -y --security" for Amazon Linux in your Dockerfiles or systems to resolve the issue immediately.

If no updated package is available, please treat this as a potential security issue and follow [these instructions](https://aws.amazon.com/security/vulnerability-reporting/) or email AWS security directly at [[email protected]](mailto:[email protected]).

It is the responsibility of the base docker image supplier to provide timely security updates to images and packages. The amazoncorretto images are automatically rebuilt when a new base image is made available, but we do not make changes to our Dockerfiles to pull in one-off package updates. If a new base image has not yet been made generally available by a base docker image maintainer, please contact that maintainer to request that the issue be addressed.

Note that there are multiple reasons why a CVE may appear to be present in a docker image, as explained in the [docker library FAQs](https://github.com/docker-library/faq/tree/73f10b0daf2fb8e7b38efaccc0e90b3510919d51#why-does-my-security-scanner-show-that-an-image-has-cves).

```

--------------------------------------------------------------------------------

/tomee/content.md:

--------------------------------------------------------------------------------

```markdown

# What is TomEE?

[Apache TomEE](http://tomee.apache.org/), pronounced "Tommy", is an all-Apache Java EE Web Profile certified stack where Apache Tomcat is top dog. Apache TomEE is assembled from a vanilla Apache Tomcat zip file. We start with Apache Tomcat, add our jars and zip up the rest. The result is Tomcat with added EE features - TomEE.

%%LOGO%%

Apache TomEE comes in four different flavors: Web Profile, JAX-RS, Plus and Plume.

- Apache TomEE Web Profile delivers Servlets, JSP, JSF, JTA, JPA, CDI, Bean Validation and EJB Lite.

- Apache TomEE JAX-RS (RESTful Services) delivers the Web Profile plus JAX-RS (RESTful Services).

- Apache TomEE Plus delivers all that is in the Web Profile and JAX-RS (RESTful Services), plus EJB Full, Java EE Connector Architecture, JMS (Java Message Service) and JAX-WS (Web Services).

- Apache TomEE Plume delivers all that is in the Plus Profile, but includes Mojarra and EclipseLink support.

Dockerfiles for all supported versions can be found at https://github.com/tomitribe/docker-tomee

# Apache TomEE and Tomitribe

Tomitribe provides commercial support, professional services and training for Apache TomEE. We offer a new business model that furthers the development and growth of the Open Source project while fulfilling business requirements for production support. The Tomitribe Community Partnership Program connects businesses with the community.

All Tomitribe developers are committers of TomEE and influence the project's direction and development. We want to extend this influence to the people and companies that matter: the users of TomEE. How? The Tomitribe Community Partnership Program.

Below are some press releases that might be helpful:

- Tomitribe Announces [Enterprise Service Support for Apache TomEE](http://www.tomitribe.com/company/press/tomitribe_enterprise_service_support_for_apache_tomee_javaone_2013/)

- Tomitribe Introduces [Community Partnership Program Conference](http://www.tomitribe.com/company/press/tomitribe-introduces-community-partnership-program-and-presents-java-ee-sessions-at-javaone-2014-conference/)

# Apache TomEE and ManageCat

ManageCat is another company that provides commercial enterprise support for Apache TomEE. ManageCat is involved in many Apache Java EE projects and contributes to the open source Java EE ecosystem. ManageCat's co-founder is also a founder of the open source Apache OpenWebBeans project (http://openwebbeans.apache.org), which is heavily used in the Apache TomEE application server for CDI integration.

ManageCat is a cloud management and service platform for Apache Tomcat and Apache TomEE servers. Being involved in many Apache Java EE projects, we want to share our knowledge not only of Apache TomEE but also of other Java EE technologies, including JPA, EJB, CDI, JSF, JSTL, JTA and JMS. We help our customers develop and deploy their production Java EE applications smoothly.

- ManageCat Announces [Enterprise Service Support for Apache Tomcat and TomEE](http://managecat.com/index.php/enterprise-tomcat-support)

# How to use this image.

Run the default TomEE server (`CMD ["catalina.sh", "run"]`):

```console

$ docker run -it --rm %%IMAGE%%:<java-version>-<tomeeversion>-<flavour>

```

For example, to run Apache TomEE 1.7.2 with JRE 8 and the Web Profile flavour:

```console

$ docker run -it --rm %%IMAGE%%:8-jre-1.7.2-webprofile

```

You can test it by visiting `http://container-ip:8080` in a browser or, if you need access outside the host, on port 8888:

```console

$ docker run -it --rm -p 8888:8080 %%IMAGE%%:<java-version>-<tomeeversion>-<flavour>

```

You can then go to `http://localhost:8888` or `http://host-ip:8888` in a browser.

The configuration files are available in `/usr/local/tomee/conf/`. By default, no user is included in the "manager-gui" role required to operate the "/manager/html" web application. If you wish to use this app, you must define such a user in `tomcat-users.xml`.

You can also use this image as the base image for deploying your WAR application. To do so, create a Dockerfile based on the TomEE Docker image and add the WAR file to the `webapps` directory:

```dockerfile

FROM %%IMAGE%%:<java-version>-<tomeeversion>-<flavour>

COPY <locationofapplication>/<warfile> /usr/local/tomee/webapps/<warfile>

```

```

--------------------------------------------------------------------------------

/.template-helpers/variant.sh:

--------------------------------------------------------------------------------

```bash

#!/bin/bash
set -eo pipefail

repo="$1"
if [ -z "$repo" ]; then
	echo >&2 "usage: $0 repo"
	echo >&2 " ie: $0 hylang"
	exit 1
fi

dir="$(dirname "$(readlink -f "$BASH_SOURCE")")"
repoDir="$dir/../$repo"

# prints "$2$1$3$1...$N"
join() {
	local sep="$1"; shift
	local out; printf -v out "${sep//%/%%}%s" "$@"
	echo "${out#$sep}"
}

commaJoin() {
	local items=( $(xargs -n1 <<<"$1" | sort -u) ); shift
	local sep=', '
	case "${#items[@]}" in
		0)
			return
			;;
		1)
			echo "$items"
			return
			;;
		2)
			sep=' '
			;;
	esac
	items[-1]="or ${items[-1]}"
	join "$sep" "${items[@]}"
}

tagFiles() {
	local tag="$1"; shift
	local tagUltimate="${tag##*-}" # 3.6-stretch -> stretch
	local tagPenultimate="${tag%-*}" # 2.7.15-windowsservercore-1803 -> 2.7.15-windowsservercore
	tagPenultimate="${tagPenultimate##*-}" # 2.7.15-windowsservercore -> windowsservercore

	echo \
		"$repoDir/variant-$tag.md" \
		"$repoDir/variant-$tagUltimate.md" \
		"$repoDir/variant-$tagPenultimate.md" \
		"$dir/variant-$tag.md" \
		"$dir/variant-$tagUltimate.md" \
		"$dir/variant-$tagPenultimate.md"
}

_repo() {
	local repo=$1; shift

	# if we haven't set BASHBREW_LIBRARY explicitly (like Jenkins does, for example), don't trust the local library
	if [ -z "${BASHBREW_LIBRARY:-}" ]; then
		repo="https://github.com/docker-library/official-images/raw/master/library/$repo"
	fi

	echo "$repo"
}

bbRepo="$(_repo "$repo")"

IFS=$'\n'
tags=( $(bashbrew cat -f '
	{{- $archSpecific := getenv "ARCH_SPECIFIC_DOCS" -}}
	{{- range ($archSpecific | ternary (archFilter arch .Entries) .Entries) -}}
		{{- join "\n" .Tags -}}
		{{- "\n" -}}
	{{- end -}}
' "$bbRepo") )
unset IFS

text=
declare -A includedFiles=()
for tag in "${tags[@]}"; do
	for f in $(tagFiles "$tag"); do
		if [ -n "${includedFiles[$f]}" ]; then
			# make sure we don't duplicate variant sections
			break
		fi
		if [ -f "$f" ]; then
			includedFiles[$f]=1
			if [ -s "$f" ]; then
				# an empty file can be used to disable a specific "variant" section for an image
				text+=$'\n' # give a little space
				text+="$(< "$f")"
				text+=$'\n' # parameter expansion eats the trailing newline
			fi
			break
		fi
	done
done

if [ -n "$text" ]; then
	default="$([ -f "$repoDir/variant.md" ] && cat "$repoDir/variant.md" || cat "$dir/variant.md")"
	default+=$'\n' # parameter expansion eats the trailing newline

	if [ "$repo" != 'debian' ] && [ "$repo" != 'ubuntu' ]; then
		# what is 'bullseye', 'buster' and 'sid'
		# https://github.com/docker-library/python/issues/343
		debian=( $(bashbrew list --uniq "$(_repo 'debian')" | grep -vE 'stable|slim|backports|experimental|testing' | cut -d: -f2) )
		ubuntu=( $(bashbrew list "$(_repo 'ubuntu')" | grep -vE 'devel|latest|[0-9]' | cut -d: -f2) )
		foundDebianTags=
		foundUbuntuTags=
		for tag in ${tags[@]}; do
			for suite in "${debian[@]}"; do
				case "$tag" in
					*-"$suite" | "$suite"-* | *-"$suite"-* | "$suite" )
						foundDebianTags+=" $suite"
						;;
				esac
			done
			for suite in "${ubuntu[@]}"; do
				case "$tag" in
					*-"$suite" | "$suite"-* | *-"$suite"-* | "$suite" )
						foundUbuntuTags+=" $suite"
						;;
				esac
			done
		done

		if [ -n "$foundDebianTags" ]; then
			default+=$'\n' # give a little space
			default+="$( sed -e 's/%%DEB-SUITES%%/'"$(commaJoin "$foundDebianTags")"'/' "$dir/variant-default-debian.md" )"
			default+=$'\n' # parameter expansion eats the trailing newline
		fi
		if [ -n "$foundUbuntuTags" ]; then
			default+=$'\n' # give a little space
			default+="$( sed -e 's/%%DEB-SUITES%%/'"$(commaJoin "$foundUbuntuTags")"'/' "$dir/variant-default-ubuntu.md" )"
			default+=$'\n' # parameter expansion eats the trailing newline
		fi
	fi

	# buildpack-deps text
	potentialTags="$(bashbrew list --uniq "$bbRepo" | cut -d: -f2)"
	for tag in $potentialTags; do
		baseImage="$(bashbrew cat -f '{{ .ArchLastStageFrom (.TagEntry.Architectures | first) .TagEntry }}' "$bbRepo:$tag" 2>/dev/null)"
		case "$baseImage" in
			buildpack-deps:*-*) ;; # "scm", "curl" -- not large images
			buildpack-deps:*)
				default+=$'\n' # give a little space
				default+="$(< "$dir/variant-default-buildpack-deps.md")"
				default+=$'\n' # parameter expansion eats the trailing newline
				break
				;;
		esac
	done

	echo
	echo
	echo -n "$default"
	echo "$text"
fi

```
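
The suffix handling in `tagFiles` relies on Bash parameter expansion, which can be tried in isolation. The sketch below reuses the same expansions in a hypothetical helper, `tag_suffixes`, which is not part of `variant.sh`. It prints the last and second-to-last dash-separated components of a tag; these are the values that `tagFiles` substitutes into the `variant-*.md` candidate file names.

```bash
#!/bin/bash

# tag_suffixes TAG
# Prints "<ultimate> <penultimate>" using the same expansions as tagFiles.
tag_suffixes() {
	local tag="$1"
	local ultimate="${tag##*-}"      # longest prefix up to the last dash removed
	local penultimate="${tag%-*}"    # shortest suffix starting at the last dash removed
	penultimate="${penultimate##*-}" # then keep only its last component
	echo "$ultimate $penultimate"
}

tag_suffixes "3.6-stretch"                   # prints "stretch 3.6"
tag_suffixes "2.7.15-windowsservercore-1803" # prints "1803 windowsservercore"
```

For a tag such as `2.7.15-windowsservercore-1803`, the penultimate component is what lets the lookup reach `variant-windowsservercore.md` once no file matches the full tag or its last component.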

--------------------------------------------------------------------------------

/kapacitor/content.md:

--------------------------------------------------------------------------------

```markdown

# Kapacitor

Kapacitor is an open source data processing engine written in Go. It can process both stream and batch data.

[Kapacitor Official Documentation](https://docs.influxdata.com/kapacitor/latest/introduction/getting_started/)

%%LOGO%%

## Using this image

### Using the default configuration

Start the Kapacitor container with default options:

```console

$ docker run -p 9092:9092 %%IMAGE%%

```

Start the Kapacitor container sharing the data directory with the host:

```console

$ docker run -p 9092:9092 \

-v $PWD:/var/lib/kapacitor \

%%IMAGE%%

```

Modify `$PWD` to the directory where you want to store data associated with the Kapacitor container.

You can also have Docker control the volume mountpoint by using a named volume.

```console

$ docker run -p 9092:9092 \

-v kapacitor:/var/lib/kapacitor \

%%IMAGE%%

```

### Configuration

Kapacitor can be configured either from a config file or using environment variables. To mount a configuration file and use it with the server, first generate the default configuration file:

```console

$ docker run --rm %%IMAGE%% kapacitord config > kapacitor.conf

```

Modify the default configuration, which will now be available under `$PWD`. Then start the Kapacitor container.

```console

$ docker run -p 9092:9092 \

-v $PWD/kapacitor.conf:/etc/kapacitor/kapacitor.conf:ro \

%%IMAGE%%

```

Modify `$PWD` to the directory where you want to store the configuration file.

For environment variables, the format is `KAPACITOR_$SECTION_$NAME`. All dashes (`-`) are replaced with underscores (`_`). If the variable isn't in a section, then omit that part. If the config section is an array, use a number to set the nth value in the configuration file.

Examples:

```console

KAPACITOR_HOSTNAME=kapacitor

KAPACITOR_LOGGING_LEVEL=INFO

KAPACITOR_REPORTING_ENABLED=false

KAPACITOR_INFLUXDB_0_URLS_0=http://influxdb:8086

```
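
The naming rule above can be sketched as a small shell function. `kapacitor_env_name` is a hypothetical helper used only for illustration, and the `http` / `bind-address` pair is an assumed example of a key containing a dash:

```shell
# Hypothetical helper: build an environment variable name from a config path.
# Arguments are the section, an optional array index, and the option name.
kapacitor_env_name() {
	# Join all arguments with underscores and drop the trailing one.
	name="$(printf '%s_' "$@")"
	name="${name%_}"
	# Upper-case the result and replace dashes with underscores.
	printf 'KAPACITOR_%s\n' "$name" | tr 'a-z-' 'A-Z_'
}

kapacitor_env_name logging level
kapacitor_env_name influxdb 0 urls 0
kapacitor_env_name http bind-address  # assumed key, shown for the dash rule
```

The resulting names can then be passed to `docker run` with `-e NAME=value`.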

Find out more about configuring Kapacitor [here](https://docs.influxdata.com/kapacitor/latest/introduction/installation/).

#### Running as root

Starting in v1.7.4, Kapacitor no longer runs as the root user by default. To revert this change, set `KAPACITOR_AS_ROOT=true` as an environment variable.

### Exposed Ports

- 9092 TCP -- HTTP API endpoint

#### Subscriptions

Subscriptions allow InfluxDB to push data to Kapacitor for faster alerting instead of requiring Kapacitor to pull data from InfluxDB.

These examples assume you are using a custom configuration file that takes advantage of Docker's built-in service discovery capability. In order to do so, we'll first create a new network:

```console

$ docker network create influxdb

```

Next, we'll start our InfluxDB container named `influxdb`:

```console

$ docker run -d --name=influxdb \

--net=influxdb \

influxdb

```

Start the Kapacitor container with its hostname matching the container name, so that Kapacitor can create subscriptions correctly, and with `KAPACITOR_INFLUXDB_0_URLS_0` set to point at InfluxDB.

```console

$ docker run -p 9092:9092 \

--name=kapacitor \

-h kapacitor \

--net=influxdb \

-e KAPACITOR_INFLUXDB_0_URLS_0=http://influxdb:8086 \

%%IMAGE%%

```

You can also start Kapacitor sharing the network interface of the InfluxDB container. If you do this, Docker will act as if both processes were running on the same machine.

```console

$ docker run -p 9092:9092 \

--name=kapacitor \

--net=container:influxdb \

%%IMAGE%%

```

When run like this, Kapacitor can reach InfluxDB over `localhost`.

### CLI / SHELL

Start the container:

```console

$ docker run --name=kapacitor -d -p 9092:9092 %%IMAGE%%

```

Run another container linked to the `kapacitor` container to use the client. Set the `KAPACITOR_URL` environment variable so the client knows how to connect to Kapacitor. Mount your current directory to access TICKscript files.

```console

$ docker run --rm --net=container:kapacitor \

-v $PWD:/root -w=/root -it \

%%IMAGE%% bash -l

```

Then, from within the container, you can use the `kapacitor` command to interact with the daemon.

See [this](https://docs.influxdata.com/kapacitor/latest/introduction/getting_started/) for a more detailed getting started guide with Kapacitor.

```

--------------------------------------------------------------------------------

/joomla/content.md:

--------------------------------------------------------------------------------

```markdown

# What is Joomla?