# Directory Structure

```

├── .gitignore

├── .python-version

├── LICENSE

├── Makefile

├── mcp_alchemy

│ ├── __init__.py

│ └── server.py

├── pyproject.toml

├── README.md

├── screenshot.png

├── tests

│ ├── Chinook_Sqlite.sqlite

│ ├── claude_desktop_config.json

│ ├── docker-compose.yml

│ ├── test_report.md

│ └── test.py

├── TESTS.md

└── uv.lock

```

# Files

--------------------------------------------------------------------------------

/.python-version:

--------------------------------------------------------------------------------

```

1 | 3.12

2 |

```

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

```

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | build/

12 | develop-eggs/

13 | dist/

14 | downloads/

15 | eggs/

16 | .eggs/

17 | lib/

18 | lib64/

19 | parts/

20 | sdist/

21 | var/

22 | wheels/

23 | share/python-wheels/

24 | *.egg-info/

25 | .installed.cfg

26 | *.egg

27 | MANIFEST

28 |

29 | # PyInstaller

30 | # Usually these files are written by a python script from a template

31 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

32 | *.manifest

33 | *.spec

34 |

35 | # Installer logs

36 | pip-log.txt

37 | pip-delete-this-directory.txt

38 |

39 | # Unit test / coverage reports

40 | htmlcov/

41 | .tox/

42 | .nox/

43 | .coverage

44 | .coverage.*

45 | .cache

46 | nosetests.xml

47 | coverage.xml

48 | *.cover

49 | *.py,cover

50 | .hypothesis/

51 | .pytest_cache/

52 | cover/

53 |

54 | # Translations

55 | *.mo

56 | *.pot

57 |

58 | # Django stuff:

59 | *.log

60 | local_settings.py

61 | db.sqlite3

62 | db.sqlite3-journal

63 |

64 | # Flask stuff:

65 | instance/

66 | .webassets-cache

67 |

68 | # Scrapy stuff:

69 | .scrapy

70 |

71 | # Sphinx documentation

72 | docs/_build/

73 |

74 | # PyBuilder

75 | .pybuilder/

76 | target/

77 |

78 | # Jupyter Notebook

79 | .ipynb_checkpoints

80 |

81 | # IPython

82 | profile_default/

83 | ipython_config.py

84 |

85 | # pyenv

86 | # For a library or package, you might want to ignore these files since the code is

87 | # intended to run in multiple environments; otherwise, check them in:

88 | # .python-version

89 |

90 | # pipenv

91 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

92 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

93 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

94 | # install all needed dependencies.

95 | #Pipfile.lock

96 |

97 | # UV

98 | # Similar to Pipfile.lock, it is generally recommended to include uv.lock in version control.

99 | # This is especially recommended for binary packages to ensure reproducibility, and is more

100 | # commonly ignored for libraries.

101 | #uv.lock

102 |

103 | # poetry

104 | # Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

105 | # This is especially recommended for binary packages to ensure reproducibility, and is more

106 | # commonly ignored for libraries.

107 | # https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

108 | #poetry.lock

109 |

110 | # pdm

111 | # Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.

112 | #pdm.lock

113 | # pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it

114 | # in version control.

115 | # https://pdm.fming.dev/latest/usage/project/#working-with-version-control

116 | .pdm.toml

117 | .pdm-python

118 | .pdm-build/

119 |

120 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm

121 | __pypackages__/

122 |

123 | # Celery stuff

124 | celerybeat-schedule

125 | celerybeat.pid

126 |

127 | # SageMath parsed files

128 | *.sage.py

129 |

130 | # Environments

131 | .env

132 | .venv

133 | env/

134 | venv/

135 | ENV/

136 | env.bak/

137 | venv.bak/

138 |

139 | # Spyder project settings

140 | .spyderproject

141 | .spyproject

142 |

143 | # Rope project settings

144 | .ropeproject

145 |

146 | # mkdocs documentation

147 | /site

148 |

149 | # mypy

150 | .mypy_cache/

151 | .dmypy.json

152 | dmypy.json

153 |

154 | # Pyre type checker

155 | .pyre/

156 |

157 | # pytype static type analyzer

158 | .pytype/

159 |

160 | # Cython debug symbols

161 | cython_debug/

162 |

163 | # PyCharm

164 | # JetBrains specific template is maintained in a separate JetBrains.gitignore that can

165 | # be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore

166 | # and can be added to the global gitignore or merged into this file. For a more nuclear

167 | # option (not recommended) you can uncomment the following to ignore the entire idea folder.

168 | .idea/

169 |

170 | # PyPI configuration file

171 | .pypirc

172 | .aider*

173 |

```

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

```markdown

1 | # MCP Alchemy

2 |

3 | <a href="https://www.pulsemcp.com/servers/runekaagaard-alchemy"><img src="https://www.pulsemcp.com/badge/top-pick/runekaagaard-alchemy" width="400" alt="PulseMCP Badge"></a>

4 |

5 | **Status: Works great and is in daily use without any known bugs.**

6 |

7 | **Status2: I just added the package to PyPI and updated the usage instructions. Please report any issues :)**

8 |

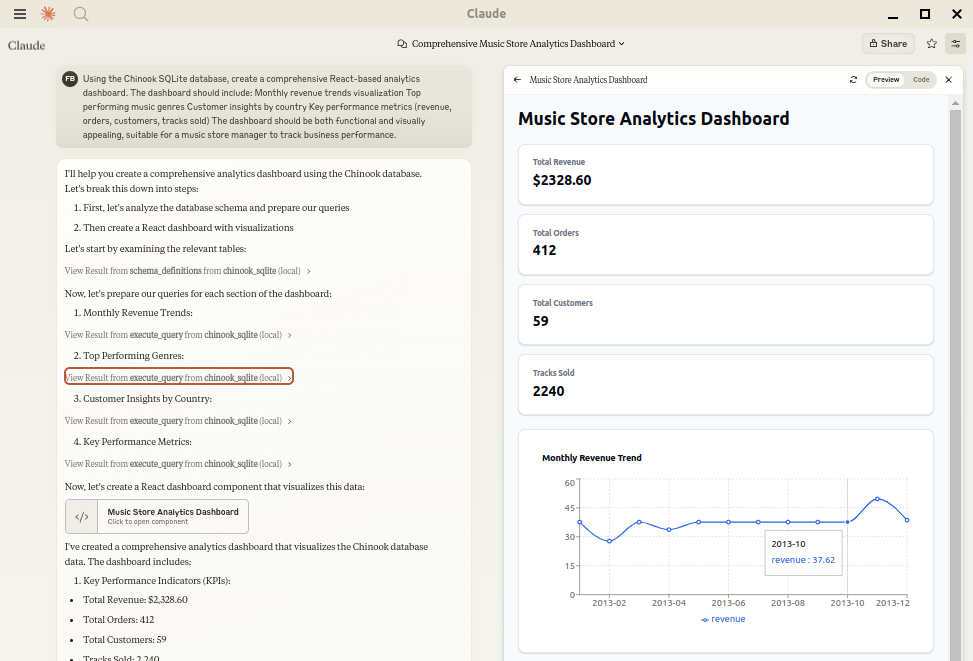

9 | Let Claude be your database expert! MCP Alchemy connects Claude Desktop directly to your databases, allowing it to:

10 |

11 | - Help you explore and understand your database structure

12 | - Assist in writing and validating SQL queries

13 | - Displays relationships between tables

14 | - Analyze large datasets and create reports

15 | - Claude Desktop Can analyse and create artifacts for very large datasets using [claude-local-files](https://github.com/runekaagaard/claude-local-files).

16 |

17 | Works with PostgreSQL, MySQL, MariaDB, SQLite, Oracle, MS SQL Server, CrateDB, Vertica,

18 | and a host of other [SQLAlchemy-compatible](https://docs.sqlalchemy.org/en/20/dialects/) databases.

19 |

20 |

21 |

22 | ## Installation

23 |

24 | Ensure you have uv installed:

25 | ```bash

26 | # Install uv if you haven't already

27 | curl -LsSf https://astral.sh/uv/install.sh | sh

28 | ```

29 |

30 | ## Usage with Claude Desktop

31 |

32 | Add to your `claude_desktop_config.json`. You need to add the appropriate database driver in the ``--with`` parameter.

33 |

34 | _Note: After a new version release there might be a period of up to 600 seconds while the cache clears locally

35 | cached causing uv to raise a versioning error. Restarting the MCP client once again solves the error._

36 |

37 | ### SQLite (built into Python)

38 | ```json

39 | {

40 | "mcpServers": {

41 | "my_sqlite_db": {

42 | "command": "uvx",

43 | "args": ["--from", "mcp-alchemy==2025.8.15.91819",

44 | "--refresh-package", "mcp-alchemy", "mcp-alchemy"],

45 | "env": {

46 | "DB_URL": "sqlite:////absolute/path/to/database.db"

47 | }

48 | }

49 | }

50 | }

51 | ```

52 |

53 | ### PostgreSQL

54 | ```json

55 | {

56 | "mcpServers": {

57 | "my_postgres_db": {

58 | "command": "uvx",

59 | "args": ["--from", "mcp-alchemy==2025.8.15.91819", "--with", "psycopg2-binary",

60 | "--refresh-package", "mcp-alchemy", "mcp-alchemy"],

61 | "env": {

62 | "DB_URL": "postgresql://user:password@localhost/dbname"

63 | }

64 | }

65 | }

66 | }

67 | ```

68 |

69 | ### MySQL/MariaDB

70 | ```json

71 | {

72 | "mcpServers": {

73 | "my_mysql_db": {

74 | "command": "uvx",

75 | "args": ["--from", "mcp-alchemy==2025.8.15.91819", "--with", "pymysql",

76 | "--refresh-package", "mcp-alchemy", "mcp-alchemy"],

77 | "env": {

78 | "DB_URL": "mysql+pymysql://user:password@localhost/dbname"

79 | }

80 | }

81 | }

82 | }

83 | ```

84 |

85 | ### Microsoft SQL Server

86 | ```json

87 | {

88 | "mcpServers": {

89 | "my_mssql_db": {

90 | "command": "uvx",

91 | "args": ["--from", "mcp-alchemy==2025.8.15.91819", "--with", "pymssql",

92 | "--refresh-package", "mcp-alchemy", "mcp-alchemy"],

93 | "env": {

94 | "DB_URL": "mssql+pymssql://user:password@localhost/dbname"

95 | }

96 | }

97 | }

98 | }

99 | ```

100 |

101 | ### Oracle

102 | ```json

103 | {

104 | "mcpServers": {

105 | "my_oracle_db": {

106 | "command": "uvx",

107 | "args": ["--from", "mcp-alchemy==2025.8.15.91819", "--with", "oracledb",

108 | "--refresh-package", "mcp-alchemy", "mcp-alchemy"],

109 | "env": {

110 | "DB_URL": "oracle+oracledb://user:password@localhost/dbname"

111 | }

112 | }

113 | }

114 | }

115 | ```

116 |

117 | ### CrateDB

118 | ```json

119 | {

120 | "mcpServers": {

121 | "my_cratedb": {

122 | "command": "uvx",

123 | "args": ["--from", "mcp-alchemy==2025.8.15.91819", "--with", "sqlalchemy-cratedb>=0.42.0.dev1",

124 | "--refresh-package", "mcp-alchemy", "mcp-alchemy"],

125 | "env": {

126 | "DB_URL": "crate://user:password@localhost:4200/?schema=testdrive"

127 | }

128 | }

129 | }

130 | }

131 | ```

132 | For connecting to CrateDB Cloud, use a URL like

133 | `crate://user:[email protected]:4200?ssl=true`.

134 |

135 | ### Vertica

136 | ```json

137 | {

138 | "mcpServers": {

139 | "my_vertica_db": {

140 | "command": "uvx",

141 | "args": ["--from", "mcp-alchemy==2025.8.15.91819", "--with", "vertica-python",

142 | "--refresh-package", "mcp-alchemy", "mcp-alchemy"],

143 | "env": {

144 | "DB_URL": "vertica+vertica_python://user:password@localhost:5433/dbname",

145 | "DB_ENGINE_OPTIONS": "{\"connect_args\": {\"ssl\": false}}"

146 | }

147 | }

148 | }

149 | }

150 | ```

151 |

152 | ## Environment Variables

153 |

154 | - `DB_URL`: SQLAlchemy [database URL](https://docs.sqlalchemy.org/en/20/core/engines.html#database-urls) (required)

155 | - `CLAUDE_LOCAL_FILES_PATH`: Directory for full result sets (optional)

156 | - `EXECUTE_QUERY_MAX_CHARS`: Maximum output length (optional, default 4000)

157 | - `DB_ENGINE_OPTIONS`: JSON string containing additional SQLAlchemy engine options (optional)

158 |

159 | ## Connection Pooling

160 |

161 | MCP Alchemy uses connection pooling optimized for long-running MCP servers. The default settings are:

162 |

163 | - `pool_pre_ping=True`: Tests connections before use to handle database timeouts and network issues

164 | - `pool_size=1`: Maintains 1 persistent connection (MCP servers typically handle one request at a time)

165 | - `max_overflow=2`: Allows up to 2 additional connections for burst capacity

166 | - `pool_recycle=3600`: Refreshes connections older than 1 hour (prevents timeout issues)

167 | - `isolation_level='AUTOCOMMIT'`: Ensures each query commits automatically

168 |

169 | These defaults work well for most databases, but you can override them via `DB_ENGINE_OPTIONS`:

170 |

171 | ```json

172 | {

173 | "DB_ENGINE_OPTIONS": "{\"pool_size\": 5, \"max_overflow\": 10, \"pool_recycle\": 1800}"

174 | }

175 | ```

176 |

177 | For databases with aggressive timeout settings (like MySQL's 8-hour default), the combination of `pool_pre_ping` and `pool_recycle` ensures reliable connections.

178 |

179 | ## API

180 |

181 | ### Tools

182 |

183 | - **all_table_names**

184 | - Return all table names in the database

185 | - No input required

186 | - Returns comma-separated list of tables

187 | ```

188 | users, orders, products, categories

189 | ```

190 |

191 | - **filter_table_names**

192 | - Find tables matching a substring

193 | - Input: `q` (string)

194 | - Returns matching table names

195 | ```

196 | Input: "user"

197 | Returns: "users, user_roles, user_permissions"

198 | ```

199 |

200 | - **schema_definitions**

201 | - Get detailed schema for specified tables

202 | - Input: `table_names` (string[])

203 | - Returns table definitions including:

204 | - Column names and types

205 | - Primary keys

206 | - Foreign key relationships

207 | - Nullable flags

208 | ```

209 | users:

210 | id: INTEGER, primary key, autoincrement

211 | email: VARCHAR(255), nullable

212 | created_at: DATETIME

213 |

214 | Relationships:

215 | id -> orders.user_id

216 | ```

217 |

218 | - **execute_query**

219 | - Execute SQL query with vertical output format

220 | - Inputs:

221 | - `query` (string): SQL query

222 | - `params` (object, optional): Query parameters

223 | - Returns results in clean vertical format:

224 | ```

225 | 1. row

226 | id: 123

227 | name: John Doe

228 | created_at: 2024-03-15T14:30:00

229 | email: NULL

230 |

231 | Result: 1 rows

232 | ```

233 | - Features:

234 | - Smart truncation of large results

235 | - Full result set access via [claude-local-files](https://github.com/runekaagaard/claude-local-files) integration

236 | - Clean NULL value display

237 | - ISO formatted dates

238 | - Clear row separation

239 |

240 | ## Claude Local Files

241 |

242 | When [claude-local-files](https://github.com/runekaagaard/claude-local-files) is configured:

243 |

244 | - Access complete result sets beyond Claude's context window

245 | - Generate detailed reports and visualizations

246 | - Perform deep analysis on large datasets

247 | - Export results for further processing

248 |

249 | The integration automatically activates when `CLAUDE_LOCAL_FILES_PATH` is set.

250 |

251 | ## Developing

252 |

253 | First clone the github repository, install the dependencies and your database driver(s) of choice:

254 |

255 | ```

256 | git clone [email protected]:runekaagaard/mcp-alchemy.git

257 | cd mcp-alchemy

258 | uv sync

259 | uv pip install psycopg2-binary

260 | ```

261 |

262 | Then set this in claude_desktop_config.json:

263 |

264 | ```

265 | ...

266 | "command": "uv",

267 | "args": ["run", "--directory", "/path/to/mcp-alchemy", "-m", "mcp_alchemy.server", "main"],

268 | ...

269 | ```

270 |

271 | ## My Other LLM Projects

272 |

273 | - **[MCP Redmine](https://github.com/runekaagaard/mcp-redmine)** - Let Claude Desktop manage your Redmine projects and issues.

274 | - **[MCP Notmuch Sendmail](https://github.com/runekaagaard/mcp-notmuch-sendmail)** - Email assistant for Claude Desktop using notmuch.

275 | - **[Diffpilot](https://github.com/runekaagaard/diffpilot)** - Multi-column git diff viewer with file grouping and tagging.

276 | - **[Claude Local Files](https://github.com/runekaagaard/claude-local-files)** - Access local files in Claude Desktop artifacts.

277 |

278 | ## MCP Directory Listings

279 |

280 | MCP Alchemy is listed in the following MCP directory sites and repositories:

281 |

282 | - [PulseMCP](https://www.pulsemcp.com/servers/runekaagaard-alchemy)

283 | - [Glama](https://glama.ai/mcp/servers/@runekaagaard/mcp-alchemy)

284 | - [MCP.so](https://mcp.so/server/mcp-alchemy)

285 | - [MCP Archive](https://mcp-archive.com/server/mcp-alchemy)

286 | - [Playbooks MCP](https://playbooks.com/mcp/runekaagaard-alchemy)

287 | - [Awesome MCP Servers](https://github.com/punkpeye/awesome-mcp-servers)

288 |

289 | ## Contributing

290 |

291 | Contributions are warmly welcomed! Whether it's bug reports, feature requests, documentation improvements, or code contributions - all input is valuable. Feel free to:

292 |

293 | - Open an issue to report bugs or suggest features

294 | - Submit pull requests with improvements

295 | - Enhance documentation or share your usage examples

296 | - Ask questions and share your experiences

297 |

298 | The goal is to make database interaction with Claude even better, and your insights and contributions help achieve that.

299 |

300 | ## License

301 |

302 | Mozilla Public License Version 2.0

303 |

```

--------------------------------------------------------------------------------

/mcp_alchemy/__init__.py:

--------------------------------------------------------------------------------

```python

1 |

```

--------------------------------------------------------------------------------

/tests/claude_desktop_config.json:

--------------------------------------------------------------------------------

```json

1 | {

2 | "mcpServers": {

3 | "chinook_sqlite": {

4 | "command": "uv",

5 | "args": [

6 | "--directory",

7 | "/home/r/ws/mcp-alchemy",

8 | "run",

9 | "server.py"

10 | ],

11 | "env": {

12 | "DB_URL": "sqlite:////home/r/ws/mcp-alchemy/tests/Chinook_Sqlite.sqlite"

13 | }

14 | },

15 | "chinook_mysql": {

16 | "command": "uv",

17 | "args": [

18 | "--directory",

19 | "/home/r/ws/mcp-alchemy",

20 | "run",

21 | "server.py"

22 | ],

23 | "env": {

24 | "DB_URL": "mysql+pymysql://chinook:chinook@localhost:3307/Chinook"

25 | }

26 | },

27 | "chinook_postgres": {

28 | "command": "uv",

29 | "args": [

30 | "--directory",

31 | "/home/r/ws/mcp-alchemy",

32 | "run",

33 | "server.py"

34 | ],

35 | "env": {

36 | "DB_URL": "postgresql://chinook:chinook@localhost:5434/chinook_db"

37 | }

38 | }

39 | }

40 | }

41 |

```

--------------------------------------------------------------------------------

/pyproject.toml:

--------------------------------------------------------------------------------

```toml

1 | [project]

2 | name = "mcp-alchemy"

3 | version = "2025.8.15.91819"

4 | description = "A MCP server that connects to your database"

5 | readme = "README.md"

6 | requires-python = ">=3.10"

7 | dependencies = [

8 | "mcp[cli]>=1.2.0rc1",

9 | "sqlalchemy>=2.0.36",

10 | ]

11 | authors = [

12 | { name="Rune Kaagaard" },

13 | ]

14 | classifiers = [

15 | "Programming Language :: Python :: 3",

16 | "Operating System :: OS Independent",

17 | ]

18 | license-files = ["LICENSE"]

19 | packages = [

20 | {include = "mcp_alchemy"}

21 | ]

22 |

23 | [project.scripts]

24 | mcp-alchemy = "mcp_alchemy.server:main"

25 |

26 | [project.urls]

27 | Homepage = "https://github.com/runekaagaard/mcp-alchemy"

28 | Issues = "https://github.com/runekaagaard/mcp-alchemy/issues"

29 | Disussions = "https://github.com/runekaagaard/mcp-alchemy/discussions"

30 |

31 | [build-system]

32 | requires = ["hatchling>=1.27"]

33 | build-backend = "hatchling.build"

34 |

35 | [tool.uv]

36 | package = true

37 |

38 | [dependency-groups]

39 | dev = [

40 | "build>=1.2.2.post1",

41 | "hatchling>=1.27.0",

42 | ]

43 |

```

--------------------------------------------------------------------------------

/tests/docker-compose.yml:

--------------------------------------------------------------------------------

```yaml

1 | version: '3.8'

2 | name: chinook

3 | services:

4 | #----------------------------------------------------------------------------

5 | # MySQL Container

6 | # https://hub.docker.com/_/mysql

7 | # Commands:

8 | # mysql -u chinook -p Chinook

9 | #----------------------------------------------------------------------------

10 | mysql:

11 | image: mysql:latest

12 | environment:

13 | MYSQL_USER: chinook

14 | MYSQL_PASSWORD: chinook

15 | MYSQL_ROOT_PASSWORD: chinook

16 | MYSQL_DATABASE: Chinook

17 | network_mode: "host"

18 | command: --port=3307

19 | volumes:

20 | - ./ChinookDatabase/DataSources/Chinook_MySql.sql:/docker-entrypoint-initdb.d/Chinook_MySql.sql

21 | - ./ChinookDatabase/DataSources/Chinook_MySql_AutoIncrementPKs.sql:/docker-entrypoint-initdb.d/Chinook_MySql_AutoIncrementPKs.sql

22 |

23 | #----------------------------------------------------------------------------

24 | # PostgreSQL Container

25 | # https://hub.docker.com/_/postgres

26 | # Commands:

27 | # psql -U chinook chinook

28 | #----------------------------------------------------------------------------

29 | postgres:

30 | image: postgres:15.3-alpine

31 | environment:

32 | POSTGRES_USER: chinook

33 | POSTGRES_PASSWORD: chinook

34 | POSTGRES_DB: chinook_db

35 | network_mode: "host"

36 | command: -p 5434

37 | volumes:

38 | - ./ChinookDatabase/DataSources/Chinook_PostgreSql.sql:/docker-entrypoint-initdb.d/Chinook_PostgreSql.sql

39 | - ./ChinookDatabase/DataSources/Chinook_PostgreSql_AutoIncrementPKs.sql:/docker-entrypoint-initdb.d/Chinook_PostgreSql_AutoIncrementPKs.sql

40 | - ./ChinookDatabase/DataSources/Chinook_PostgreSql_SerialPKs.sql:/docker-entrypoint-initdb.d/Chinook_PostgreSql_SerialPKs.sql

41 |

```

--------------------------------------------------------------------------------

/TESTS.md:

--------------------------------------------------------------------------------

```markdown

1 | # Testing MCP Alchemy

2 |

3 | This guide explains how to test MCP Alchemy with multiple databases using Docker and Claude Desktop.

4 |

5 | ## Setup Test Databases

6 |

7 | 1. Start the test databases using docker-compose:

8 | ```bash

9 | cd tests

10 | docker-compose up -d

11 | ```

12 |

13 | This will create:

14 | - MySQL database on port 3307

15 | - PostgreSQL database on port 5433

16 | - The Chinook sample database will be loaded into both

17 |

18 | 2. Verify the databases are running:

19 | ```bash

20 | # Check MySQL

21 | mysql -h 127.0.0.1 -P 3307 -u chinook -pchinook Chinook -e "SELECT COUNT(*) FROM Album;"

22 |

23 | # Check PostgreSQL

24 | PGPASSWORD=chinook psql -h localhost -p 5433 -U chinook chinook_db -c "SELECT COUNT(*) FROM \"Album\";"

25 | ```

26 |

27 | ## Configure Claude Desktop

28 |

29 | The provided `tests/claude_desktop_config.json` contains configurations for:

30 | - SQLite Chinook database

31 | - MySQL Chinook database

32 | - PostgreSQL Chinook database

33 |

34 | Copy it to your Claude Desktop config location:

35 | ```bash

36 | cp tests/claude_desktop_config.json ~/.config/claude-desktop/config.json

37 | ```

38 |

39 | ## Sample Test Prompt

40 |

41 | Here's a comprehensive prompt to test all three databases:

42 |

43 | ```

44 | I'd like to explore the Chinook database across different database engines. Let's:

45 |

46 | 1. First, list all tables in each database (SQLite, MySQL, and PostgreSQL) to verify they're identical

47 | 2. Get the schema for the Album and Artist tables from each database

48 | 3. Run this query on each database:

49 | SELECT ar.Name as ArtistName, COUNT(al.AlbumId) as AlbumCount

50 | FROM Artist ar

51 | LEFT JOIN Album al ON ar.ArtistId = al.ArtistId

52 | GROUP BY ar.ArtistId, ar.Name

53 | HAVING COUNT(al.AlbumId) > 5

54 | ORDER BY AlbumCount DESC;

55 | 4. Compare the results - they should be identical across all three databases

56 |

57 | Can you help me with this analysis?

58 | ```

59 |

60 | This will test:

61 | - Database connectivity to all three databases

62 | - Table listing functionality

63 | - Schema inspection

64 | - Complex query execution

65 | - Result formatting

66 | - Cross-database consistency

67 |

68 | ## Expected Results

69 |

70 | The results should show:

71 | - 11 tables in each database

72 | - Identical schema definitions

73 | - Same query results across all databases

74 | - Proper handling of NULL values and formatting

75 |

76 | If any discrepancies are found, check:

77 | 1. Docker container status

78 | 2. Database connection strings

79 | 3. Database initialization scripts

80 |

```

--------------------------------------------------------------------------------

/tests/test_report.md:

--------------------------------------------------------------------------------

```markdown

1 | # MCP Alchemy Test Report

2 |

3 | ## Overview

4 |

5 | This report documents the testing of MCP Alchemy, a tool that connects Claude Desktop directly to databases, allowing Claude to explore database structures, write and validate SQL queries, display relationships between tables, and analyze large datasets.

6 |

7 | **Test Date:** July 9, 2025

8 | **MCP Alchemy Version:** 2025.6.19.201831 (with connection pooling improvements)

9 | **Database Engine:** MySQL 5.7.36

10 |

11 | ## Test Environment

12 |

13 | Testing was performed on a MySQL database with the following characteristics:

14 | - Over 350 tables

15 | - Complex schema with extensive relationships

16 | - Real-world data structure with various data types

17 |

18 | ## Features Tested

19 |

20 | ### 1. Database Information Retrieval

21 |

22 | - **Get Database Info**: Successfully verified connection information

23 | - **List All Tables**: Retrieved complete list of all 367 database tables

24 | - **Filter Tables**: Successfully filtered tables by substring pattern

25 |

26 | ### 2. Schema Analysis

27 |

28 | - **Table Schema Definition**: Successfully retrieved detailed schema including:

29 | - Column names and types

30 | - Primary keys

31 | - Foreign key relationships

32 | - Nullable flags

33 | - Default values

34 |

35 | - **Complex Table Relationships**: Successfully mapped and displayed relationships between tables

36 |

37 | ### 3. Query Execution

38 |

39 | - **Basic Queries**: Executed simple SELECT queries to retrieve data

40 | - **Parameterized Queries**: Successfully used parameterized queries with the params argument

41 | - **Complex Joins**: Successfully performed queries with multiple joins across related tables

42 | - **Error Handling**: Properly handled and reported errors for invalid queries

43 | - **SQL Injection Protection**: Verified that SQL injection attempts are properly neutralized

44 |

45 | ### 4. Output Formatting

46 |

47 | - **Vertical Display Format**: Confirmed that query results are displayed in clear vertical format

48 | - **NULL Value Display**: Properly formats NULL values as "NULL"

49 | - **Row Counting**: Correctly displays the number of rows returned

50 | - **Output Truncation**: Properly truncates large result sets with appropriate notifications

51 | - **Full Result Access**: Successfully generated URLs for complete result sets via claude-local-files

52 |

53 | ## Test Results

54 |

55 | ### Functionality Tests

56 |

57 | | Feature | Status | Notes |

58 | |---------|--------|-------|

59 | | Database Connection | ✅ Pass | Successfully connected to MySQL 5.7.36 |

60 | | Table Listing | ✅ Pass | Successfully retrieved all 367 tables |

61 | | Table Filtering | ✅ Pass | Correctly filtered tables by substring |

62 | | Schema Definition | ✅ Pass | Retrieved detailed schema with relationships |

63 | | Basic Queries | ✅ Pass | Successfully executed simple SELECT queries |

64 | | Parameterized Queries | ✅ Pass | Parameterized queries worked correctly |

65 | | Complex Joins | ✅ Pass | Successfully joined multiple tables |

66 | | Error Handling | ✅ Pass | Properly handled and reported query errors |

67 | | SQL Injection Protection | ✅ Pass | Parameters sanitized correctly |

68 | | Result Formatting | ✅ Pass | Clean vertical format with row numbers |

69 | | Truncation | ✅ Pass | Large results properly truncated |

70 | | Full Result Access | ✅ Pass | Generated valid URLs for complete result access |

71 |

72 | ### Edge Cases Tested

73 |

74 | | Test Case | Result | Notes |

75 | |-----------|--------|-------|

76 | | Query with no results | ✅ Pass | Returns "No rows returned" |

77 | | Invalid table query | ✅ Pass | Returns appropriate error message |

78 | | SQL syntax error | ✅ Pass | Returns detailed error with location |

79 | | SQL injection attempt | ✅ Pass | Parameters properly sanitized |

80 | | Very large result set | ✅ Pass | Truncates and provides full result URL |

81 | | Unicode/special characters | ✅ Pass | Properly handles non-ASCII data |

82 |

83 | ## Performance Observations

84 |

85 | - Query execution is fast for simple to moderately complex queries

86 | - Schema retrieval performs well even on large tables

87 | - Result truncation works properly for large result sets

88 | - Full result access via URLs provides efficient access to large datasets

89 |

90 | ## Connection Pooling Tests (July 9, 2025) - UPDATED

91 |

92 | ### Initial Test (Before Fix Applied)

93 |

94 | | Test | Result | Notes |

95 | |------|--------|-------|

96 | | Initial Connection Count | ✅ Pass | Started with 3 threads connected |

97 | | Connection Reuse | ❌ Fail | Connections incrementing (3→7) suggesting new engines being created |

98 | | Connection ID Tracking | ✅ Pass | Different connection IDs showing connection creation |

99 | | Pool Behavior | ❌ Fail | Connections kept growing instead of stabilizing |

100 |

101 | ### Final Test (After Fix Applied)

102 |

103 | | Test | Result | Notes |

104 | |------|--------|-------|

105 | | Version Tracking | ✅ Pass | @mcp_alchemy_version = '2025.6.19.201831' correctly set |

106 | | Engine Reuse | ✅ Pass | Single engine instance reused across all requests |

107 | | Connection Pool Size | ✅ Pass | Stabilized at expected count (pool_size + overflow) |

108 | | Connection Rotation | ✅ Pass | Pool properly rotates connections (different IDs but stable count) |

109 | | All Core Features | ✅ Pass | all_table_names, filter_table_names, schema_definitions, execute_query |

110 | | Parameterized Queries | ✅ Pass | SQL injection protection working correctly |

111 | | Error Handling | ✅ Pass | Errors properly caught and reported |

112 |

113 | ### Observations

114 |

115 | The connection pooling fix is working correctly:

116 | - Single ENGINE instance is created and reused (confirmed via debugging)

117 | - Connection count stabilizes at pool configuration limits

118 | - Different connection IDs are normal - SQLAlchemy rotates through pool connections

119 | - All features continue to work properly with the new pooling implementation

120 |

121 | ### Key Improvements

122 |

123 | 1. **Resource Efficiency**: No more connection exhaustion after 5 queries

124 | 2. **Reliability**: Automatic reconnection on database failures

125 | 3. **Performance**: Connection reuse reduces overhead

126 | 4. **Monitoring**: Version tracking via @mcp_alchemy_version session variable

127 |

128 | ## Conclusion

129 |

130 | MCP Alchemy successfully passed all functional tests. It correctly connects to MySQL databases, retrieves schema information, executes queries with proper parameter handling, and formats results clearly. The SQL injection protection works as expected, properly sanitizing user input.

131 |

132 | The tool is well-suited for its intended purpose: allowing Claude to interact with databases and assist users with database exploration, query execution, and data analysis.

133 |

```

--------------------------------------------------------------------------------

/tests/test.py:

--------------------------------------------------------------------------------

```python

1 | import shutil, os, difflib, sys

2 |

3 | from mcp_alchemy.server import *

4 |

5 | d = dict

6 |

7 | GDI1 = """

8 | Connected to sqlite version 3.37.2 database tests/Chinook_Sqlite.sqlite.

9 | """

10 |

11 | ATN1 = "Album, Artist, Customer, Employee, Genre, Invoice, InvoiceLine, MediaType, Playlist, PlaylistTrack, Track"

12 |

13 | FTN1 = "MediaType, Playlist, PlaylistTrack, Track"

14 |

15 | SD1 = """

16 | Customer:

17 | CustomerId: primary key, INTEGER, primary_key=1

18 | FirstName: NVARCHAR(40)

19 | LastName: NVARCHAR(20)

20 | Company: NVARCHAR(80), nullable

21 | Address: NVARCHAR(70), nullable

22 | City: NVARCHAR(40), nullable

23 | State: NVARCHAR(40), nullable

24 | Country: NVARCHAR(40), nullable

25 | PostalCode: NVARCHAR(10), nullable

26 | Phone: NVARCHAR(24), nullable

27 | Fax: NVARCHAR(24), nullable

28 | Email: NVARCHAR(60)

29 | SupportRepId: INTEGER, nullable

30 |

31 | Relationships:

32 | SupportRepId -> Employee.EmployeeId

33 | Track:

34 | TrackId: primary key, INTEGER, primary_key=1

35 | Name: NVARCHAR(200)

36 | AlbumId: INTEGER, nullable

37 | MediaTypeId: INTEGER

38 | GenreId: INTEGER, nullable

39 | Composer: NVARCHAR(220), nullable

40 | Milliseconds: INTEGER

41 | Bytes: INTEGER, nullable

42 | UnitPrice: NUMERIC(10, 2)

43 |

44 | Relationships:

45 | MediaTypeId -> MediaType.MediaTypeId

46 | GenreId -> Genre.GenreId

47 | AlbumId -> Album.AlbumId

48 | """

49 |

50 | EQ1 = """

51 | 1. row

52 | AlbumId: 1

53 | Title: For Those About To Rock We Salute You

54 | ArtistId: 1

55 |

56 | 2. row

57 | AlbumId: 2

58 | Title: Balls to the Wall

59 | ArtistId: 2

60 |

61 | Result: 2 rows

62 | """

63 |

64 | BASE2 = """

65 | 1. row

66 | CustomerId: 1

67 | FirstName: Luís

68 | LastName: Gonçalves

69 | Company: Embraer - Empresa Brasileira de Aeronáutica S.A.

70 | Address: Av. Brigadeiro Faria Lima, 2170

71 | City: São José dos Campos

72 | State: SP

73 | Country: Brazil

74 | PostalCode: 12227-000

75 | Phone: +55 (12) 3923-5555

76 | Fax: +55 (12) 3923-5566

77 | Email: [email protected]

78 | SupportRepId: 3

79 |

80 | 2. row

81 | CustomerId: 2

82 | FirstName: Leonie

83 | LastName: Köhler

84 | Company: NULL

85 | Address: Theodor-Heuss-Straße 34

86 | City: Stuttgart

87 | State: NULL

88 | Country: Germany

89 | PostalCode: 70174

90 | Phone: +49 0711 2842222

91 | Fax: NULL

92 | Email: [email protected]

93 | SupportRepId: 5

94 |

95 | 3. row

96 | CustomerId: 3

97 | FirstName: François

98 | LastName: Tremblay

99 | Company: NULL

100 | Address: 1498 rue Bélanger

101 | City: Montréal

102 | State: QC

103 | Country: Canada

104 | PostalCode: H2G 1A7

105 | Phone: +1 (514) 721-4711

106 | Fax: NULL

107 | Email: [email protected]

108 | SupportRepId: 3

109 |

110 | 4. row

111 | CustomerId: 4

112 | FirstName: Bjørn

113 | LastName: Hansen

114 | Company: NULL

115 | Address: Ullevålsveien 14

116 | City: Oslo

117 | State: NULL

118 | Country: Norway

119 | PostalCode: 0171

120 | Phone: +47 22 44 22 22

121 | Fax: NULL

122 | Email: [email protected]

123 | SupportRepId: 4

124 |

125 | 5. row

126 | CustomerId: 5

127 | FirstName: František

128 | LastName: Wichterlová

129 | Company: JetBrains s.r.o.

130 | Address: Klanova 9/506

131 | City: Prague

132 | State: NULL

133 | Country: Czech Republic

134 | PostalCode: 14700

135 | Phone: +420 2 4172 5555

136 | Fax: +420 2 4172 5555

137 | Email: [email protected]

138 | SupportRepId: 4

139 |

140 | 6. row

141 | CustomerId: 6

142 | FirstName: Helena

143 | LastName: Holý

144 | Company: NULL

145 | Address: Rilská 3174/6

146 | City: Prague

147 | State: NULL

148 | Country: Czech Republic

149 | PostalCode: 14300

150 | Phone: +420 2 4177 0449

151 | Fax: NULL

152 | Email: [email protected]

153 | SupportRepId: 5

154 |

155 | 7. row

156 | CustomerId: 7

157 | FirstName: Astrid

158 | LastName: Gruber

159 | Company: NULL

160 | Address: Rotenturmstraße 4, 1010 Innere Stadt

161 | City: Vienne

162 | State: NULL

163 | Country: Austria

164 | PostalCode: 1010

165 | Phone: +43 01 5134505

166 | Fax: NULL

167 | Email: [email protected]

168 | SupportRepId: 5

169 |

170 | 8. row

171 | CustomerId: 8

172 | FirstName: Daan

173 | LastName: Peeters

174 | Company: NULL

175 | Address: Grétrystraat 63

176 | City: Brussels

177 | State: NULL

178 | Country: Belgium

179 | PostalCode: 1000

180 | Phone: +32 02 219 03 03

181 | Fax: NULL

182 | Email: [email protected]

183 | SupportRepId: 4

184 |

185 | 9. row

186 | CustomerId: 9

187 | FirstName: Kara

188 | LastName: Nielsen

189 | Company: NULL

190 | Address: Sønder Boulevard 51

191 | City: Copenhagen

192 | State: NULL

193 | Country: Denmark

194 | PostalCode: 1720

195 | Phone: +453 3331 9991

196 | Fax: NULL

197 | Email: [email protected]

198 | SupportRepId: 4

199 |

200 | 10. row

201 | CustomerId: 10

202 | FirstName: Eduardo

203 | LastName: Martins

204 | Company: Woodstock Discos

205 | Address: Rua Dr. Falcão Filho, 155

206 | City: São Paulo

207 | State: SP

208 | Country: Brazil

209 | PostalCode: 01007-010

210 | Phone: +55 (11) 3033-5446

211 | Fax: +55 (11) 3033-4564

212 | Email: [email protected]

213 | SupportRepId: 4

214 |

215 | 11. row

216 | CustomerId: 11

217 | FirstName: Alexandre

218 | LastName: Rocha

219 | Company: Banco do Brasil S.A.

220 | Address: Av. Paulista, 2022

221 | City: São Paulo

222 | State: SP

223 | Country: Brazil

224 | PostalCode: 01310-200

225 | Phone: +55 (11) 3055-3278

226 | Fax: +55 (11) 3055-8131

227 | Email: [email protected]

228 | SupportRepId: 5

229 |

230 | 12. row

231 | CustomerId: 12

232 | FirstName: Roberto

233 | LastName: Almeida

234 | Company: Riotur

235 | Address: Praça Pio X, 119

236 | City: Rio de Janeiro

237 | State: RJ

238 | Country: Brazil

239 | PostalCode: 20040-020

240 | Phone: +55 (21) 2271-7000

241 | Fax: +55 (21) 2271-7070

242 | Email: [email protected]

243 | SupportRepId: 3

244 |

245 | 13. row

246 | CustomerId: 13

247 | FirstName: Fernanda

248 | LastName: Ramos

249 | Company: NULL

250 | Address: Qe 7 Bloco G

251 | City: Brasília

252 | State: DF

253 | Country: Brazil

254 | PostalCode: 71020-677

255 | Phone: +55 (61) 3363-5547

256 | Fax: +55 (61) 3363-7855

257 | Email: [email protected]

258 | SupportRepId: 4

259 |

260 | 14. row

261 | CustomerId: 14

262 | FirstName: Mark

263 | LastName: Philips

264 | Company: Telus

265 | Address: 8210 111 ST NW

266 | City: Edmonton

267 | State: AB

268 | Country: Canada

269 | PostalCode: T6G 2C7

270 | Phone: +1 (780) 434-4554

271 | Fax: +1 (780) 434-5565

272 | Email: [email protected]

273 | SupportRepId: 5

274 |

275 | 15. row

276 | CustomerId: 15

277 | FirstName: Jennifer

278 | LastName: Peterson

279 | Company: Rogers Canada

280 | Address: 700 W Pender Street

281 | City: Vancouver

282 | State: BC

283 | Country: Canada

284 | PostalCode: V6C 1G8

285 | Phone: +1 (604) 688-2255

286 | Fax: +1 (604) 688-8756

287 | Email: [email protected]

288 | SupportRepId: 3

289 | """

290 |

291 | EQ2 = BASE2 + """

292 | Result: showing first 15 rows (output truncated)

293 | """

294 |

295 | EQ2B = BASE2 + """

296 | Result: 59 rows (output truncated)

297 | Full result set url: https://cdn.jsdelivr.net/pyodide/claude-local-files/38d911af2df61f48ae5850491aaa32aff40569233d6aa2a870960a45108067ff.json (format: [[row1_value1, row1_value2, ...], [row2_value1, row2_value2, ...], ...]]) (ALWAYS prefer fetching this url in artifacts instead of hardcoding the values if at all possible)"""

298 |

299 | EQ3 = """

300 | Error: (sqlite3.OperationalError) no such column: id

301 | [SQL: SELECT * FROM Customer WHERE id=1]

302 | (Background on this error at: https://sqlalche.me/e/20/e3q8)

303 | """

304 |

305 | EQ4 = """

306 | 1. row

307 | AlbumId: 5

308 | Title: Big Ones

309 | ArtistId: 3

310 |

311 | Result: 1 rows

312 | """

313 |

314 | EQ5 = """

315 | Error: (sqlite3.OperationalError) near "ZOOP": syntax error

316 | [SQL: ZOOP BOOP LOOP]

317 | (Background on this error at: https://sqlalche.me/e/20/e3q8)

318 | """

319 |

320 | EQMC1 = """

321 | 1. row

322 | AlbumId: 1

323 | Title: For Those About To Rock We Salute You

324 | ArtistId: 1

325 |

326 | Result: showing first 1 rows (output truncated)

327 | """

328 |

329 | def h1(s):

330 | print(s)

331 | print("=" * len(s))

332 | print()

333 |

334 | def diff(wanted_result, actual_result):

335 | """Show git-like diff between two strings."""

336 | diff_lines = difflib.unified_diff(wanted_result.splitlines(keepends=True),

337 | actual_result.splitlines(keepends=True), fromfile='wanted_result',

338 | tofile='actual_result', n=3)

339 |

340 | return ''.join(line.replace('\n', '') + '\n' for line in diff_lines)

341 |

342 | def test_func(func, tests):

343 | for args, wanted_result in tests:

344 | wanted_result = wanted_result.strip()

345 | actual_result = func(*args)

346 | if actual_result != wanted_result:

347 | print(f"{func.__name__}({args})")

348 | h1("Wanted result")

349 | print(wanted_result)

350 | h1("Actual result")

351 | print(actual_result)

352 | h1("Diff")

353 | print(diff(wanted_result, actual_result))

354 | sys.exit(1)

355 |

356 | def main():

357 | test_func(get_db_info, [([], GDI1)])

358 | test_func(all_table_names, [([], ATN1)])

359 | test_func(filter_table_names, [(["a"], FTN1)])

360 | test_func(schema_definitions, [([["Customer", "Track"]], SD1)])

361 | test_func(execute_query, [

362 | (["SELECT * FROM Album LIMIT 2"], EQ1),

363 | (["SELECT * FROM Customer"], EQ2),

364 | (["SELECT * FROM Customer WHERE id=1"], EQ3),

365 | (["SELECT * FROM Album WHERE AlbumId=:AlbumId", d(AlbumId=5)], EQ4),

366 | (["SELECT * FROM Album WHERE AlbumId=:AlbumId",

367 | d(AlbumId=-1)], "No rows returned"),

368 | (["UPDATE Album SET AlbumId=:AlbumId WHERE AlbumId=:AlbumId",

369 | d(AlbumId=-1)], "Success: 0 rows affected"),

370 | (["ZOOP BOOP LOOP"], EQ5),

371 | ])

372 |

373 | # EXECUTE_QUERY_MAX_CHARS setting

374 | tmp = EXECUTE_QUERY_MAX_CHARS

375 | tests_set_global("EXECUTE_QUERY_MAX_CHARS", 100)

376 | test_func(execute_query, [

377 | (["SELECT * FROM Album LIMIT 2"], EQMC1),

378 | ])

379 | tests_set_global("EXECUTE_QUERY_MAX_CHARS", tmp)

380 |

381 | # CLAUDE_LOCAL_FILES_PATH setting

382 | tmp = "/tmp/mcp-alchemy/claude-local-files"

383 | os.makedirs(tmp, exist_ok=True)

384 | tests_set_global("CLAUDE_LOCAL_FILES_PATH", tmp)

385 | test_func(execute_query, [

386 | (["SELECT * FROM Customer"], EQ2B),

387 | ])

388 | shutil.rmtree(tmp)

389 |

390 | if __name__ == "__main__":

391 | main()

392 |

```

--------------------------------------------------------------------------------

/mcp_alchemy/server.py:

--------------------------------------------------------------------------------

```python

1 | import os, json, hashlib

2 | from datetime import datetime, date

3 |

4 | from mcp.server.fastmcp import FastMCP

5 | from mcp.server.fastmcp.utilities.logging import get_logger

6 |

7 | from sqlalchemy import create_engine, inspect, text

8 |

9 | ### Helpers ###

10 |

11 | def tests_set_global(k, v):

12 | globals()[k] = v

13 |

14 | ### Database ###

15 |

16 | logger = get_logger(__name__)

17 | ENGINE = None

18 |

19 | def create_new_engine():

20 | """Create engine with MCP-optimized settings to handle long-running connections"""

21 | db_engine_options = os.environ.get('DB_ENGINE_OPTIONS')

22 | user_options = json.loads(db_engine_options) if db_engine_options else {}

23 |

24 | # MCP-optimized defaults that can be overridden by user

25 | options = {

26 | 'isolation_level': 'AUTOCOMMIT',

27 | # Test connections before use (handles MySQL 8hr timeout, network drops)

28 | 'pool_pre_ping': True,

29 | # Keep minimal connections (MCP typically handles one request at a time)

30 | 'pool_size': 1,

31 | # Allow temporary burst capacity for edge cases

32 | 'max_overflow': 2,

33 | # Force refresh connections older than 1hr (well under MySQL's 8hr default)

34 | 'pool_recycle': 3600,

35 | # User can override any of the above

36 | **user_options

37 | }

38 |

39 | return create_engine(os.environ['DB_URL'], **options)

40 |

41 | def get_connection():

42 | global ENGINE

43 |

44 | try:

45 | try:

46 | if ENGINE is None:

47 | ENGINE = create_new_engine()

48 |

49 | connection = ENGINE.connect()

50 |

51 | # Set version variable for databases that support it

52 | try:

53 | connection.execute(text(f"SET @mcp_alchemy_version = '{VERSION}'"))

54 | except Exception:

55 | # Some databases don't support session variables

56 | pass

57 |

58 | return connection

59 |

60 | except Exception as e:

61 | logger.warning(f"First connection attempt failed: {e}")

62 |

63 | # Database might have restarted or network dropped - start fresh

64 | if ENGINE is not None:

65 | try:

66 | ENGINE.dispose()

67 | except Exception:

68 | pass

69 |

70 | # One retry with fresh engine handles most transient failures

71 | ENGINE = create_new_engine()

72 | connection = ENGINE.connect()

73 |

74 | return connection

75 |

76 | except Exception as e:

77 | logger.exception("Failed to get database connection after retry")

78 | raise

79 |

80 | def get_db_info():

81 | with get_connection() as conn:

82 | engine = conn.engine

83 | url = engine.url

84 | result = [

85 | f"Connected to {engine.dialect.name}",

86 | f"version {'.'.join(str(x) for x in engine.dialect.server_version_info)}",

87 | f"database {url.database}",

88 | ]

89 |

90 | if url.host:

91 | result.append(f"on {url.host}")

92 |

93 | if url.username:

94 | result.append(f"as user {url.username}")

95 |

96 | return " ".join(result) + "."

97 |

98 | ### Constants ###

99 |

100 | VERSION = "2025.8.15.91819"

101 | DB_INFO = get_db_info()

102 | EXECUTE_QUERY_MAX_CHARS = int(os.environ.get('EXECUTE_QUERY_MAX_CHARS', 4000))

103 | CLAUDE_LOCAL_FILES_PATH = os.environ.get('CLAUDE_LOCAL_FILES_PATH')

104 |

105 | ### MCP ###

106 |

107 | mcp = FastMCP("MCP Alchemy")

108 | get_logger(__name__).info(f"Starting MCP Alchemy version {VERSION}")

109 |

110 | @mcp.tool(description=f"Return all table names in the database separated by comma. {DB_INFO}")

111 | def all_table_names() -> str:

112 | with get_connection() as conn:

113 | inspector = inspect(conn)

114 | return ", ".join(inspector.get_table_names())

115 |

116 | @mcp.tool(

117 | description=f"Return all table names in the database containing the substring 'q' separated by comma. {DB_INFO}"

118 | )

119 | def filter_table_names(q: str) -> str:

120 | with get_connection() as conn:

121 | inspector = inspect(conn)

122 | return ", ".join(x for x in inspector.get_table_names() if q in x)

123 |

124 | @mcp.tool(description=f"Returns schema and relation information for the given tables. {DB_INFO}")

125 | def schema_definitions(table_names: list[str]) -> str:

126 | def format(inspector, table_name):

127 | columns = inspector.get_columns(table_name)

128 | foreign_keys = inspector.get_foreign_keys(table_name)

129 | primary_keys = set(inspector.get_pk_constraint(table_name)["constrained_columns"])

130 | result = [f"{table_name}:"]

131 |

132 | # Process columns

133 | show_key_only = {"nullable", "autoincrement"}

134 | for column in columns:

135 | if "comment" in column:

136 | del column["comment"]

137 | name = column.pop("name")

138 | column_parts = (["primary key"] if name in primary_keys else []) + [str(

139 | column.pop("type"))] + [k if k in show_key_only else f"{k}={v}" for k, v in column.items() if v]

140 | result.append(f" {name}: " + ", ".join(column_parts))

141 |

142 | # Process relationships

143 | if foreign_keys:

144 | result.extend(["", " Relationships:"])

145 | for fk in foreign_keys:

146 | constrained_columns = ", ".join(fk['constrained_columns'])

147 | referred_table = fk['referred_table']

148 | referred_columns = ", ".join(fk['referred_columns'])

149 | result.append(f" {constrained_columns} -> {referred_table}.{referred_columns}")

150 |

151 | return "\n".join(result)

152 |

153 | with get_connection() as conn:

154 | inspector = inspect(conn)

155 | return "\n".join(format(inspector, table_name) for table_name in table_names)

156 |

157 | def execute_query_description():

158 | parts = [

159 | f"Execute a SQL query and return results in a readable format. Results will be truncated after {EXECUTE_QUERY_MAX_CHARS} characters."

160 | ]

161 | if CLAUDE_LOCAL_FILES_PATH:

162 | parts.append("Claude Desktop may fetch the full result set via an url for analysis and artifacts.")

163 | parts.append(

164 | "IMPORTANT: You MUST use the params parameter for query parameter substitution (e.g. 'WHERE id = :id' with "

165 | "params={'id': 123}) to prevent SQL injection. Direct string concatenation is a serious security risk.")

166 | parts.append(DB_INFO)

167 | return " ".join(parts)

168 |

169 | @mcp.tool(description=execute_query_description())

170 | def execute_query(query: str, params: dict = {}) -> str:

171 | def format_value(val):

172 | """Format a value for display, handling None and datetime types"""

173 | if val is None:

174 | return "NULL"

175 | if isinstance(val, (datetime, date)):

176 | return val.isoformat()

177 | return str(val)

178 |

179 | def format_result(cursor_result):

180 | """Format rows in a clean vertical format"""

181 | result, full_results = [], []

182 | size, i, did_truncate = 0, 0, False

183 |

184 | i = 0

185 | while row := cursor_result.fetchone():

186 | i += 1

187 | if CLAUDE_LOCAL_FILES_PATH:

188 | full_results.append(row)

189 | if did_truncate:

190 | continue

191 |

192 | sub_result = []

193 | sub_result.append(f"{i}. row")

194 | for col, val in zip(cursor_result.keys(), row):

195 | sub_result.append(f"{col}: {format_value(val)}")

196 |

197 | sub_result.append("")

198 |

199 | size += sum(len(x) + 1 for x in sub_result) # +1 is for line endings

200 |

201 | if size > EXECUTE_QUERY_MAX_CHARS:

202 | did_truncate = True

203 | if not CLAUDE_LOCAL_FILES_PATH:

204 | break

205 | else:

206 | result.extend(sub_result)

207 |

208 | if i == 0:

209 | return ["No rows returned"], full_results

210 | elif did_truncate:

211 | if CLAUDE_LOCAL_FILES_PATH:

212 | result.append(f"Result: {i} rows (output truncated)")

213 | else:

214 | result.append(f"Result: showing first {i-1} rows (output truncated)")

215 | return result, full_results

216 | else:

217 | result.append(f"Result: {i} rows")

218 | return result, full_results

219 |

220 | def save_full_results(full_results):

221 | """Save complete result set for Claude if configured"""

222 | if not CLAUDE_LOCAL_FILES_PATH:

223 | return None

224 |

225 | def serialize_row(row):

226 | return [format_value(val) for val in row]

227 |

228 | data = [serialize_row(row) for row in full_results]

229 | file_hash = hashlib.sha256(json.dumps(data).encode()).hexdigest()

230 | file_name = f"{file_hash}.json"

231 |

232 | with open(os.path.join(CLAUDE_LOCAL_FILES_PATH, file_name), 'w') as f:

233 | json.dump(data, f)

234 |

235 | return (

236 | f"Full result set url: https://cdn.jsdelivr.net/pyodide/claude-local-files/{file_name}"

237 | " (format: [[row1_value1, row1_value2, ...], [row2_value1, row2_value2, ...], ...]])"

238 | " (ALWAYS prefer fetching this url in artifacts instead of hardcoding the values if at all possible)")

239 |

240 | try:

241 | with get_connection() as connection:

242 | cursor_result = connection.execute(text(query), params)

243 |

244 | if not cursor_result.returns_rows:

245 | return f"Success: {cursor_result.rowcount} rows affected"

246 |

247 | output, full_results = format_result(cursor_result)

248 |

249 | if full_results_message := save_full_results(full_results):

250 | output.append(full_results_message)

251 |

252 | return "\n".join(output)

253 | except Exception as e:

254 | return f"Error: {str(e)}"

255 |

256 | def main():

257 | mcp.run()

258 |

259 | if __name__ == "__main__":

260 | main()

261 |

```