This is page 1 of 4. Use http://codebase.md/zilliztech/claude-context?page={x} to view the full context.

# Directory Structure

```

├── .env.example

├── .eslintrc.js

├── .github

│ ├── ISSUE_TEMPLATE

│ │ └── bug_report.md

│ └── workflows

│ ├── ci.yml

│ └── release.yml

├── .gitignore

├── .npmrc

├── .vscode

│ ├── extensions.json

│ ├── launch.json

│ ├── settings.json

│ └── tasks.json

├── assets

│ ├── Architecture.png

│ ├── claude-context.png

│ ├── docs

│ │ ├── file-inclusion-flow.png

│ │ ├── indexing-flow-diagram.png

│ │ └── indexing-sequence-diagram.png

│ ├── file_synchronizer.png

│ ├── mcp_efficiency_analysis_chart.png

│ ├── signup_and_create_cluster.jpeg

│ ├── signup_and_get_apikey.png

│ ├── vscode-setup.png

│ └── zilliz_cloud_dashboard.jpeg

├── build-benchmark.json

├── CONTRIBUTING.md

├── docs

│ ├── dive-deep

│ │ ├── asynchronous-indexing-workflow.md

│ │ └── file-inclusion-rules.md

│ ├── getting-started

│ │ ├── environment-variables.md

│ │ ├── prerequisites.md

│ │ └── quick-start.md

│ ├── README.md

│ └── troubleshooting

│ ├── faq.md

│ └── troubleshooting-guide.md

├── evaluation

│ ├── .python-version

│ ├── analyze_and_plot_mcp_efficiency.py

│ ├── case_study

│ │ ├── django_14170

│ │ │ ├── both_conversation.log

│ │ │ ├── both_result.json

│ │ │ ├── grep_conversation.log

│ │ │ ├── grep_result.json

│ │ │ └── README.md

│ │ ├── pydata_xarray_6938

│ │ │ ├── both_conversation.log

│ │ │ ├── both_result.json

│ │ │ ├── grep_conversation.log

│ │ │ ├── grep_result.json

│ │ │ └── README.md

│ │ └── README.md

│ ├── client.py

│ ├── generate_subset_json.py

│ ├── pyproject.toml

│ ├── README.md

│ ├── retrieval

│ │ ├── __init__.py

│ │ ├── base.py

│ │ └── custom.py

│ ├── run_evaluation.py

│ ├── servers

│ │ ├── __init__.py

│ │ ├── edit_server.py

│ │ ├── grep_server.py

│ │ └── read_server.py

│ ├── utils

│ │ ├── __init__.py

│ │ ├── constant.py

│ │ ├── file_management.py

│ │ ├── format.py

│ │ └── llm_factory.py

│ └── uv.lock

├── examples

│ ├── basic-usage

│ │ ├── index.ts

│ │ ├── package.json

│ │ └── README.md

│ └── README.md

├── LICENSE

├── package.json

├── packages

│ ├── chrome-extension

│ │ ├── CONTRIBUTING.md

│ │ ├── package.json

│ │ ├── README.md

│ │ ├── src

│ │ │ ├── background.ts

│ │ │ ├── config

│ │ │ │ └── milvusConfig.ts

│ │ │ ├── content.ts

│ │ │ ├── icons

│ │ │ │ ├── icon128.png

│ │ │ │ ├── icon16.png

│ │ │ │ ├── icon32.png

│ │ │ │ └── icon48.png

│ │ │ ├── manifest.json

│ │ │ ├── milvus

│ │ │ │ └── chromeMilvusAdapter.ts

│ │ │ ├── options.html

│ │ │ ├── options.ts

│ │ │ ├── storage

│ │ │ │ └── indexedRepoManager.ts

│ │ │ ├── stubs

│ │ │ │ └── milvus-vectordb-stub.ts

│ │ │ ├── styles.css

│ │ │ └── vm-stub.js

│ │ ├── tsconfig.json

│ │ └── webpack.config.js

│ ├── core

│ │ ├── CONTRIBUTING.md

│ │ ├── package.json

│ │ ├── README.md

│ │ ├── src

│ │ │ ├── context.ts

│ │ │ ├── embedding

│ │ │ │ ├── base-embedding.ts

│ │ │ │ ├── gemini-embedding.ts

│ │ │ │ ├── index.ts

│ │ │ │ ├── ollama-embedding.ts

│ │ │ │ ├── openai-embedding.ts

│ │ │ │ └── voyageai-embedding.ts

│ │ │ ├── index.ts

│ │ │ ├── splitter

│ │ │ │ ├── ast-splitter.ts

│ │ │ │ ├── index.ts

│ │ │ │ └── langchain-splitter.ts

│ │ │ ├── sync

│ │ │ │ ├── merkle.ts

│ │ │ │ └── synchronizer.ts

│ │ │ ├── types.ts

│ │ │ ├── utils

│ │ │ │ ├── env-manager.ts

│ │ │ │ └── index.ts

│ │ │ └── vectordb

│ │ │ ├── index.ts

│ │ │ ├── milvus-restful-vectordb.ts

│ │ │ ├── milvus-vectordb.ts

│ │ │ ├── types.ts

│ │ │ └── zilliz-utils.ts

│ │ └── tsconfig.json

│ ├── mcp

│ │ ├── CONTRIBUTING.md

│ │ ├── package.json

│ │ ├── README.md

│ │ ├── src

│ │ │ ├── config.ts

│ │ │ ├── embedding.ts

│ │ │ ├── handlers.ts

│ │ │ ├── index.ts

│ │ │ ├── snapshot.ts

│ │ │ ├── sync.ts

│ │ │ └── utils.ts

│ │ └── tsconfig.json

│ └── vscode-extension

│ ├── CONTRIBUTING.md

│ ├── copy-assets.js

│ ├── LICENSE

│ ├── package.json

│ ├── README.md

│ ├── resources

│ │ ├── activity_bar.svg

│ │ └── icon.png

│ ├── src

│ │ ├── commands

│ │ │ ├── indexCommand.ts

│ │ │ ├── searchCommand.ts

│ │ │ └── syncCommand.ts

│ │ ├── config

│ │ │ └── configManager.ts

│ │ ├── extension.ts

│ │ ├── stubs

│ │ │ ├── ast-splitter-stub.js

│ │ │ └── milvus-vectordb-stub.js

│ │ └── webview

│ │ ├── scripts

│ │ │ └── semanticSearch.js

│ │ ├── semanticSearchProvider.ts

│ │ ├── styles

│ │ │ └── semanticSearch.css

│ │ ├── templates

│ │ │ └── semanticSearch.html

│ │ └── webviewHelper.ts

│ ├── tsconfig.json

│ ├── wasm

│ │ ├── tree-sitter-c_sharp.wasm

│ │ ├── tree-sitter-cpp.wasm

│ │ ├── tree-sitter-go.wasm

│ │ ├── tree-sitter-java.wasm

│ │ ├── tree-sitter-javascript.wasm

│ │ ├── tree-sitter-python.wasm

│ │ ├── tree-sitter-rust.wasm

│ │ ├── tree-sitter-scala.wasm

│ │ └── tree-sitter-typescript.wasm

│ └── webpack.config.js

├── pnpm-lock.yaml

├── pnpm-workspace.yaml

├── python

│ ├── README.md

│ ├── test_context.ts

│ ├── test_endtoend.py

│ └── ts_executor.py

├── README.md

├── scripts

│ └── build-benchmark.js

└── tsconfig.json

```

# Files

--------------------------------------------------------------------------------

/evaluation/.python-version:

--------------------------------------------------------------------------------

```

3.10

```

--------------------------------------------------------------------------------

/.npmrc:

--------------------------------------------------------------------------------

```

# Enable shell emulator for cross-platform script execution

shell-emulator=true

# Ignore workspace root check warning (already configured in package.json)

ignore-workspace-root-check=true

# Build performance optimizations

prefer-frozen-lockfile=true

auto-install-peers=true

dedupe-peer-dependents=true

# Enhanced caching

store-dir=~/.pnpm-store

cache-dir=~/.pnpm-cache

# Parallel execution optimization

child-concurrency=4

```

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

```

# Dependencies

node_modules/

*.pnp

.pnp.js

# Production builds

dist/

build/

*.tsbuildinfo

# Environment variables

.env

.env.local

.env.development.local

.env.production.local

# Logs

npm-debug.log*

yarn-debug.log*

yarn-error.log*

pnpm-debug.log*

lerna-debug.log*

# Runtime data

pids

*.pid

*.seed

*.pid.lock

# IDE

# .vscode/

.idea/

*.swp

*.swo

*~

# OS

.DS_Store

.DS_Store?

._*

.Spotlight-V100

.Trashes

ehthumbs.db

Thumbs.db

# Temporary files

*.tmp

*.temp

# Extension specific

*.vsix

*.crx

*.pem

__pycache__/

*.log

!evaluation/case_study/**/*.log

.claude/*

CLAUDE.md

.cursor/*

evaluation/repos

repos

evaluation/retrieval_results*

.venv/

```

--------------------------------------------------------------------------------

/.eslintrc.js:

--------------------------------------------------------------------------------

```javascript

module.exports = {

root: true,

parser: '@typescript-eslint/parser',

plugins: ['@typescript-eslint'],

extends: [

'eslint:recommended',

'@typescript-eslint/recommended',

],

parserOptions: {

ecmaVersion: 2020,

sourceType: 'module',

},

env: {

node: true,

es6: true,

},

rules: {

'@typescript-eslint/no-unused-vars': ['error', { argsIgnorePattern: '^_' }],

'@typescript-eslint/explicit-function-return-type': 'off',

'@typescript-eslint/explicit-module-boundary-types': 'off',

'@typescript-eslint/no-explicit-any': 'warn',

},

ignorePatterns: [

'dist',

'node_modules',

'*.js',

'*.d.ts',

],

};

```

--------------------------------------------------------------------------------

/.env.example:

--------------------------------------------------------------------------------

```

# Claude Context Environment Variables Example

#

# Copy this file to ~/.context/.env as a global setting, modify the values as needed

#

# Usage: cp env.example ~/.context/.env

#

# Note: This file does not work if you put it in your codebase directory (because it may conflict with the environment variables of your codebase project)

# =============================================================================

# Embedding Provider Configuration

# =============================================================================

# Embedding provider: OpenAI, VoyageAI, Gemini, Ollama

EMBEDDING_PROVIDER=OpenAI

# Embedding model (provider-specific)

EMBEDDING_MODEL=text-embedding-3-small

# Embedding batch size for processing (default: 100)

# You can customize it according to the throughput of your embedding model. Generally, larger batch size means less indexing time.

EMBEDDING_BATCH_SIZE=100

# =============================================================================

# OpenAI Configuration

# =============================================================================

# OpenAI API key

OPENAI_API_KEY=your-openai-api-key-here

# OpenAI base URL (optional, for custom endpoints)

# OPENAI_BASE_URL=https://api.openai.com/v1

# =============================================================================

# VoyageAI Configuration

# =============================================================================

# VoyageAI API key

# VOYAGEAI_API_KEY=your-voyageai-api-key-here

# =============================================================================

# Gemini Configuration

# =============================================================================

# Google Gemini API key

# GEMINI_API_KEY=your-gemini-api-key-here

# Gemini API base URL (optional, for custom endpoints)

# GEMINI_BASE_URL=https://generativelanguage.googleapis.com/v1beta

# =============================================================================

# Ollama Configuration

# =============================================================================

# Ollama model name

# OLLAMA_MODEL=

# Ollama host (default: http://localhost:11434)

# OLLAMA_HOST=http://localhost:11434

# =============================================================================

# Vector Database Configuration (Milvus/Zilliz)

# =============================================================================

# Milvus server address. It's optional when you get Zilliz Personal API Key.

MILVUS_ADDRESS=your-zilliz-cloud-public-endpoint

# Milvus authentication token. You can refer to this guide to get Zilliz Personal API Key as your Milvus token.

# https://github.com/zilliztech/claude-context/blob/master/assets/signup_and_get_apikey.png

MILVUS_TOKEN=your-zilliz-cloud-api-key

# =============================================================================

# Code Splitter Configuration

# =============================================================================

# Code splitter type: ast, langchain

SPLITTER_TYPE=ast

# =============================================================================

# Custom File Processing Configuration

# =============================================================================

# Additional file extensions to include beyond defaults (comma-separated)

# Example: .vue,.svelte,.astro,.twig,.blade.php

# CUSTOM_EXTENSIONS=.vue,.svelte,.astro

# Additional ignore patterns to exclude files/directories (comma-separated)

# Example: temp/**,*.backup,private/**,uploads/**

# CUSTOM_IGNORE_PATTERNS=temp/**,*.backup,private/**

# Whether to use hybrid search mode. If true, it will use both dense vector and BM25; if false, it will use only dense vector search.

# HYBRID_MODE=true

```

--------------------------------------------------------------------------------

/examples/README.md:

--------------------------------------------------------------------------------

```markdown

# Claude Context Examples

This directory contains usage examples for Claude Context.

```

--------------------------------------------------------------------------------

/evaluation/case_study/README.md:

--------------------------------------------------------------------------------

```markdown

# Case Study

This directory includes some case analysis. We compare the both method(grep + Claude Context semantic search) and the traditional grep only method.

These cases are selected from the Princeton NLP's [SWE-bench_Verified](https://openai.com/index/introducing-swe-bench-verified/) dataset. The results and the logs are generated by the [run_evaluation.py](../run_evaluation.py) script. For more details, please refer to the [evaluation README.md](../README.md) file.

- 📁 [django_14170](./django_14170/): Query optimization in YearLookup breaks filtering by "__iso_year"

- 📁 [pydata_xarray_6938](./pydata_xarray_6938/): `.swap_dims()` can modify original object

Each case study includes:

- **Original Issue**: The GitHub issue description and requirements

- **Problem Analysis**: Technical breakdown of the bug and expected solution

- **Method Comparison**: Detailed comparison of both approaches

- **Conversation Logs**: The interaction records showing how the LLM agent call the ols and generate the final answer.

- **Results**: Performance metrics and outcome analysis

## Key Results

Compared with traditional grep only, the both method(grep + Claude Context semantic search) is more efficient and accurate.

## Why Grep Fails

1. **Information Overload** - Generates hundreds of irrelevant matches

2. **No Semantic Understanding** - Only literal text matching

3. **Context Loss** - Can't understand code relationships

4. **Inefficient Navigation** - Produces many irrelevant results

## How Grep + Semantic Search Wins

1. **Intelligent Filtering** - Automatically ranks by relevance

2. **Conceptual Understanding** - Grasps code meaning and relationships

3. **Efficient Navigation** - Direct targeting of relevant sections

```

--------------------------------------------------------------------------------

/python/README.md:

--------------------------------------------------------------------------------

```markdown

# Python → TypeScript Claude Context Bridge

A simple utility to call TypeScript Claude Context methods from Python.

## What's This?

This directory contains a basic bridge that allows you to run Claude Context TypeScript functions from Python scripts. It's not a full SDK - just a simple way to test and use the TypeScript codebase from Python.

## Files

- `ts_executor.py` - Executes TypeScript methods from Python

- `test_context.ts` - TypeScript test script with Claude Context workflow

- `test_endtoend.py` - Python script that calls the TypeScript test

## Prerequisites

```bash

# Make sure you have Node.js dependencies installed

cd .. && pnpm install

# Set your OpenAI API key (required for actual indexing)

export OPENAI_API_KEY="your-openai-api-key"

# Optional: Set Milvus address (defaults to localhost:19530)

export MILVUS_ADDRESS="localhost:19530"

```

## Quick Usage

```bash

# Run the end-to-end test

python test_endtoend.py

```

This will:

1. Create embeddings using OpenAI

2. Connect to Milvus vector database

3. Index the `packages/core/src` codebase

4. Perform a semantic search

5. Show results

## Manual Usage

```python

from ts_executor import TypeScriptExecutor

executor = TypeScriptExecutor()

result = executor.call_method(

'./test_context.ts',

'testContextEndToEnd',

{

'openaiApiKey': 'sk-your-key',

'milvusAddress': 'localhost:19530',

'codebasePath': '../packages/core/src',

'searchQuery': 'vector database configuration'

}

)

print(result)

```

## How It Works

1. `ts_executor.py` creates temporary TypeScript wrapper files

2. Runs them with `ts-node`

3. Captures JSON output and returns to Python

4. Supports async functions and complex parameters

That's it! This is just a simple bridge for testing purposes.

```

--------------------------------------------------------------------------------

/docs/README.md:

--------------------------------------------------------------------------------

```markdown

# Claude Context Documentation

Welcome to the Claude Context documentation! Claude Context is a powerful tool that adds semantic code search capabilities to AI coding assistants through MCP.

## 🚀 Quick Navigation

### Getting Started

- [🛠️ Prerequisites](getting-started/prerequisites.md) - What you need before starting

- [🔍 Environment Variables](getting-started/environment-variables.md) - How to configure environment variables for MCP

- [⚡ Quick Start Guide](getting-started/quick-start.md) - Get up and running in 1 minutes

### Components

- [MCP Server](../packages/mcp/README.md) - The MCP server of Claude Context

- [VSCode Extension](../packages/vscode-extension/README.md) - The VSCode extension of Claude Context

- [Core Package](../packages/core/README.md) - The core package of Claude Context

### Dive Deep

- [File Inclusion & Exclusion Rules](dive-deep/file-inclusion-rules.md) - Detailed explanation of file inclusion and exclusion rules

- [Asynchronous Indexing Workflow](dive-deep/asynchronous-indexing-workflow.md) - Detailed explanation of asynchronous indexing workflow

### Troubleshooting

- [❓ FAQ](troubleshooting/faq.md) - Frequently asked questions

- [🐛 Troubleshooting Guide](troubleshooting/troubleshooting-guide.md) - Troubleshooting guide

## 🔗 External Resources

- [GitHub Repository](https://github.com/zilliztech/claude-context)

- [VSCode Marketplace](https://marketplace.visualstudio.com/items?itemName=zilliz.semanticcodesearch)

- [npm - Core Package](https://www.npmjs.com/package/@zilliz/claude-context-core)

- [npm - MCP Server](https://www.npmjs.com/package/@zilliz/claude-context-mcp)

- [Zilliz Cloud](https://cloud.zilliz.com)

## 💬 Support

- **Issues**: [GitHub Issues](https://github.com/zilliztech/claude-context/issues)

- **Discord**: [Join our Discord](https://discord.gg/mKc3R95yE5)

```

--------------------------------------------------------------------------------

/examples/basic-usage/README.md:

--------------------------------------------------------------------------------

```markdown

# Basic Usage Example

This example demonstrates the basic usage of Claude Context.

## Prerequisites

1. **OpenAI API Key**: Set your OpenAI API key for embeddings:

```bash

export OPENAI_API_KEY="your-openai-api-key"

```

2. **Milvus Server**: Make sure Milvus server is running:

- You can also use fully managed Milvus on [Zilliz Cloud](https://zilliz.com/cloud).

In this case, set the `MILVUS_ADDRESS` as the Public Endpoint and `MILVUS_TOKEN` as the Token like this:

```bash

export MILVUS_ADDRESS="https://your-cluster.zillizcloud.com"

export MILVUS_TOKEN="your-zilliz-token"

```

- You can also set up a Milvus server on [Docker or Kubernetes](https://milvus.io/docs/install-overview.md). In this setup, please use the server address and port as your `uri`, e.g.`http://localhost:19530`. If you enable the authentication feature on Milvus, set the `token` as `"<your_username>:<your_password>"`, otherwise there is no need to set the token.

```bash

export MILVUS_ADDRESS="http://localhost:19530"

export MILVUS_TOKEN="<your_username>:<your_password>"

```

## Running the Example

1. Install dependencies:

```bash

pnpm install

```

2. Set environment variables (see examples above)

3. Run the example:

```bash

pnpm run start

```

## What This Example Does

1. **Indexes Codebase**: Indexes the entire Claude Context project

2. **Performs Searches**: Executes semantic searches for different code patterns

3. **Shows Results**: Displays search results with similarity scores and file locations

## Expected Output

```

🚀 Claude Context Real Usage Example

===============================

...

🔌 Connecting to vector database at: ...

📖 Starting to index codebase...

🗑️ Existing index found, clearing it first...

📊 Indexing stats: 45 files, 234 code chunks

🔍 Performing semantic search...

🔎 Search: "vector database operations"

1. Similarity: 89.23%

File: /path/to/packages/core/src/vectordb/milvus-vectordb.ts

Language: typescript

Lines: 147-177

Preview: async search(collectionName: string, queryVector: number[], options?: SearchOptions)...

🎉 Example completed successfully!

```

```

--------------------------------------------------------------------------------

/packages/chrome-extension/README.md:

--------------------------------------------------------------------------------

```markdown

# GitHub Code Vector Search Chrome Extension

A Chrome extension for indexing and semantically searching GitHub repository code, powered by Claude Context.

> 📖 **New to Claude Context?** Check out the [main project README](../../README.md) for an overview and setup instructions.

## Features

- 🔍 **Semantic Search**: Intelligent code search on GitHub repositories based on semantic understanding

- 📁 **Repository Indexing**: Automatically index GitHub repositories and build semantic vector database

- 🎯 **Context Search**: Search related code by selecting code snippets directly on GitHub

- 🔧 **Multi-platform Support**: Support for OpenAI and VoyageAI as embedding providers

- 💾 **Vector Storage**: Integrated with Milvus vector database for efficient storage and retrieval

- 🌐 **GitHub Integration**: Seamlessly integrates with GitHub's interface

- 📱 **Cross-Repository**: Search across multiple indexed repositories

- ⚡ **Real-time**: Index and search code as you browse GitHub

## Installation

### From Chrome Web Store

> **Coming Soon**: Extension will be available on Chrome Web Store

### Manual Installation (Development)

1. **Build the Extension**:

```bash

cd packages/chrome-extension

pnpm build

```

2. **Load in Chrome**:

- Open Chrome and navigate to `chrome://extensions/`

- Enable "Developer mode" in the top right

- Click "Load unpacked" and select the `dist` folder

- The extension should now appear in your extensions list

## Quick Start

1. **Configure Settings**:

- Click the extension icon in Chrome toolbar

- Go to Options/Settings

- Configure embedding provider and API Key

- Set up Milvus connection details

2. **Index a Repository**:

- Navigate to any GitHub repository

- Click the "Index Repository" button that appears in the sidebar

- Wait for indexing to complete

3. **Start Searching**:

- Use the search box that appears on GitHub repository pages

- Enter natural language queries like "function that handles authentication"

- Click on results to navigate to the code

## Configuration

The extension can be configured through the options page:

- **Embedding Provider**: Choose between OpenAI or VoyageAI

- **Embedding Model**: Select specific model (e.g., `text-embedding-3-small`)

- **API Key**: Your embedding provider API key

- **Milvus Settings**: Vector database connection details

- **GitHub Token**: Personal access token for private repositories (optional)

## Permissions

The extension requires the following permissions:

- **Storage**: To save configuration and index metadata

- **Scripting**: To inject search UI into GitHub pages

- **Unlimited Storage**: For storing vector embeddings locally

- **Host Permissions**: Access to GitHub.com and embedding provider APIs

## File Structure

- `src/content.ts` - Main content script that injects UI into GitHub pages

- `src/background.ts` - Background service worker for extension lifecycle

- `src/options.ts` - Options page for configuration

- `src/config/milvusConfig.ts` - Milvus connection configuration

- `src/milvus/chromeMilvusAdapter.ts` - Browser-compatible Milvus adapter

- `src/storage/indexedRepoManager.ts` - Repository indexing management

- `src/stubs/` - Browser compatibility stubs for Node.js modules

## Development Features

- **Browser Compatibility**: Node.js modules adapted for browser environment

- **WebAssembly Support**: Optimized for browser performance

- **Offline Capability**: Local storage for indexed repositories

- **Progress Tracking**: Real-time indexing progress indicators

- **Error Handling**: Graceful degradation and user feedback

## Usage Examples

### Basic Search

1. Navigate to a GitHub repository

2. Enter query: "error handling middleware"

3. Browse semantic search results

### Context Search

1. Select code snippet on GitHub

2. Right-click and choose "Search Similar Code"

3. View related code across the repository

### Multi-Repository Search

1. Index multiple repositories

2. Use the extension popup to search across all indexed repos

3. Filter results by repository or file type

## Contributing

This Chrome extension is part of the Claude Context monorepo. Please see:

- [Main Contributing Guide](../../CONTRIBUTING.md) - General contribution guidelines

- [Chrome Extension Contributing](CONTRIBUTING.md) - Specific development guide for this extension

## Related Packages

- **[@zilliz/claude-context-core](../core)** - Core indexing engine used by this extension

- **[@zilliz/claude-context-vscode-extension](../vscode-extension)** - VSCode integration

- **[@zilliz/claude-context-mcp](../mcp)** - MCP server integration

## Tech Stack

- **TypeScript** - Type-safe development

- **Chrome Extension Manifest V3** - Modern extension architecture

- **Webpack** - Module bundling and optimization

- **Claude Context Core** - Semantic search engine

- **Milvus Vector Database** - Vector storage and retrieval

- **OpenAI/VoyageAI Embeddings** - Text embedding generation

## Browser Support

- Chrome 88+

- Chromium-based browsers (Edge, Brave, etc.)

## License

MIT - See [LICENSE](../../LICENSE) for details

```

--------------------------------------------------------------------------------

/evaluation/README.md:

--------------------------------------------------------------------------------

```markdown

# Claude Context MCP Evaluation

This directory contains the evaluation framework and experimental results for comparing the efficiency of code retrieval using Claude Context MCP versus traditional grep-only approaches.

## Overview

We conducted a controlled experiment to measure the impact of adding Claude Context MCP tool to a baseline coding agent. The evaluation demonstrates significant improvements in token efficiency while maintaining comparable retrieval quality.

## Experimental Design

We designed a controlled experiment comparing two coding agents performing identical retrieval tasks. The baseline agent uses simple tools including read, grep, and edit functions. The enhanced agent adds Claude Context MCP tool to this same foundation. Both agents work on the same dataset using the same model to ensure fair comparison. We use [LangGraph MCP and ReAct framework](https://langchain-ai.github.io/langgraph/agents/mcp/#use-mcp-tools) to implement it.

We selected 30 instances from Princeton NLP's [SWE-bench_Verified](https://openai.com/index/introducing-swe-bench-verified/) dataset, filtering for 15-60 minute difficulty problems with exactly 2 file modifications. This subset represents typical coding tasks and enables quick validation. The dataset generation is implemented in [`generate_subset_json.py`](./generate_subset_json.py).

We chose [GPT-4o-mini](https://platform.openai.com/docs/models/gpt-4o-mini) as the default model for cost-effective considerations.

We ran each method 3 times independently, giving us 6 total runs for statistical reliability. We measured token usage, tool calls, retrieval precision, recall, and F1-score across all runs. The main entry point for running evaluations is [`run_evaluation.py`](./run_evaluation.py).

## Key Results

### Performance Summary

| Metric | Baseline (Grep Only) | With Claude Context MCP | Improvement |

|--------|---------------------|--------------------------|-------------|

| **Average F1-Score** | 0.40 | 0.40 | Comparable |

| **Average Token Usage** | 73,373 | 44,449 | **-39.4%** |

| **Average Tool Calls** | 8.3 | 5.3 | **-36.3%** |

### Key Findings

**Dramatic Efficiency Gains**:

With Claude Context MCP, we achieved:

- **39.4% reduction** in token consumption (28,924 tokens saved per instance)

- **36.3% reduction** in tool calls (3.0 fewer calls per instance)

## Conclusion

The results demonstrate that Claude Context MCP provides:

### Immediate Benefits

- **Cost Efficiency**: ~40% reduction in token usage directly reduces operational costs

- **Speed Improvement**: Fewer tool calls and tokens mean faster code localization and task completion

- **Better Quality**: This also means that, under the constraint of limited token context length, using Claude Context yields better retrieval and answer results.

### Strategic Advantages

- **Better Resource Utilization**: Under fixed token budgets, Claude Context MCP enables handling more tasks

- **Wider Usage Scenarios**: Lower per-task costs enable broader usage scenarios

- **Improved User Experience**: Faster responses with maintained accuracy

## Running the Evaluation

To reproduce these results:

1. **Install Dependencies**:

For python environment, you can use `uv` to install the lockfile dependencies.

```bash

cd evaluation && uv sync

source .venv/bin/activate

```

For node environment, make sure your `node` version is `Node.js >= 20.0.0 and < 24.0.0`.

Our evaluation results are tested on `[email protected]`, you can change the `claude-context` mcp server setting in the `retrieval/custom.py` file to get the latest version or use a development version.

2. **Set Environment Variables**:

```bash

export OPENAI_API_KEY=your_openai_api_key

export MILVUS_ADDRESS=your_milvus_address

```

For more configuration details, refer the `claude-context` mcp server settings in the `retrieval/custom.py` file.

```bash

export GITHUB_TOKEN=your_github_token

```

You need also prepare a `GITHUB_TOKEN` for automatically cloning the repositories, refer to [SWE-bench documentation](https://www.swebench.com/SWE-bench/guides/create_rag_datasets/#example-usage) for more details.

3. **Generate Dataset**:

```bash

python generate_subset_json.py

```

4. **Run Baseline Evaluation**:

```bash

python run_evaluation.py --retrieval_types grep --output_dir retrieval_results_grep

```

5. **Run Enhanced Evaluation**:

```bash

python run_evaluation.py --retrieval_types cc,grep --output_dir retrieval_results_both

```

6. **Analyze Results**:

```bash

python analyze_and_plot_mcp_efficiency.py

```

The evaluation framework is designed to be reproducible and can be easily extended to test additional configurations or datasets. Due to the proprietary nature of LLMs, exact numerical results may vary between runs and cannot be guaranteed to be identical. However, the core conclusions drawn from the analysis remain consistent and robust across different runs.

## Results Visualization

*The chart above shows the dramatic efficiency improvements achieved by Claude Context MCP. The token usage and tool calls are significantly reduced.*

## Case Study

For detailed analysis of why grep-only approaches have limitations and how semantic search addresses these challenges, please refer to our **[Case Study](./case_study/)** which provides in-depth comparisons and analysis on the this experiment results.

```

--------------------------------------------------------------------------------

/packages/vscode-extension/README.md:

--------------------------------------------------------------------------------

```markdown

# Semantic Code Search VSCode Extension

[](https://marketplace.visualstudio.com/items?itemName=zilliz.semanticcodesearch)

A code indexing and semantic search VSCode extension powered by [Claude Context](https://github.com/zilliztech/claude-context).

> 📖 **New to Claude Context?** Check out the [main project README](https://github.com/zilliztech/claude-context/blob/master/README.md) for an overview and setup instructions.

## Features

- 🔍 **Semantic Search**: Intelligent code search based on semantic understanding, not just keyword matching

- 📁 **Codebase Indexing**: Automatically index entire codebase and build semantic vector database

- 🎯 **Context Search**: Search related code by selecting code snippets

- 🔧 **Multi-platform Support**: Support for OpenAI, VoyageAI, Gemini, and Ollama as embedding providers

- 💾 **Vector Storage**: Integrated with Milvus vector database for efficient storage and retrieval

## Requirements

- **VSCode Version**: 1.74.0 or higher

## Installation

### From VS Code Marketplace

1. **Direct Link**: [Install from VS Code Marketplace](https://marketplace.visualstudio.com/items?itemName=zilliz.semanticcodesearch)

2. **Manual Search**:

- Open Extensions view in VSCode (Ctrl+Shift+X or Cmd+Shift+X on Mac)

- Search for "Semantic Code Search"

- Click Install

## Quick Start

### Configuration

The first time you open Claude Context, you need to click on Settings icon to configure the relevant options.

#### Embedding Configuration

Configure your embedding provider to convert code into semantic vectors.

**OpenAI Configuration:**

- `Embedding Provider`: Select "OpenAI" from the dropdown

- `Model name`: Choose the embedding model (e.g., `text-embedding-3-small`, `text-embedding-3-large`)

- `OpenAI API key`: Your OpenAI API key for authentication

- `Custom API endpoint URL`: Optional custom endpoint (defaults to `https://api.openai.com/v1`)

**Other Supported Providers:**

- **Gemini**: Google's state-of-the-art embedding model with Matryoshka representation learning

- **VoyageAI**: Alternative embedding provider with competitive performance

- **Ollama**: For local embedding models

#### Code Splitter Configuration

Configure how your code is split into chunks for indexing.

**Splitter Settings:**

- `Splitter Type`: Choose between "AST Splitter" (syntax-aware) or "LangChain Splitter" (character-based)

- `Chunk Size`: Maximum size of each code chunk (default: 1000 characters)

- `Chunk Overlap`: Number of overlapping characters between chunks (default: 200 characters)

> **Recommendation**: Use AST Splitter for better semantic understanding of code structure.

#### Zilliz Cloud configuration

Get a free Milvus vector database on Zilliz Cloud.

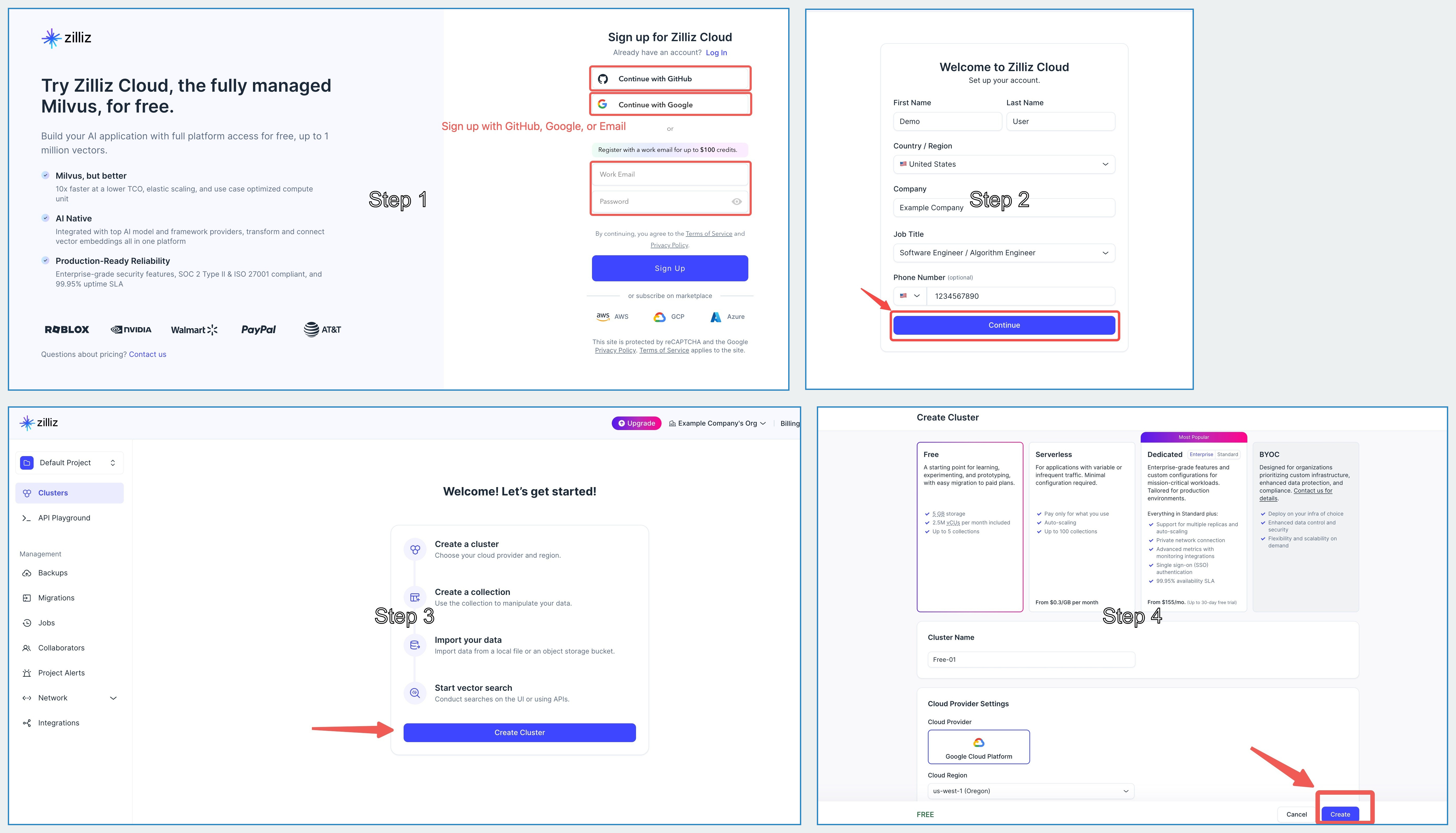

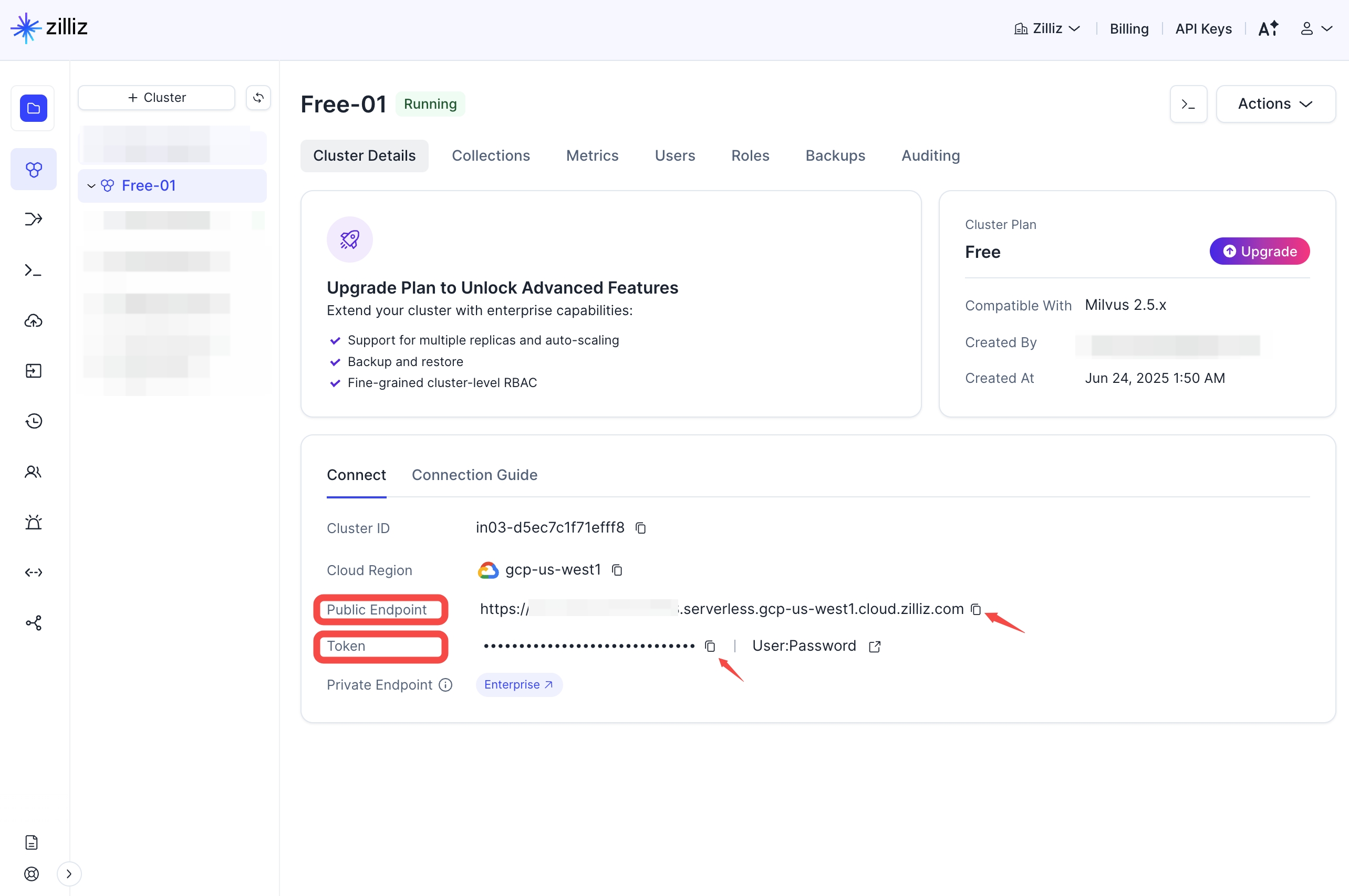

Claude Context needs a vector database. You can [sign up](https://cloud.zilliz.com/signup?utm_source=github&utm_medium=referral&utm_campaign=2507-codecontext-readme) on Zilliz Cloud to get a free Serverless cluster.

After creating your cluster, open your Zilliz Cloud console and copy both the **public endpoint** and your **API key**.

These will be used as `your-zilliz-cloud-public-endpoint` and `your-zilliz-cloud-api-key` in the configuration examples.

Keep both values handy for the configuration steps below.

If you need help creating your free vector database or finding these values, see the [Zilliz Cloud documentation](https://docs.zilliz.com/docs/create-cluster) for detailed instructions.

```bash

MILVUS_ADDRESS=your-zilliz-cloud-public-endpoint

MILVUS_TOKEN=your-zilliz-cloud-api-key

```

### Usage

1. **Set the Configuration**:

- Open VSCode Settings (Ctrl+, or Cmd+, on Mac)

- Search for "Semantic Code Search"

- Set the configuration

2. **Index Codebase**:

- Open Command Palette (Ctrl+Shift+P or Cmd+Shift+P on Mac)

- Run "Semantic Code Search: Index Codebase"

3. **Start Searching**:

- Open Semantic Code Search panel in sidebar

- Enter search query or right-click on selected code to search

## Commands

- `Semantic Code Search: Semantic Search` - Perform semantic search

- `Semantic Code Search: Index Codebase` - Index current codebase

- `Semantic Code Search: Clear Index` - Clear the index

## Configuration

- `semanticCodeSearch.embeddingProvider.provider` - Embedding provider (OpenAI/VoyageAI/Gemini/Ollama)

- `semanticCodeSearch.embeddingProvider.model` - Embedding model to use

- `semanticCodeSearch.embeddingProvider.apiKey` - API key for embedding provider

- `semanticCodeSearch.embeddingProvider.baseURL` - Custom API endpoint URL (optional, for OpenAI and Gemini)

- `semanticCodeSearch.embeddingProvider.outputDimensionality` - Output dimension for Gemini (supports 3072, 1536, 768, 256)

- `semanticCodeSearch.milvus.address` - Milvus server address

## Contributing

This VSCode extension is part of the Claude Context monorepo. Please see:

- [Main Contributing Guide](https://github.com/zilliztech/claude-context/blob/master/CONTRIBUTING.md) - General contribution guidelines

- [VSCode Extension Contributing](https://github.com/zilliztech/claude-context/blob/master/packages/vscode-extension/CONTRIBUTING.md) - Specific development guide for this extension

## Related Packages

- **[@zilliz/claude-context-core](https://github.com/zilliztech/claude-context/tree/master/packages/core)** - Core indexing engine used by this extension

- **[@zilliz/claude-context-mcp](https://github.com/zilliztech/claude-context/tree/master/packages/mcp)** - Alternative MCP server integration

## Tech Stack

- TypeScript

- VSCode Extension API

- Milvus Vector Database

- OpenAI/VoyageAI Embeddings

## License

MIT - See [LICENSE](https://github.com/zilliztech/claude-context/blob/master/LICENSE) for details

```

--------------------------------------------------------------------------------

/evaluation/case_study/django_14170/README.md:

--------------------------------------------------------------------------------

```markdown

# Django 14170: YearLookup ISO Year Bug

A comparison showing how both methods(grep + semantic search) outperform grep-based approaches for complex Django ORM bugs.

<details>

<summary><strong>📋 Original GitHub Issue</strong></summary>

## Query optimization in YearLookup breaks filtering by "__iso_year"

The optimization to use BETWEEN instead of the EXTRACT operation in YearLookup is also registered for the "__iso_year" lookup, which breaks the functionality provided by ExtractIsoYear when used via the lookup.

**Problem**: When using `__iso_year` filters, the `YearLookup` class applies standard BETWEEN optimization which works for calendar years but fails for ISO week-numbering years.

**Example**:

```python

# This should use EXTRACT('isoyear' FROM ...) but incorrectly uses BETWEEN

DTModel.objects.filter(start_date__iso_year=2020)

# Generates: WHERE "start_date" BETWEEN 2020-01-01 AND 2020-12-31

# Should be: WHERE EXTRACT('isoyear' FROM "start_date") = 2020

```

**Files**: `django/db/models/lookups.py`, `django/db/backends/base/operations.py`

</details>

## Results

| Metric | Both Methods | Grep Method | Improvement |

|--------|-------------|-------------|-------------|

| **Token Usage** | 9,036 | 130,819 | **93% less** |

| **Tool Calls** | 3 | 8 | **62% fewer** |

| **Success Rate** | 50% hit | 0% hit | **Much better** |

## How Each Method Found the Problem

### 🎯 Both Methods

```log

🔧 search_code('YearLookup')

⚙️ Found 10 results for query: "YearLookup" in codebase

1. Code snippet (python) [repo__django__django]

Location: django/db/models/lookups.py:568-577

Context: YearExact class with get_bound_params method

2. Code snippet (python) [repo__django__django]

Location: django/db/models/lookups.py:538-569

Context: YearLookup base class with year_lookup_bounds method

🔧 edit_file(django/db/models/lookups.py)

⚙️ Arguments: {'file_path': 'django/db/models/lookups.py',

'old_string': 'def get_direct_rhs_sql(self, connection, rhs):\n return connection.operators[self.lookup_name] % rhs',

'new_string': "def get_direct_rhs_sql(self, connection, rhs):\n if self.lookup_name == '__iso_year':\n return 'EXTRACT(ISOYEAR FROM %s)' % rhs\n return connection.operators[self.lookup_name] % rhs"}

Result: Successfully modified file

🔧 edit_file(django/db/models/lookups.py)

⚙️ Arguments: {'file_path': 'django/db/models/lookups.py',

'old_string': 'def year_lookup_bounds(self, connection, year):',

'new_string': "def year_lookup_bounds(self, connection, year):\n if self.lookup_name == '__iso_year':\n return (year - 1, year)"}

Result: Successfully modified file

```

**Key Success**: Semantic search immediately understood "YearLookup" as the core concept and found the exact classes that needed modification.

### 🔍 Grep Method

```log

🔧 directory_tree()

⚙️ Arguments: {'path': 'repo__django__django'}

Result: Retrieved 3000+ lines of directory structure (~50k tokens)

Problem: Massive information overload, no direct relevance

🔧 search_text('ExtractIsoYear')

⚙️ Arguments: {'pattern': 'ExtractIsoYear', 'path': 'repo__django__django'}

Result: Found 21 matches across multiple files:

- django/db/models/functions/__init__.py:5 (import statement)

- django/db/models/functions/__init__.py:31 (export list)

- django/db/models/functions/datetime.py:93 (class ExtractIsoYear)

- django/db/models/functions/datetime.py:150 (DateField.register_lookup)

Problem: Most were irrelevant imports and registrations

🔧 edit_file('django/db/models/functions/datetime.py')

⚙️ Arguments: {'old_string': 'ExtractIsoYear.register_lookup(YearExact)', 'new_string': '# ExtractIsoYear.register_lookup(YearExact)'}

Result: Successfully modified file

🔧 edit_file('django/db/models/functions/datetime.py')

⚙️ Arguments: {'old_string': 'ExtractIsoYear.register_lookup(YearGt)', 'new_string': '# ExtractIsoYear.register_lookup(YearGt)'}

Result: Successfully modified file

🔧 edit_file('django/db/models/functions/datetime.py')

⚙️ Arguments: {'old_string': 'ExtractIsoYear.register_lookup(YearGte)', 'new_string': '# ExtractIsoYear.register_lookup(YearGte)'}

Result: Successfully modified file

🔧 edit_file('django/db/models/functions/datetime.py')

⚙️ Arguments: {'old_string': 'ExtractIsoYear.register_lookup(YearLt)', 'new_string': '# ExtractIsoYear.register_lookup(YearLt)'}

Result: Successfully modified file

🔧 edit_file('django/db/models/functions/datetime.py')

⚙️ Arguments: {'old_string': 'ExtractIsoYear.register_lookup(YearLte)', 'new_string': '# ExtractIsoYear.register_lookup(YearLte)'}

Result: Successfully modified file

🔧 edit_file('tests/db_functions/datetime/test_extract_trunc.py')

⚙️ Arguments: {'old_string': "self.assertEqual(DTModel.objects.filter(start_datetime__iso_year=ExtractIsoYear('start_datetime')).count(), 2)", 'new_string': "# self.assertEqual(DTModel.objects.filter(start_datetime__iso_year=ExtractIsoYear('start_datetime')).count(), 2)"}

Result: Successfully modified file

```

**Key Failure**: Text search focused on wrong components (ExtractIsoYear registrations) instead of the actual optimization logic (YearLookup class).

## Why Grep + Semantic Search Won

1. **Conceptual Understanding**: Recognized "YearLookup" as the optimization mechanism causing the issue

2. **Direct Navigation**: Immediately found the relevant classes without noise

3. **Root Cause Focus**: Identified the boundary calculation logic that needed ISO year awareness

4. **Efficient Execution**: 3 targeted operations vs 8 scattered attempts

## Why Grep Failed

1. **Information Overload**: Wasted 50k tokens on irrelevant directory structure

2. **Surface-Level Matching**: Focused on "ExtractIsoYear" strings instead of understanding the optimization conflict

3. **Wrong Solution**: Applied superficial fixes (commenting registrations) instead of addressing the core logic

4. **No Context**: Couldn't understand the relationship between YearLookup optimization and ISO year boundaries

The semantic approach understood that the issue was about **optimization logic**, not just **ISO year functionality**, leading to the correct architectural fix.

## Files

- [`both_conversation.log`](./both_conversation.log) - Both methods interaction log

- [`grep_conversation.log`](./grep_conversation.log) - Grep method interaction log

- [`both_result.json`](./both_result.json) - Both methods performance metrics

- [`grep_result.json`](./grep_result.json) - Grep method performance metrics

```

--------------------------------------------------------------------------------

/evaluation/case_study/pydata_xarray_6938/README.md:

--------------------------------------------------------------------------------

```markdown

# Xarray 6938: swap_dims() Mutation Bug

A case study showing how both methods(grep + semantic search) efficiently identify object mutation bugs in scientific computing libraries.

<details>

<summary><strong>📋 Original GitHub Issue</strong></summary>

## `.swap_dims()` can modify original object

**Problem**: In certain cases, `.swap_dims()` modifies the original object instead of returning a new one, violating immutability expectations.

**Example**:

```python

import numpy as np

import xarray as xr

nz = 11

ds = xr.Dataset({

"y": ("z", np.random.rand(nz)),

"lev": ("z", np.arange(nz) * 10),

})

# This should not modify ds, but it does

ds2 = ds.swap_dims(z="lev").rename_dims(lev="z").reset_index("lev").reset_coords()

ds2.swap_dims(z='lev') # This modifies ds2['lev'] unexpectedly

# ds2['lev'] now has dimension 'lev' instead of 'z'

```

**Expected**: Original objects should remain unchanged after swap_dims operations.

**Actual**: Original object's internal state gets modified during dimension swapping.

**Files**: `xarray/core/dataset.py`, `xarray/core/variable.py`

</details>

## Results

| Metric | Both Methods | Grep Method | Improvement |

|--------|-------------|-------------|-------------|

| **Token Usage** | 15,826 | 41,999 | **62% less** |

| **Tool Calls** | 3 | 11 | **73% fewer** |

| **Success Rate** | 50% hit | 50% hit | **Equal accuracy** |

## How Each Method Found the Problem

### 🎯 Both Methods

```log

🔧 search_code('.swap_dims()')

⚙️ Arguments: {'path': 'repo__pydata__xarray', 'query': '.swap_dims()', 'limit': 10}

Result: Found 10 results for query: ".swap_dims()" in codebase

1. Code snippet (python) [repo__pydata__xarray]

Location: xarray/tests/test_formatting_html.py:67-76

2. Code snippet (python) [repo__pydata__xarray]

Location: xarray/tests/test_dataset.py:2926-2979

🔧 edit_file('xarray/core/dataset.py')

⚙️ Arguments: {'old_string': 'result_dims = {dims_dict.get(dim, dim) for dim in self.dims}', 'new_string': 'result_dims = {dims_dict.get(dim, dim) for dim in self.dims if dim in dims_dict or dim not in self.variables}'}

Result: Successfully modified file

🔧 edit_file('xarray/core/dataarray.py')

⚙️ Arguments: {'old_string': 'ds = self._to_temp_dataset().swap_dims(dims_dict)', 'new_string': 'ds = self._to_temp_dataset().swap_dims(dims_dict) # Ensure that ds does not modify the original DataArray'}

Result: Successfully modified file

```

**Key Success**: Semantic search immediately located the actual `swap_dims()` implementations and understood the functional context.

### 🔍 Grep Method

```log

🔧 list_directory('repo__pydata__xarray')

⚙️ Result: Retrieved directory structure with multiple subdirectories

Problem: Basic directory navigation without targeted search

🔧 list_directory('repo__pydata__xarray/xarray')

⚙️ Result: Found core directories: backends, coding, core, plot, static, tests

🔧 list_directory('repo__pydata__xarray/xarray/core')

⚙️ Result: Found 50+ Python files including dataset.py, dataarray.py, variable.py

🔧 search_text('swap_dims')

⚙️ Arguments: {'pattern': 'swap_dims', 'path': 'repo__pydata__xarray/xarray/core', 'include': '*.py'}

Result: Found 21 matches across core files:

- xarray/core/common.py:600 (Dataset.swap_dims reference)

- xarray/core/dataarray.py:753 (".rename() or .swap_dims() instead.")

- xarray/core/dataarray.py:2026 (DataArray.swap_dims reference)

- xarray/core/dataarray.py:2044 (def swap_dims method)

Problem: Many matches across different contexts

🔧 read_file('xarray/core/dataarray.py', offset=2000, limit=40)

⚙️ Result: Read lines 2001-2040 showing rename method documentation

🔧 read_file('xarray/core/dataset.py', offset=3600, limit=40)

⚙️ Result: Read lines 3601-3640 showing rename_dims method

🔧 read_file('xarray/core/common.py', offset=590, limit=10)

⚙️ Result: Read lines 591-600 showing assign_coords documentation

🔧 edit_file('xarray/core/dataarray.py')

⚙️ Arguments: {'old_string': ' def swap_dims(', 'new_string': ' def swap_dims(self, dims_dict: Mapping[str, str] | None = None, **dims: str) -> DataArray:\n """Returns a new DataArray with swapped dimensions..."""'}

Result: Successfully modified file

🔧 edit_file('xarray/core/dataset.py')

⚙️ Arguments: {'old_string': ' def swap_dims(', 'new_string': ' def swap_dims(self, dims_dict: Mapping[str, str] | None = None, **dims: str) -> Dataset:\n """Returns a new Dataset with swapped dimensions..."""'}

Result: Successfully modified file

🔧 edit_file('xarray/core/common.py')

⚙️ Arguments: {'old_string': 'Dataset.swap_dims', 'new_string': '# The swap_dims method is being used here without modification, so ensure appropriate usage.'}

Result: Successfully modified file

```

**Key Inefficiency**: Used massive list_directory and read_file operations, instead of focusing on relevant methods.

## Why Grep + Semantic Search Won

1. **Method-Level Understanding**: Recognized `.swap_dims()` as a specific method with defined behavior

2. **Functional Context**: Understood the relationship between Dataset, DataArray, and Variable classes

3. **Efficient Navigation**: Directly located method implementations without searching through tests and docs

4. **Mutation Awareness**: Connected the symptom (unexpected changes) to likely causes (shared references)

## Why Grep Was Less Efficient

1. **Information Overload**: Generated hundreds of matches for common terms like 'swap_dims' and 'dimension'

2. **Context Loss**: Treated method names as text strings rather than functional concepts

3. **Inefficient Reading**: Required reading large portions of files to understand basic functionality

## Key Insights

**Semantic Search Advantages**:

- **Concept Recognition**: Understands `.swap_dims()` as a method concept, not just text

- **Relationship Mapping**: Automatically connects related classes and methods

- **Relevance Filtering**: Prioritizes implementation code over tests and documentation

- **Efficiency**: Achieves same accuracy with 62% fewer tokens and 73% fewer operations

**Traditional Search Limitations**:

- **Text Literalism**: Treats code as text without understanding semantic meaning

- **Noise Generation**: Produces excessive irrelevant matches across different contexts

- **Resource Waste**: Consumes 2.6x more computational resources for equivalent results

- **Scalability Issues**: Becomes increasingly inefficient with larger codebases

This case demonstrates semantic search's particular value for scientific computing libraries where **data integrity** is paramount and **mutation bugs** can corrupt research results.

## Files

- [`both_conversation.log`](./both_conversation.log) - Both methods interaction log

- [`grep_conversation.log`](./grep_conversation.log) - Grep method interaction log

- [`both_result.json`](./both_result.json) - Both methods performance metrics

- [`grep_result.json`](./grep_result.json) - Grep method performance metrics

```

--------------------------------------------------------------------------------

/packages/core/README.md:

--------------------------------------------------------------------------------

```markdown

# @zilliz/claude-context-core

The core indexing engine for Claude Context - a powerful tool for semantic search and analysis of codebases using vector embeddings and AI.

[](https://www.npmjs.com/package/@zilliz/claude-context-core)

[](https://www.npmjs.com/package/@zilliz/claude-context-core)

> 📖 **New to Claude Context?** Check out the [main project README](../../README.md) for an overview and quick start guide.

## Installation

```bash

npm install @zilliz/claude-context-core

```

### Prepare Environment Variables

#### OpenAI API key

See [OpenAI Documentation](https://platform.openai.com/docs/api-reference) for more details to get your API key.

```bash

OPENAI_API_KEY=your-openai-api-key

```

#### Zilliz Cloud configuration

Get a free Milvus vector database on Zilliz Cloud.

Claude Context needs a vector database. You can [sign up](https://cloud.zilliz.com/signup?utm_source=github&utm_medium=referral&utm_campaign=2507-codecontext-readme) on Zilliz Cloud to get a free Serverless cluster.

After creating your cluster, open your Zilliz Cloud console and copy both the **public endpoint** and your **API key**.

These will be used as `your-zilliz-cloud-public-endpoint` and `your-zilliz-cloud-api-key` in the configuration examples.

Keep both values handy for the configuration steps below.

If you need help creating your free vector database or finding these values, see the [Zilliz Cloud documentation](https://docs.zilliz.com/docs/create-cluster) for detailed instructions.

```bash

MILVUS_ADDRESS=your-zilliz-cloud-public-endpoint

MILVUS_TOKEN=your-zilliz-cloud-api-key

```

> 💡 **Tip**: For easier configuration management across different usage scenarios, consider using [global environment variables](../../docs/getting-started/environment-variables.md).

## Quick Start

```typescript

import {

Context,

OpenAIEmbedding,

MilvusVectorDatabase

} from '@zilliz/claude-context-core';

// Initialize embedding provider

const embedding = new OpenAIEmbedding({

apiKey: process.env.OPENAI_API_KEY || 'your-openai-api-key',

model: 'text-embedding-3-small'

});

// Initialize vector database

const vectorDatabase = new MilvusVectorDatabase({

address: process.env.MILVUS_ADDRESS || 'localhost:19530',

token: process.env.MILVUS_TOKEN || ''

});

// Create context instance

const context = new Context({

embedding,

vectorDatabase

});

// Index a codebase

const stats = await context.indexCodebase('./my-project', (progress) => {

console.log(`${progress.phase} - ${progress.percentage}%`);

});

console.log(`Indexed ${stats.indexedFiles} files with ${stats.totalChunks} chunks`);

// Search the codebase

const results = await context.semanticSearch(

'./my-project',

'function that handles user authentication',

5

);

results.forEach(result => {

console.log(`${result.relativePath}:${result.startLine}-${result.endLine}`);

console.log(`Score: ${result.score}`);

console.log(result.content);

});

```

## Features

- **Multi-language Support**: Index TypeScript, JavaScript, Python, Java, C++, and many other programming languages

- **Semantic Search**: Find code using natural language queries powered by AI embeddings

- **Flexible Architecture**: Pluggable embedding providers and vector databases

- **Smart Chunking**: Intelligent code splitting that preserves context and structure

- **Batch Processing**: Efficient processing of large codebases with progress tracking

- **Pattern Matching**: Built-in ignore patterns for common build artifacts and dependencies

- **Incremental File Synchronization**: Efficient change detection using Merkle trees to only re-index modified files

## Embedding Providers

- **OpenAI Embeddings** (`text-embedding-3-small`, `text-embedding-3-large`, `text-embedding-ada-002`)

- **VoyageAI Embeddings** - High-quality embeddings optimized for code (`voyage-code-3`, `voyage-3.5`, etc.)

- **Gemini Embeddings** - Google's embedding models (`gemini-embedding-001`)

- **Ollama Embeddings** - Local embedding models via Ollama

## Vector Database Support

- **Milvus/Zilliz Cloud** - High-performance vector database

## Code Splitters

- **AST Code Splitter** - AST-based code splitting with automatic fallback (default)

- **LangChain Code Splitter** - Character-based code chunking

## Configuration

### ContextConfig

```typescript

interface ContextConfig {

embedding?: Embedding; // Embedding provider

vectorDatabase?: VectorDatabase; // Vector database instance (required)

codeSplitter?: Splitter; // Code splitting strategy

supportedExtensions?: string[]; // File extensions to index

ignorePatterns?: string[]; // Patterns to ignore

customExtensions?: string[]; // Custom extensions from MCP

customIgnorePatterns?: string[]; // Custom ignore patterns from MCP

}

```

### Supported File Extensions (Default)

```typescript

[

// Programming languages

'.ts', '.tsx', '.js', '.jsx', '.py', '.java', '.cpp', '.c', '.h', '.hpp',

'.cs', '.go', '.rs', '.php', '.rb', '.swift', '.kt', '.scala', '.m', '.mm',

// Text and markup files

'.md', '.markdown', '.ipynb'

]

```

### Default Ignore Patterns

- Build and dependency directories: `node_modules/**`, `dist/**`, `build/**`, `out/**`, `target/**`

- Version control: `.git/**`, `.svn/**`, `.hg/**`

- IDE files: `.vscode/**`, `.idea/**`, `*.swp`, `*.swo`

- Cache directories: `.cache/**`, `__pycache__/**`, `.pytest_cache/**`, `coverage/**`

- Minified files: `*.min.js`, `*.min.css`, `*.bundle.js`, `*.map`

- Log and temp files: `logs/**`, `tmp/**`, `temp/**`, `*.log`

- Environment files: `.env`, `.env.*`, `*.local`

## API Reference

### Context

#### Methods

- `indexCodebase(path, progressCallback?, forceReindex?)` - Index an entire codebase

- `reindexByChange(path, progressCallback?)` - Incrementally re-index only changed files

- `semanticSearch(path, query, topK?, threshold?, filterExpr?)` - Search indexed code semantically

- `hasIndex(path)` - Check if codebase is already indexed

- `clearIndex(path, progressCallback?)` - Remove index for a codebase

- `updateIgnorePatterns(patterns)` - Update ignore patterns

- `addCustomIgnorePatterns(patterns)` - Add custom ignore patterns

- `addCustomExtensions(extensions)` - Add custom file extensions

- `updateEmbedding(embedding)` - Switch embedding provider

- `updateVectorDatabase(vectorDB)` - Switch vector database

- `updateSplitter(splitter)` - Switch code splitter

### Search Results

```typescript

interface SemanticSearchResult {

content: string; // Code content

relativePath: string; // File path relative to codebase root

startLine: number; // Starting line number

endLine: number; // Ending line number

language: string; // Programming language

score: number; // Similarity score (0-1)

}

```

## Examples

### Using VoyageAI Embeddings

```typescript

import { Context, MilvusVectorDatabase, VoyageAIEmbedding } from '@zilliz/claude-context-core';

// Initialize with VoyageAI embedding provider

const embedding = new VoyageAIEmbedding({

apiKey: process.env.VOYAGEAI_API_KEY || 'your-voyageai-api-key',

model: 'voyage-code-3'

});

const vectorDatabase = new MilvusVectorDatabase({

address: process.env.MILVUS_ADDRESS || 'localhost:19530',

token: process.env.MILVUS_TOKEN || ''

});

const context = new Context({

embedding,

vectorDatabase

});

```

### Custom File Filtering

```typescript

const context = new Context({

embedding,

vectorDatabase,

supportedExtensions: ['.ts', '.js', '.py', '.java'],

ignorePatterns: [

'node_modules/**',

'dist/**',

'*.spec.ts',

'*.test.js'

]

});

```

## File Synchronization Architecture

Claude Context implements an intelligent file synchronization system that efficiently tracks and processes only the files that have changed since the last indexing operation. This dramatically improves performance when working with large codebases.

### How It Works

The file synchronization system uses a **Merkle tree-based approach** combined with SHA-256 file hashing to detect changes:

#### 1. File Hashing

- Each file in the codebase is hashed using SHA-256

- File hashes are computed based on file content, not metadata

- Hashes are stored with relative file paths for consistency across different environments

#### 2. Merkle Tree Construction

- All file hashes are organized into a Merkle tree structure

- The tree provides a single root hash that represents the entire codebase state

- Any change to any file will cause the root hash to change

#### 3. Snapshot Management

- File synchronization state is persisted to `~/.context/merkle/` directory

- Each codebase gets a unique snapshot file based on its absolute path hash

- Snapshots contain both file hashes and serialized Merkle tree data

#### 4. Change Detection Process

1. **Quick Check**: Compare current Merkle root hash with stored snapshot

2. **Detailed Analysis**: If root hashes differ, perform file-by-file comparison

3. **Change Classification**: Categorize changes into three types:

- **Added**: New files that didn't exist before

- **Modified**: Existing files with changed content

- **Removed**: Files that were deleted from the codebase

#### 5. Incremental Updates

- Only process files that have actually changed

- Update vector database entries only for modified chunks

- Remove entries for deleted files

- Add entries for new files

## Contributing

This package is part of the Claude Context monorepo. Please see:

- [Main Contributing Guide](../../CONTRIBUTING.md) - General contribution guidelines

- [Core Package Contributing](CONTRIBUTING.md) - Specific development guide for this package

## Related Packages

- **[@claude-context/mcp](../mcp)** - MCP server that uses this core engine

- **[VSCode Extension](../vscode-extension)** - VSCode extension built on this core

## License

MIT - See [LICENSE](../../LICENSE) for details

```

--------------------------------------------------------------------------------

/packages/mcp/README.md:

--------------------------------------------------------------------------------

```markdown

# @zilliz/claude-context-mcp

Model Context Protocol (MCP) integration for Claude Context - A powerful MCP server that enables AI assistants and agents to index and search codebases using semantic search.

[](https://www.npmjs.com/package/@zilliz/claude-context-mcp)

[](https://www.npmjs.com/package/@zilliz/claude-context-mcp)

> 📖 **New to Claude Context?** Check out the [main project README](../../README.md) for an overview and setup instructions.

## 🚀 Use Claude Context as MCP in Claude Code and others

Model Context Protocol (MCP) allows you to integrate Claude Context with your favorite AI coding assistants, e.g. Claude Code.

## Quick Start

### Prerequisites

Before using the MCP server, make sure you have:

- API key for your chosen embedding provider (OpenAI, VoyageAI, Gemini, or Ollama setup)

- Milvus vector database (local or cloud)

> 💡 **Setup Help:** See the [main project setup guide](../../README.md#-quick-start) for detailed installation instructions.

### Prepare Environment Variables

#### Embedding Provider Configuration

Claude Context MCP supports multiple embedding providers. Choose the one that best fits your needs:

> 📋 **Quick Reference**: For a complete list of environment variables and their descriptions, see the [Environment Variables Guide](../../docs/getting-started/environment-variables.md).

```bash

# Supported providers: OpenAI, VoyageAI, Gemini, Ollama

EMBEDDING_PROVIDER=OpenAI

```

<details>

<summary><strong>1. OpenAI Configuration (Default)</strong></summary>

OpenAI provides high-quality embeddings with excellent performance for code understanding.

```bash

# Required: Your OpenAI API key

OPENAI_API_KEY=sk-your-openai-api-key

# Optional: Specify embedding model (default: text-embedding-3-small)

EMBEDDING_MODEL=text-embedding-3-small

# Optional: Custom API base URL (for Azure OpenAI or other compatible services)

OPENAI_BASE_URL=https://api.openai.com/v1

```

**Available Models:**

See `getSupportedModels` in [`openai-embedding.ts`](https://github.com/zilliztech/claude-context/blob/master/packages/core/src/embedding/openai-embedding.ts) for the full list of supported models.

**Getting API Key:**

1. Visit [OpenAI Platform](https://platform.openai.com/api-keys)

2. Sign in or create an account

3. Generate a new API key

4. Set up billing if needed

</details>

<details>

<summary><strong>2. VoyageAI Configuration</strong></summary>

VoyageAI offers specialized code embeddings optimized for programming languages.

```bash

# Required: Your VoyageAI API key

VOYAGEAI_API_KEY=pa-your-voyageai-api-key

# Optional: Specify embedding model (default: voyage-code-3)

EMBEDDING_MODEL=voyage-code-3

```

**Available Models:**

See `getSupportedModels` in [`voyageai-embedding.ts`](https://github.com/zilliztech/claude-context/blob/master/packages/core/src/embedding/voyageai-embedding.ts) for the full list of supported models.

**Getting API Key:**

1. Visit [VoyageAI Console](https://dash.voyageai.com/)

2. Sign up for an account

3. Navigate to API Keys section

4. Create a new API key

</details>

<details>

<summary><strong>3. Gemini Configuration</strong></summary>

Google's Gemini provides competitive embeddings with good multilingual support.

```bash

# Required: Your Gemini API key

GEMINI_API_KEY=your-gemini-api-key

# Optional: Specify embedding model (default: gemini-embedding-001)

EMBEDDING_MODEL=gemini-embedding-001

# Optional: Custom API base URL (for custom endpoints)

GEMINI_BASE_URL=https://generativelanguage.googleapis.com/v1beta

```

**Available Models:**

See `getSupportedModels` in [`gemini-embedding.ts`](https://github.com/zilliztech/claude-context/blob/master/packages/core/src/embedding/gemini-embedding.ts) for the full list of supported models.

**Getting API Key:**

1. Visit [Google AI Studio](https://aistudio.google.com/)

2. Sign in with your Google account

3. Go to "Get API key" section

4. Create a new API key

</details>

<details>

<summary><strong>4. Ollama Configuration (Local/Self-hosted)</strong></summary>

Ollama allows you to run embeddings locally without sending data to external services.

```bash

# Required: Specify which Ollama model to use

EMBEDDING_MODEL=nomic-embed-text

# Optional: Specify Ollama host (default: http://127.0.0.1:11434)

OLLAMA_HOST=http://127.0.0.1:11434

```

**Setup Instructions:**

1. Install Ollama from [ollama.ai](https://ollama.ai/)

2. Pull the embedding model:

```bash

ollama pull nomic-embed-text

```

3. Ensure Ollama is running:

```bash

ollama serve

```

</details>

#### Get a free vector database on Zilliz Cloud

Claude Context needs a vector database. You can [sign up](https://cloud.zilliz.com/signup?utm_source=github&utm_medium=referral&utm_campaign=2507-codecontext-readme) on Zilliz Cloud to get an API key.

Copy your Personal Key to replace `your-zilliz-cloud-api-key` in the configuration examples.

```bash

MILVUS_TOKEN=your-zilliz-cloud-api-key

```

#### Embedding Batch Size

You can set the embedding batch size to optimize the performance of the MCP server, depending on your embedding model throughput. The default value is 100.

```bash

EMBEDDING_BATCH_SIZE=512

```

#### Custom File Processing (Optional)

You can configure custom file extensions and ignore patterns globally via environment variables:

```bash

# Additional file extensions to include beyond defaults

CUSTOM_EXTENSIONS=.vue,.svelte,.astro,.twig

# Additional ignore patterns to exclude files/directories

CUSTOM_IGNORE_PATTERNS=temp/**,*.backup,private/**,uploads/**

```

These settings work in combination with tool parameters - patterns from both sources will be merged together.

## Usage with MCP Clients

<details>

<summary><strong>Claude Code</strong></summary>

Use the command line interface to add the Claude Context MCP server:

```bash

# Add the Claude Context MCP server

claude mcp add claude-context -e OPENAI_API_KEY=your-openai-api-key -e MILVUS_TOKEN=your-zilliz-cloud-api-key -- npx @zilliz/claude-context-mcp@latest

```

See the [Claude Code MCP documentation](https://docs.anthropic.com/en/docs/claude-code/mcp) for more details about MCP server management.

</details>

<details>

<summary><strong>OpenAI Codex CLI</strong></summary>

Codex CLI uses TOML configuration files:

1. Create or edit the `~/.codex/config.toml` file.

2. Add the following configuration:

```toml

# IMPORTANT: the top-level key is `mcp_servers` rather than `mcpServers`.

[mcp_servers.claude-context]

command = "npx"

args = ["@zilliz/claude-context-mcp@latest"]

env = { "OPENAI_API_KEY" = "your-openai-api-key", "MILVUS_TOKEN" = "your-zilliz-cloud-api-key" }

# Optional: override the default 10s startup timeout

startup_timeout_ms = 20000

```

3. Save the file and restart Codex CLI to apply the changes.

</details>

<details>

<summary><strong>Gemini CLI</strong></summary>

Gemini CLI requires manual configuration through a JSON file:

1. Create or edit the `~/.gemini/settings.json` file.

2. Add the following configuration:

```json

{

"mcpServers": {

"claude-context": {

"command": "npx",

"args": ["@zilliz/claude-context-mcp@latest"],

"env": {

"OPENAI_API_KEY": "your-openai-api-key",

"MILVUS_TOKEN": "your-zilliz-cloud-api-key"

}

}

}

}

```

3. Save the file and restart Gemini CLI to apply the changes.

</details>

<details>

<summary><strong>Qwen Code</strong></summary>

Create or edit the `~/.qwen/settings.json` file and add the following configuration:

```json

{

"mcpServers": {

"claude-context": {

"command": "npx",

"args": ["@zilliz/claude-context-mcp@latest"],

"env": {

"OPENAI_API_KEY": "your-openai-api-key",

"MILVUS_TOKEN": "your-zilliz-cloud-api-key"

}

}

}

}

```

</details>

<details>

<summary><strong>Cursor</strong></summary>

Go to: `Settings` -> `Cursor Settings` -> `MCP` -> `Add new global MCP server`

Pasting the following configuration into your Cursor `~/.cursor/mcp.json` file is the recommended approach. You may also install in a specific project by creating `.cursor/mcp.json` in your project folder. See [Cursor MCP docs](https://docs.cursor.com/context/model-context-protocol) for more info.

**OpenAI Configuration (Default):**

```json

{

"mcpServers": {

"claude-context": {

"command": "npx",

"args": ["-y", "@zilliz/claude-context-mcp@latest"],

"env": {

"EMBEDDING_PROVIDER": "OpenAI",

"OPENAI_API_KEY": "your-openai-api-key",

"MILVUS_TOKEN": "your-zilliz-cloud-api-key"

}

}

}

}

```

**VoyageAI Configuration:**

```json

{

"mcpServers": {

"claude-context": {

"command": "npx",

"args": ["-y", "@zilliz/claude-context-mcp@latest"],

"env": {

"EMBEDDING_PROVIDER": "VoyageAI",

"VOYAGEAI_API_KEY": "your-voyageai-api-key",

"EMBEDDING_MODEL": "voyage-code-3",

"MILVUS_TOKEN": "your-zilliz-cloud-api-key"

}

}

}

}

```

**Gemini Configuration:**

```json

{

"mcpServers": {

"claude-context": {

"command": "npx",

"args": ["-y", "@zilliz/claude-context-mcp@latest"],

"env": {

"EMBEDDING_PROVIDER": "Gemini",

"GEMINI_API_KEY": "your-gemini-api-key",

"MILVUS_TOKEN": "your-zilliz-cloud-api-key"

}

}

}

}

```

**Ollama Configuration:**

```json

{

"mcpServers": {

"claude-context": {

"command": "npx",

"args": ["-y", "@zilliz/claude-context-mcp@latest"],

"env": {

"EMBEDDING_PROVIDER": "Ollama",

"EMBEDDING_MODEL": "nomic-embed-text",

"OLLAMA_HOST": "http://127.0.0.1:11434",

"MILVUS_TOKEN": "your-zilliz-cloud-api-key"

}

}

}

}

```

</details>

<details>

<summary><strong>Void</strong></summary>

Go to: `Settings` -> `MCP` -> `Add MCP Server`

Add the following configuration to your Void MCP settings:

```json

{

"mcpServers": {

"code-context": {

"command": "npx",

"args": ["-y", "@zilliz/claude-context-mcp@latest"],

"env": {

"OPENAI_API_KEY": "your-openai-api-key",

"MILVUS_ADDRESS": "your-zilliz-cloud-public-endpoint",

"MILVUS_TOKEN": "your-zilliz-cloud-api-key"

}

}

}

}

```

</details>

<details>

<summary><strong>Claude Desktop</strong></summary>

Add to your Claude Desktop configuration:

```json

{

"mcpServers": {

"claude-context": {

"command": "npx",

"args": ["@zilliz/claude-context-mcp@latest"],

"env": {

"OPENAI_API_KEY": "your-openai-api-key",

"MILVUS_TOKEN": "your-zilliz-cloud-api-key"

}

}

}

}

```

</details>

<details>

<summary><strong>Windsurf</strong></summary>

Windsurf supports MCP configuration through a JSON file. Add the following configuration to your Windsurf MCP settings:

```json

{

"mcpServers": {